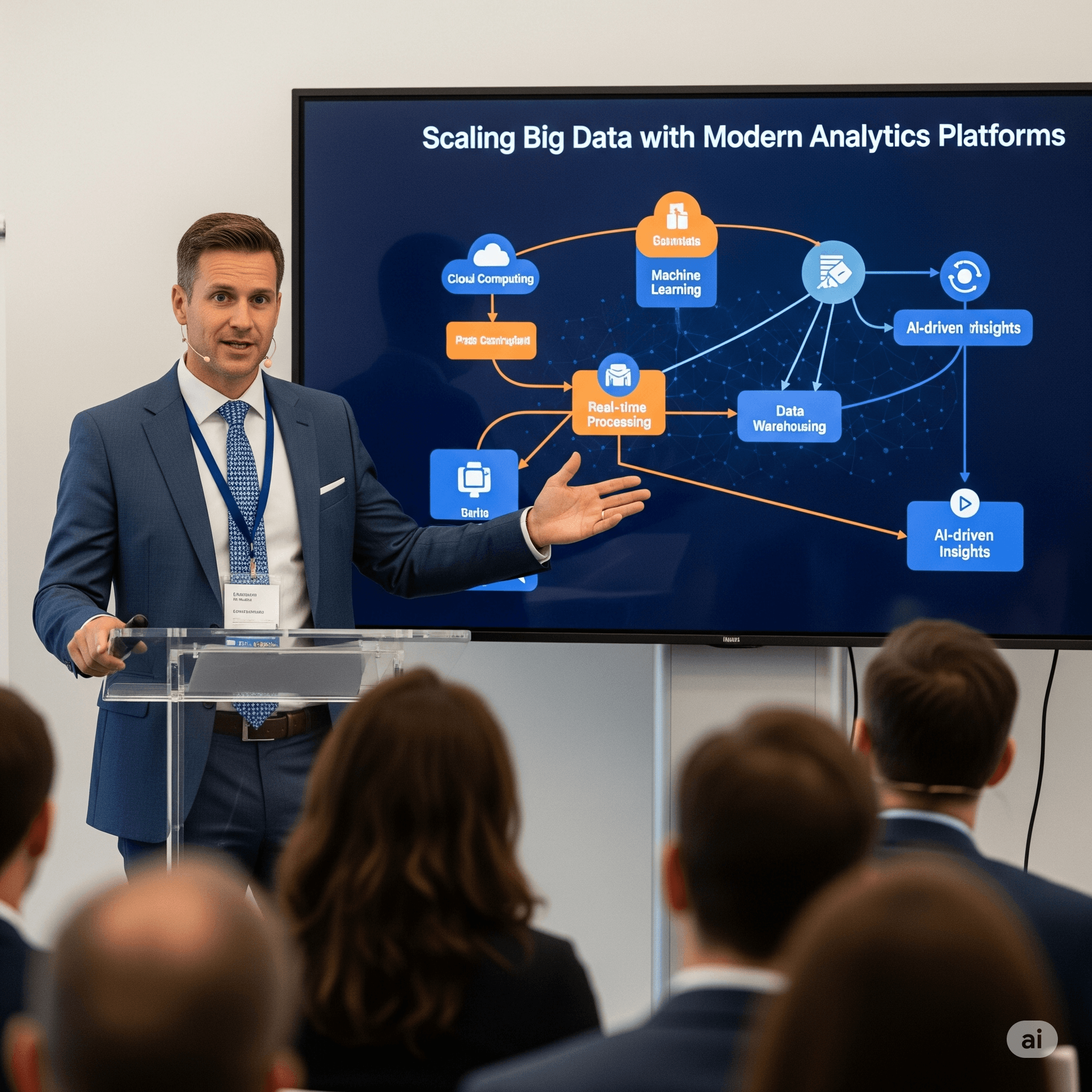

Scaling Big Data with Modern Analytics Platforms

Discover how modern analytics platforms enable organizations to scale big data solutions through advanced architectures, AI-powered automation, and flexible deployment strategies that deliver high-performance insights across massive datasets.

Introduction

Understanding Modern Big Data Scaling Challenges

Today's big data scaling challenges extend beyond the traditional concerns of volume, velocity, and variety to include personalization, governance, security, and user experience across distributed architectures. Organizations must provide flexible, self-service platforms that empower users regardless of technical expertise while maintaining centralized governance and security controls across multi-cloud and hybrid environments. This means managing structured, semi-structured, and unstructured data while ensuring real-time processing, maintaining data quality, and supporting concurrent access from thousands of users with varying analytical needs.

Scaling Paradigm Shift

Modern big data scaling focuses on delivering personalized, adaptable analytics environments that meet diverse user needs throughout their data journey, rather than simply handling large volumes of queries or supporting numerous concurrent users.

- Multi-Tenant Requirements: Supporting multiple clients, departments, or business units from a single platform while maintaining data isolation and customization

- Self-Service Analytics: Enabling business users to access and analyze data without requiring extensive technical expertise or IT support

- Real-Time Processing: Handling streaming data and providing instant insights for time-sensitive business decisions and operational monitoring

- Cross-Platform Integration: Seamlessly connecting diverse data sources, processing engines, and visualization tools across different technology stacks

- Elastic Resource Management: Automatically scaling computing and storage resources based on demand while optimizing costs and performance

Architecture Principles for Scalable Analytics

Effective big data scaling requires architectural designs that prioritize horizontal scaling through distributed systems, microservices architectures, and cloud-native components that can handle increasing data volumes while maintaining high performance and reliability. Key architectural principles include data partitioning across multiple machines, stateless architecture design for easier scaling, elastic cloud environments with auto-scaling capabilities, and distributed storage systems with replication and fault tolerance. Modern scalable architectures also incorporate caching strategies, load balancing mechanisms, and disaster recovery capabilities to ensure consistent performance and availability even during peak loads or system failures.

| Architecture Component | Scaling Strategy | Implementation Approach | Business Benefits |
|---|---|---|---|
| Distributed Storage | Horizontal partitioning and replication across nodes | Data lakes, distributed file systems, cloud storage services | Unlimited scalability, high availability, cost optimization |
| Processing Engines | Parallel processing and in-memory computing | Apache Spark, distributed SQL engines, stream processing | Faster query performance, real-time analytics, efficient resource utilization |
| Container Orchestration | Microservices and containerized deployments | Kubernetes, Docker, serverless functions | Flexible deployment, automatic scaling, operational efficiency |
| Caching Systems | Multi-layered caching strategies | Redis, Memcached, distributed caches | Improved response times, reduced infrastructure load, better user experience |
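
To make the partitioning and replication principle concrete, here is a minimal Python sketch. It assumes a simple modulo-hash scheme and a hypothetical list of node names; production systems usually rely on consistent hashing or a managed placement service, so treat this as an illustration rather than a recommended design.

```python
import hashlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition using a stable hash.

    Python's built-in hash() is salted per process, so a digest is used
    to keep the mapping identical across machines and restarts.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def replica_nodes(partition: int, nodes: list[str], replication_factor: int) -> list[str]:
    """Place a partition on `replication_factor` consecutive nodes (hypothetical policy)."""
    if not 1 <= replication_factor <= len(nodes):
        raise ValueError("replication_factor must be between 1 and len(nodes)")
    start = partition % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(replication_factor)]
```

With three nodes and a replication factor of 2, every partition lives on two distinct machines, so losing one node leaves a copy available. Note that plain modulo hashing moves most keys when the partition count changes, which is the main reason real systems prefer consistent hashing.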

Modern Analytics Platform Capabilities

Modern analytics platforms provide comprehensive capabilities that address the full data analytics lifecycle from ingestion and processing to visualization and governance while supporting diverse user personas and technical requirements. These platforms integrate seamlessly with existing BI tools and data platforms, whether deployed on cloud or on-premises, enabling organizations to leverage current infrastructure investments while gaining advanced analytics capabilities. Key capabilities include AI-powered smart aggregation technology for lightning-fast query performance, semantic layers that democratize data access for non-technical users, and unified governance frameworks that maintain data consistency and security across distributed environments.

Platform Integration Benefits

Modern analytics platforms integrate directly with widely used BI tools and data platforms, providing a semantic performance layer that bridges the gap between complex data sources and business users while delivering fast insights on massive datasets without moving the data.

Multi-Tenant Architecture and Customization

Multi-tenant architecture enables organizations to support multiple clients, departments, or business units from a single analytics platform while providing each tenant with customized analytics environments tailored to their specific needs and requirements. This architectural approach allows each tenant to receive individualized data models, metrics, dashboards, and user interfaces while maintaining centralized administration and governance controls. For example, SaaS providers can offer personalized analytics for hundreds of clients through a single administrative layer, while global enterprises can define corporate-level metrics that are automatically distributed to regional offices while allowing local customization.
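
The corporate-metrics-with-local-customization pattern can be sketched as a base definition plus per-tenant overrides. The metric names, the `emea` tenant, and the rule that tenants may change presentation but not the metric expression are all assumptions made for this example, not features of any particular platform.

```python
from copy import deepcopy

# Hypothetical corporate-level metric definitions shared by every tenant.
CORPORATE_METRICS = {
    "revenue": {"expression": "SUM(order_total)", "format": "currency", "currency": "USD"},
    "active_users": {"expression": "COUNT(DISTINCT user_id)", "format": "integer"},
}

# Hypothetical local customizations, keyed by tenant id.
TENANT_OVERRIDES = {
    "emea": {"revenue": {"currency": "EUR"}},
}


def metrics_for_tenant(tenant_id: str) -> dict:
    """Return the corporate metrics with this tenant's overrides applied."""
    resolved = deepcopy(CORPORATE_METRICS)  # never mutate the shared definitions
    for name, override in TENANT_OVERRIDES.get(tenant_id, {}).items():
        if name not in resolved:
            raise KeyError(f"tenant {tenant_id!r} overrides unknown metric {name!r}")
        if "expression" in override:
            raise ValueError("tenants may not redefine a corporate metric expression")
        resolved[name].update(override)
    return resolved
```

A tenant without overrides simply receives the corporate definitions, which keeps the central layer as the default source of truth.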

AI-Powered Optimization and Automation

Artificial intelligence and machine learning capabilities are integral to modern big data scaling, providing automated resource optimization, intelligent query acceleration, and predictive analytics that enhance both system performance and user experience. AI-powered smart aggregation technology optimizes query performance on platforms like Snowflake, Amazon Redshift, and BigQuery by automatically creating and managing pre-computed aggregations that accelerate analytical workloads. Advanced analytics techniques including machine learning, data mining, and predictive modeling extract valuable insights from complex datasets, while automated anomaly detection identifies unusual patterns that may indicate opportunities or risks requiring immediate attention.

- Intelligent Query Optimization: AI algorithms that automatically optimize query execution plans and resource allocation for maximum performance

- Predictive Scaling: Machine learning models that anticipate resource needs and scale infrastructure proactively based on usage patterns

- Automated Data Management: AI-driven data lifecycle management, quality monitoring, and governance enforcement across large datasets

- Smart Aggregation: Intelligent pre-computation and caching of frequently accessed data combinations to accelerate query response times

- Anomaly Detection: Advanced algorithms that identify unusual patterns, outliers, and trends in massive datasets for proactive decision-making
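
The smart aggregation idea in the list above can be illustrated with a small sketch: compute a summary once, then answer coarser queries from the summary instead of rescanning raw rows. This shows only the pre-computation step for an additive measure (a sum); how a platform decides which aggregates to build is not covered, and the column names are hypothetical.

```python
from collections import defaultdict


def build_aggregate(rows, dimensions, measure):
    """Pre-compute SUM(measure) grouped by the given dimensions."""
    totals = defaultdict(float)
    for row in rows:
        key = tuple(row[d] for d in dimensions)
        totals[key] += row[measure]
    return dict(totals)


def rollup(aggregate, dimensions, keep):
    """Answer a coarser GROUP BY from the aggregate rather than the raw rows.

    This is only valid for additive measures such as sums and counts;
    averages or distinct counts need different summaries.
    """
    positions = [dimensions.index(d) for d in keep]
    totals = defaultdict(float)
    for key, value in aggregate.items():
        totals[tuple(key[i] for i in positions)] += value
    return dict(totals)
```

A query by region alone can then be served from a region-by-product aggregate, which is typically far smaller than the underlying fact table.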

Semantic Layers and Data Democratization

Semantic layers play a crucial role in democratizing data access by simplifying complex data structures and translating them into business-friendly terms that enable non-technical users to interact with data through self-service analytics and conversational interfaces. A centralized semantic layer ensures that core metrics, data definitions, and business rules remain consistent across all tenants and environments while enabling local customization and interpretation. This approach eliminates data silos and inconsistencies across departments while providing a single source of truth for accurate decision-making, enabling business users to query data using natural language and familiar business terminology.
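
As a rough illustration of how a semantic layer translates business terms into queries, the sketch below maps metric and dimension names to SQL fragments. The table name `fact_orders`, the column names, and the model layout are invented for the example; real semantic layers also handle joins, filters, and access rules.

```python
# Hypothetical semantic model: business-friendly names mapped to physical SQL.
SEMANTIC_MODEL = {
    "table": "fact_orders",
    "metrics": {"total revenue": "SUM(order_total)", "order count": "COUNT(*)"},
    "dimensions": {"region": "customer_region", "month": "order_month"},
}


def compile_query(metric: str, by: list[str], model: dict = SEMANTIC_MODEL) -> str:
    """Translate a business-level request into a SQL string."""
    if metric not in model["metrics"]:
        raise KeyError(f"unknown metric: {metric}")
    unknown = [d for d in by if d not in model["dimensions"]]
    if unknown:
        raise KeyError(f"unknown dimensions: {unknown}")
    columns = [model["dimensions"][d] for d in by]
    select = ", ".join(columns + [f'{model["metrics"][metric]} AS "{metric}"'])
    sql = f'SELECT {select} FROM {model["table"]}'
    if columns:
        sql += " GROUP BY " + ", ".join(columns)
    return sql
```

Because every user goes through the same mapping, "total revenue" resolves to one definition regardless of which tool issues the request. Only names defined in the model are accepted, so user input never reaches the SQL string directly.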

Cloud-Native and Hybrid Deployment Strategies

Modern analytics platforms provide flexible deployment options including cloud-native, on-premises, and hybrid architectures that enable organizations to choose optimal deployment models based on data sovereignty, compliance, and performance requirements. Deploy-anywhere capabilities allow organizations to maintain consistent analytics experiences across multiple cloud providers, on-premises infrastructure, or hybrid combinations while ensuring data governance and security requirements are met. This flexibility is particularly important for global organizations that must comply with varying data privacy regulations while maintaining performance and user experience standards across different regions and jurisdictions.

| Deployment Model | Use Cases | Advantages | Considerations |
|---|---|---|---|
| Cloud-Native | Rapid scaling, global accessibility, cost optimization | Elastic resources, managed services, reduced operational overhead | Data sovereignty, compliance requirements, network latency |
| On-Premises | Data sovereignty, regulatory compliance, security requirements | Complete control, customization flexibility, compliance assurance | Infrastructure investment, operational complexity, scaling limitations |
| Hybrid Cloud | Data residency requirements, phased migration, risk mitigation | Flexibility, gradual transition, optimal resource placement | Complexity management, integration challenges, security coordination |
| Multi-Cloud | Vendor diversification, best-of-breed services, risk distribution | Avoided vendor lock-in, service optimization, global coverage | Management complexity, skills requirements, cost optimization |

Real-Time Analytics and Stream Processing

Real-time analytics capabilities are essential for modern big data platforms, enabling organizations to process streaming data and provide immediate insights for time-sensitive business decisions and operational monitoring. Stream processing architectures handle continuous data flows from IoT devices, social media feeds, transaction systems, and operational sensors while maintaining low latency and high throughput requirements. Advanced stream processing platforms provide complex event processing, real-time aggregations, and immediate alerting capabilities that enable organizations to respond quickly to changing conditions and emerging opportunities.

Real-Time Processing Benefits

Real-time analytics enables organizations to detect fraudulent transactions within milliseconds, optimize supply chain operations based on current conditions, and provide personalized recommendations that adapt to customer behavior in real time.
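
A real-time aggregation with alerting can be sketched as a tumbling (fixed, non-overlapping) window over events. The event shape `(timestamp_seconds, key, amount)` and the alert threshold are assumptions for this example, and the code processes an in-memory list; a real stream processor would also deal with late or out-of-order events.

```python
from collections import defaultdict


def tumbling_window_totals(events, window_seconds):
    """Sum amounts per (window_start, key) over fixed, non-overlapping windows.

    `events` is an iterable of (timestamp_seconds, key, amount) tuples.
    """
    totals = defaultdict(float)
    for timestamp, key, amount in events:
        window_start = (timestamp // window_seconds) * window_seconds
        totals[(window_start, key)] += amount
    return dict(totals)


def alerts(totals, threshold):
    """Return the (window_start, key) pairs whose total exceeds the threshold."""
    return sorted(k for k, v in totals.items() if v > threshold)
```

For instance, with 60-second windows and a threshold of 100, a card that spends 40 and then 70 within the same minute is flagged, while the same spending spread over two windows is not.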

Analytics as Code and DevOps Integration

Analytics as Code represents an advanced approach to managing analytics assets including metrics, dashboards, and data models through version control systems and automated deployment pipelines. This methodology enables teams to define standardized KPIs and analytics components as code, deploy them consistently across multiple tenants and environments, and maintain governance standards while supporting agile development practices. DevOps integration ensures that analytics development follows software engineering best practices including continuous integration, automated testing, version control, and collaborative development workflows.
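
One way to picture Analytics as Code is a metric definition kept in version control and checked by an automated test before deployment. The `MetricDefinition` fields and the validation rules below are hypothetical choices for illustration; teams would adapt them to their own governance standards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    """A KPI defined as code (hypothetical schema)."""
    name: str
    expression: str
    owner: str
    description: str = ""


def validate_metrics(metrics):
    """Return a list of problems; an empty list means the definitions pass."""
    problems = []
    seen = set()
    for metric in metrics:
        if metric.name in seen:
            problems.append(f"duplicate metric name: {metric.name}")
        seen.add(metric.name)
        if not metric.expression.strip():
            problems.append(f"{metric.name}: empty expression")
        if not metric.owner.strip():
            problems.append(f"{metric.name}: missing owner")
    return problems
```

A continuous integration job could run `validate_metrics` on every change and block the deployment when the returned list is not empty.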

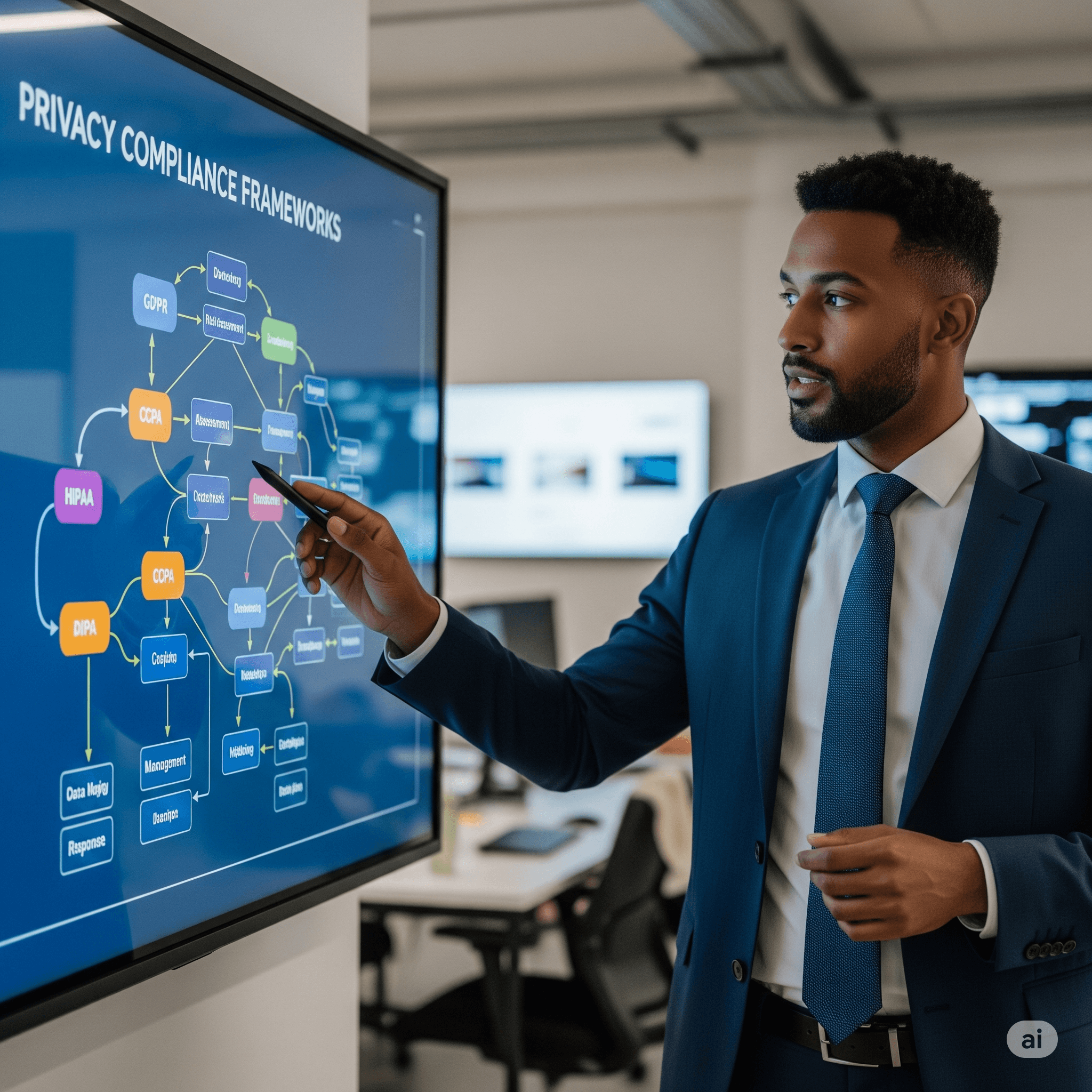

Data Security and Governance at Scale

Scaling big data analytics requires comprehensive security and governance frameworks that protect sensitive data while enabling appropriate access and usage across diverse user communities and deployment environments. Data security at scale involves implementing tenant data isolation, encryption at rest and in transit, role-based access controls, and compliance with standards and regulations such as ISO, SOC 2, GDPR, and HIPAA. Governance frameworks ensure data quality, lineage tracking, policy enforcement, and audit capabilities that maintain data integrity and regulatory compliance as analytics operations scale across global organizations.

- Data Isolation: Secure separation of tenant data and analytics environments to prevent unauthorized access and maintain privacy

- Access Control: Role-based permissions and dynamic access policies that adapt to user roles, context, and data sensitivity levels

- Compliance Automation: Automated enforcement of regulatory requirements and policy compliance across distributed analytics environments

- Audit and Lineage: Comprehensive tracking of data usage, transformations, and access patterns for governance and regulatory reporting

- Encryption and Privacy: End-to-end encryption and privacy-preserving techniques that protect sensitive data throughout the analytics lifecycle
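
The data isolation and access control items above can be combined in a deny-by-default check: a request succeeds only when the tenant matches and the role grants the action. The role names, the permission sets, and the `tenant` field on users and rows are assumptions for this sketch.

```python
# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "analyst": {"read", "export"},
    "admin": {"read", "export", "manage"},
}


def authorize(user: dict, action: str, resource_tenant: str) -> bool:
    """Deny by default: the tenant must match and the role must grant the action."""
    if user.get("tenant") != resource_tenant:
        return False  # tenant isolation is checked before any role logic
    return action in ROLE_PERMISSIONS.get(user.get("role"), set())


def visible_rows(user: dict, rows: list[dict]) -> list[dict]:
    """Filter rows down to those the user may read."""
    return [row for row in rows if authorize(user, "read", row["tenant"])]
```

Checking the tenant first means that even an administrator role in one tenant cannot reach another tenant's data. Unknown roles fall through to an empty permission set and are refused.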

Performance Optimization Strategies

Performance optimization for large-scale analytics requires sophisticated strategies that address query acceleration, resource utilization, and user experience while managing costs effectively. Modern platforms employ intelligent caching mechanisms, pre-computation strategies, and query optimization techniques that deliver sub-second response times even for complex analytical queries across massive datasets. Advanced techniques include columnar storage formats, data compression algorithms, partitioning strategies, and in-memory processing that maximize performance while minimizing resource consumption and infrastructure costs.
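
As a small illustration of the caching idea, the following sketch keeps query results for a fixed time-to-live and recomputes them once they expire. The `QueryCache` class and its parameters are hypothetical; it is a single-process, in-memory example and leaves out eviction, invalidation on data change, and thread safety.

```python
import time


class QueryCache:
    """In-memory result cache with a time-to-live per entry."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = {}   # query text -> (stored_at, result)
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, query, compute):
        """Return a fresh cached result, or call compute(query) and store it."""
        now = self._clock()
        entry = self._entries.get(query)
        if entry is not None and now - entry[0] < self._ttl:
            self.hits += 1
            return entry[1]
        self.misses += 1
        result = compute(query)
        self._entries[query] = (now, result)
        return result
```

The hit and miss counters give a simple way to check whether the chosen time-to-live actually reduces load on the underlying engine.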

Cost Optimization and Resource Management

Effective big data scaling requires intelligent cost optimization strategies that balance performance requirements with budget constraints through automated resource management and usage-based pricing models. Cloud-native platforms provide elastic scaling capabilities that automatically adjust compute and storage resources based on workload demands, ensuring optimal performance during peak periods while minimizing costs during low-usage times. Advanced cost optimization includes workload scheduling, resource pooling, and intelligent data tiering that places frequently accessed data on high-performance storage while archiving less-used data to cost-effective storage options.
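
Elastic scaling is often driven by a target-tracking rule: scale the replica count in proportion to how far current utilization sits from a target. The sketch below uses the same proportional formula as the Kubernetes Horizontal Pod Autoscaler; the function name, the percentage inputs, and the default bounds are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=20):
    """Proportional scaling: ceil(current * utilization / target), then clamp.

    Utilization values are percentages, for example 90 for 90%.
    """
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, raw))
```

Four replicas running at 90% against a 60% target scale out to six, while the same four at 30% scale in to two. The upper bound acts as a cost guardrail during unexpected spikes.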

| Optimization Strategy | Implementation Approach | Cost Impact | Performance Benefits |
|---|---|---|---|
| Elastic Scaling | Auto-scaling groups and serverless functions | Pay-per-use pricing, reduced idle costs | Optimal resource allocation, consistent performance |
| Data Tiering | Automated data lifecycle management | Storage cost optimization, archival efficiency | Fast access to active data, optimized storage performance |
| Workload Optimization | Intelligent scheduling and resource pooling | Improved utilization rates, reduced infrastructure needs | Better resource efficiency, predictable performance |
| Query Acceleration | Caching, pre-computation, optimization algorithms | Reduced compute requirements, faster execution | Sub-second response times, improved user experience |
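
The data tiering row can be illustrated with a simple age-based policy that assigns each dataset to a storage tier by how recently it was accessed. The tier names and the 30-day and 180-day thresholds are arbitrary assumptions; actual lifecycle rules depend on access patterns and storage pricing.

```python
from datetime import date


def choose_tier(last_accessed: date, today: date,
                hot_days: int = 30, warm_days: int = 180) -> str:
    """Pick a storage tier from the number of days since last access."""
    age_days = (today - last_accessed).days
    if age_days <= hot_days:
        return "hot"      # high-performance storage for active data
    if age_days <= warm_days:
        return "warm"     # cheaper storage, still directly queryable
    return "archive"      # lowest-cost storage for rarely used data
```

Running such a rule on a schedule moves data down the tiers as it cools, which is the behavior the lifecycle management approach in the table describes.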

Industry Use Cases and Success Stories

Leading organizations across various industries have successfully implemented modern analytics platforms to achieve significant improvements in query performance, cost efficiency, and user adoption while scaling their big data capabilities. Healthcare providers leverage predictive analytics for patient care optimization, financial services implement real-time fraud detection systems, and retail organizations use personalized recommendation engines that process millions of customer interactions daily. These implementations demonstrate measurable business value including reduced operational costs, improved decision-making speed, and enhanced customer experiences through data-driven insights.

Implementation Success

Organizations implementing modern analytics platforms report query performance improvements of 10-100x, cost reductions of 40-60%, and user adoption increases of 200-300% compared to traditional big data solutions.

Technology Stack and Platform Selection

Selecting appropriate technology stacks and platforms for scalable big data analytics requires careful evaluation of organizational requirements, technical capabilities, and strategic objectives. Modern analytics platforms integrate multiple components including distributed storage systems, processing engines, visualization tools, and governance frameworks while supporting diverse deployment options and integration requirements. Key selection criteria include scalability characteristics, integration capabilities, cost structures, vendor ecosystem support, and alignment with existing technology investments and skills.

Future Trends and Emerging Technologies

The future of big data analytics scaling will be shaped by emerging technologies including quantum computing, edge analytics, autonomous data management, and advanced AI capabilities that further automate and optimize analytical workloads. Quantum computing promises to solve complex optimization problems that are computationally intensive for classical systems, while edge analytics brings processing closer to data sources for reduced latency and improved real-time capabilities. Autonomous data management systems will reduce operational overhead through self-optimizing databases, automated data quality management, and intelligent resource allocation that adapts to changing workload patterns.

- Quantum Analytics: Leveraging quantum computing for complex optimization, machine learning, and cryptographic operations on massive datasets

- Edge Intelligence: Distributed analytics processing at edge locations for real-time insights and reduced data transfer requirements

- Autonomous Systems: Self-managing analytics platforms that automatically optimize performance, costs, and user experience without human intervention

- Augmented Analytics: AI-powered analytics that automatically discovers insights, generates narratives, and recommends actions for business users

- Federated Learning: Collaborative machine learning across distributed datasets while maintaining data privacy and sovereignty requirements

Implementation Best Practices and Roadmap

Successful big data analytics scaling requires a structured implementation approach that begins with clear strategy definition, progresses through pilot projects and proof-of-concepts, and evolves toward enterprise-wide deployment with comprehensive governance and optimization. Best practices include starting with well-defined use cases that demonstrate clear business value, ensuring data quality and governance foundations are established early, and building organizational capabilities through training and change management programs. Implementation roadmaps should prioritize quick wins that build momentum while establishing scalable architectures that support long-term growth and evolution.

Measuring Success and ROI

Measuring the success and return on investment of big data scaling initiatives requires comprehensive metrics that capture both technical performance improvements and business value outcomes. Key performance indicators include query response time improvements, infrastructure cost reductions, user adoption rates, and business impact measurements such as revenue increases, cost savings, and decision-making acceleration. Organizations should establish baseline measurements before implementation and continuously monitor progress to optimize platform performance and demonstrate ongoing value to stakeholders.

| Success Category | Key Metrics | Measurement Methods | Target Improvements |
|---|---|---|---|
| Technical Performance | Query response times, system throughput, availability | Performance monitoring tools, benchmarking studies | 10-100x faster queries, 99.9% uptime |
| Cost Optimization | Infrastructure costs, operational expenses, TCO | Financial analysis, cost tracking systems | 40-60% cost reduction, improved ROI |
| User Adoption | Active users, self-service usage, satisfaction scores | Usage analytics, user surveys, feedback systems | 200-300% increase in user adoption |
| Business Impact | Revenue growth, decision speed, competitive advantage | Business metrics tracking, outcome measurement | Measurable business value, strategic advantage |
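
Comparing current measurements against a recorded baseline is simple arithmetic, sketched below for the kinds of figures the table uses (a speedup factor and a percentage change). The function names are hypothetical, and the sample numbers in the note are invented inputs, not reported results.

```python
def speedup(baseline_seconds: float, current_seconds: float) -> float:
    """How many times faster the current query is than the baseline."""
    if current_seconds <= 0:
        raise ValueError("current_seconds must be positive")
    return baseline_seconds / current_seconds


def percent_change(baseline: float, current: float) -> float:
    """Signed change relative to the baseline; negative means a reduction."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) * 100.0 / baseline
```

A query that took 12 seconds before and 0.5 seconds after is a 24x speedup, and a monthly bill that drops from 100,000 to 55,000 is a 45% reduction. Recording the baseline before rollout is what makes these comparisons meaningful.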

Conclusion

Scaling big data with modern analytics platforms represents a fundamental transformation from traditional infrastructure-focused approaches to intelligent, user-centric systems that combine technical scalability with personalized experiences, self-service capabilities, and robust governance. Success requires holistic platform design that integrates AI-powered optimization, multi-tenant architectures, flexible deployment options, and comprehensive security frameworks while maintaining focus on user empowerment and business value creation. Organizations that embrace modern analytics platforms achieve significant competitive advantages through faster decision-making, improved operational efficiency, and enhanced ability to derive actionable insights from their data assets while building scalable foundations for future growth.

The future of big data analytics lies in platforms that seamlessly blend advanced technical capabilities with intuitive user experiences, enabling organizations to democratize data access while maintaining enterprise-grade security, governance, and performance at any scale. As data volumes continue to grow exponentially and business requirements become more sophisticated, the organizations that invest in modern, scalable analytics platforms will be best positioned to transform their data into strategic competitive advantages that drive innovation, growth, and success in the digital economy.
