The Future of Cloud-Native Development

Explore the future of cloud-native development and its transformative impact on modern software engineering through serverless architectures, edge computing, AI integration, and advanced container orchestration that enable scalable, resilient, and cost-effective applications.

Introduction

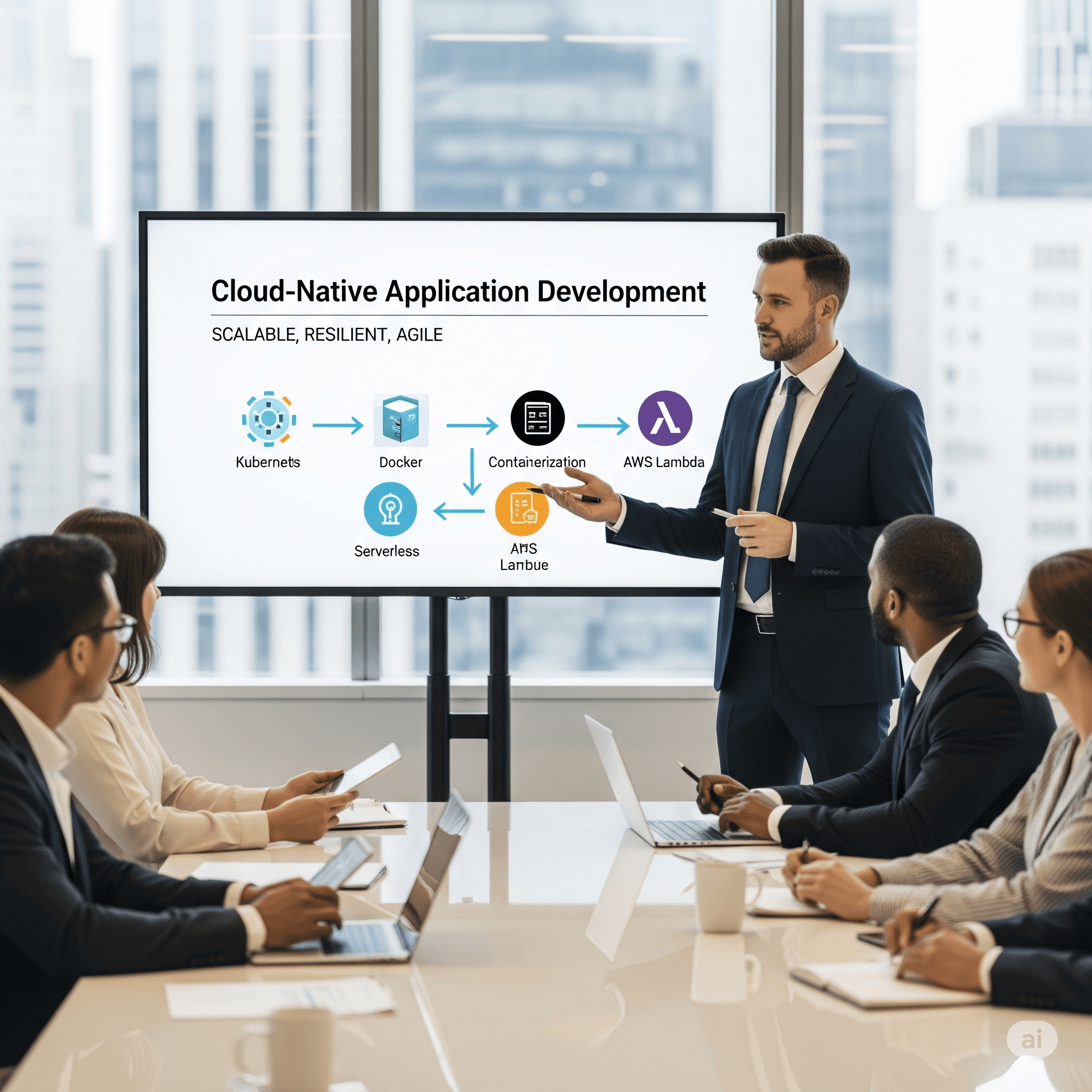

Evolution of Cloud-Native Architecture

Cloud-native architecture has evolved from simple cloud deployments to sophisticated, distributed systems that embody the core principles of scalability, resilience, and adaptability through microservices-based design, containerization, and automation. The foundation of cloud-native applications rests on breaking down monolithic structures into smaller, independent services that communicate through well-defined APIs, enabling teams to update, test, and scale individual components without affecting the entire system. This architectural transformation enables organizations like Netflix to automatically scale resources to accommodate millions of simultaneous users during peak traffic events, while companies like Spotify can independently update and scale each service component such as user profiles, playlists, and recommendations for improved efficiency and reliability.

Architectural Benefits

Cloud-native applications can scale automatically to meet demand while maintaining resilience through distributed design, enabling organizations to handle varying workloads without manual intervention and continue operating even if some components fail.

- Microservices Architecture: Breaking applications into smaller, independent services that communicate through APIs for greater adaptability and simplified maintenance

- Containerization: Packaging applications with their dependencies into portable units that ensure consistency across development, testing, and production environments

- Orchestration: Using platforms like Kubernetes to automate deployment, scaling, and management of containerized applications across distributed infrastructure

- API-First Design: Building applications around well-defined interfaces that enable seamless integration and interoperability between services

- DevOps Integration: Embracing continuous integration and deployment practices that automate and streamline the software delivery process

Serverless Computing and Function-as-a-Service

Serverless computing represents the next evolution in cloud-native development, enabling developers to build and run applications without managing underlying infrastructure while cloud providers handle scaling, maintenance, and resource allocation automatically. This approach allows developers to focus solely on writing code while benefiting from automatic scaling, reduced operational overhead, and cost optimization through event-driven, pay-per-execution pricing models. Organizations implementing serverless architectures can achieve significant cost savings and operational efficiency improvements, such as media streaming platforms using serverless functions for video transcoding that dynamically scale processing resources based on demand while optimizing costs and ensuring smooth playback experiences.

| Serverless Application | Implementation Approach | Business Benefits | Use Case Examples |
|---|---|---|---|
| Event-Driven Processing | Functions triggered by events like file uploads, API calls, or data changes | Automatic scaling, cost optimization, reduced infrastructure management | Image processing, data transformation, real-time analytics |
| API Backend Services | Serverless functions handling API requests and business logic | Pay-per-request pricing, on-demand scaling within provider limits, faster development cycles | E-commerce backends, authentication services, payment processing |
| Data Processing Pipelines | Serverless workflows for batch and stream processing | Cost-effective processing, automatic resource allocation, fault tolerance | ETL operations, log processing, machine learning pipelines |
| IoT Data Ingestion | Serverless endpoints collecting and processing device data | Elastic scaling, real-time processing, reduced operational complexity | Smart city sensors, industrial monitoring, healthcare devices |
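To make the event-driven row of the table more concrete, here is a minimal sketch of a function triggered by a file-upload event. The event layout (`records`, `bucket`, `key`, `size_bytes`), the `thumbnails/` prefix, and the `handle_upload` name are assumptions made for this illustration; they do not mirror any specific provider's payload format.

```python
import json
from typing import Any

# Hypothetical event shape (an assumption for this sketch):
# {"records": [{"bucket": str, "key": str, "size_bytes": int}]}
IMAGE_SUFFIXES = (".png", ".jpg", ".jpeg")


def handle_upload(event: dict[str, Any]) -> dict[str, Any]:
    """Stateless handler: each invocation works only from the event it receives."""
    processed, skipped = [], []
    for record in event.get("records", []):
        key = record["key"]
        if key.lower().endswith(IMAGE_SUFFIXES):
            # A real function would resize or transcode here;
            # this sketch only plans the output location.
            processed.append(f"thumbnails/{key}")
        else:
            skipped.append(key)
    body = {"processed": processed, "skipped": skipped}
    return {"statusCode": 200, "body": json.dumps(body)}
```

Because the handler keeps no state between invocations, the platform is free to run as many copies in parallel as incoming events require, which is what makes pay-per-execution pricing workable.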

Edge Computing Integration and Real-Time Processing

Edge computing integration with cloud-native architectures brings computation and data storage closer to data sources, enabling real-time processing and reducing latency for applications requiring immediate response times. This trend complements cloud-native development by distributing processing capabilities across edge locations while maintaining centralized orchestration and management through cloud platforms. Organizations implementing edge computing solutions, such as autonomous vehicle manufacturers deploying AI models at the edge to process sensor data in real-time, can achieve reduced latency and enhanced system responsiveness without relying solely on centralized cloud servers.

Edge Computing Benefits

Edge computing enables real-time decision-making for industries such as IoT, autonomous vehicles, and smart cities by processing data closer to users and reducing the load on central cloud servers while maintaining cloud-native scalability and management.
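One way to picture the split between edge and cloud is an edge node that acts on raw readings locally and forwards only a compact summary. The sketch below assumes a simple threshold rule; the `EdgeSummary` type, the `process_at_edge` function, and the threshold parameter are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class EdgeSummary:
    count: int
    mean_value: float
    alerts: list[float]  # readings that crossed the threshold


def process_at_edge(readings: list[float], alert_threshold: float) -> EdgeSummary:
    """Handle raw readings locally and return only a compact summary for the cloud."""
    if not readings:
        return EdgeSummary(count=0, mean_value=0.0, alerts=[])
    # Local, low-latency decision: flag anomalies without a round trip to the cloud.
    alerts = [r for r in readings if r > alert_threshold]
    return EdgeSummary(count=len(readings), mean_value=mean(readings), alerts=alerts)
```

Only the summary needs to travel upstream, which is how edge processing reduces both latency and the load on central servers.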

AI and Machine Learning Integration

The integration of artificial intelligence and machine learning capabilities into cloud-native applications is becoming increasingly prevalent, enabling intelligent automation, predictive analytics, and enhanced decision-making that drives innovation across various industries. Cloud-native platforms provide the scalability and flexibility required for AI/ML workloads, allowing organizations to deploy machine learning models as containerized microservices that can scale independently based on demand. Retail analytics companies exemplify this trend by integrating AI into cloud-native applications to personalize customer recommendations in real-time, analyzing shopping behavior and trends using machine learning algorithms deployed on Kubernetes clusters to enhance customer satisfaction and boost sales conversions.
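Scaling a containerized model service "based on demand" usually comes down to a ratio between an observed metric and its target. The sketch below follows the ratio rule described in the Kubernetes Horizontal Pod Autoscaler documentation, in simplified form: it ignores stabilization windows, pod readiness, and multiple metrics. The function name and the default bounds are assumptions for this example.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified ratio-based scaling: ceil(current_replicas * current / target)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (illustrative defaults, not real cluster settings).
    return max(min_replicas, min(max_replicas, wanted))
```

For instance, four replicas observing twice the target load would be scaled to eight, while a 5% deviation falls inside the tolerance band and leaves the replica count unchanged.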

Kubernetes Evolution and Container Orchestration

Kubernetes continues to evolve as the de facto standard for container orchestration, extending its reach beyond traditional application deployment to cover machine learning workloads, serverless deployments, and edge computing scenarios. Modern Kubernetes implementations provide sophisticated orchestration capabilities including automated scaling, self-healing, service discovery, and rolling updates that enable organizations to manage complex distributed applications with minimal operational overhead. Kubernetes-native platforms and tools are becoming integral to cloud-native development, offering enterprise-grade features for security, networking, and observability that support production workloads at scale.

- Advanced Scheduling: Intelligent workload placement based on resource requirements, constraints, and optimization policies

- Multi-Cluster Management: Coordinated deployment and management across multiple Kubernetes clusters for resilience and scalability

- GitOps Integration: Declarative configuration management through Git repositories for improved security and auditability

- Service Mesh Integration: Integration with service mesh technologies like Istio and Linkerd, which run on top of Kubernetes, for advanced networking and security

- Observability Enhancement: Built-in monitoring, logging, and tracing capabilities for comprehensive application visibility
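The scheduling item in the list above can be sketched as a two-phase decision: filter out nodes that cannot run the workload, then score the remainder. This is a toy model of that idea, not the actual Kubernetes scheduler; the `Node` fields, the `pick_node` function, and the "most headroom" scoring rule are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_memory_mib: int
    labels: dict[str, str]


def pick_node(nodes: list[Node], cpu_millis: int, memory_mib: int,
              required_labels: dict[str, str]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node is feasible."""
    # Phase 1 (filter): keep nodes with enough resources and all required labels.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= cpu_millis
        and n.free_memory_mib >= memory_mib
        and all(n.labels.get(k) == v for k, v in required_labels.items())
    ]
    if not feasible:
        return None
    # Phase 2 (score): prefer the node left with the most CPU, then memory, headroom.
    best = max(feasible, key=lambda n: (n.free_cpu_millis - cpu_millis,
                                        n.free_memory_mib - memory_mib))
    return best.name
```

Swapping the scoring key for one that prefers the tightest fit would turn the same skeleton into a bin-packing policy, which illustrates how placement policy can vary independently of feasibility checks.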

Multi-Cloud and Hybrid Cloud Strategies

Organizations are increasingly adopting multi-cloud and hybrid cloud strategies to avoid vendor lock-in, ensure redundancy, and optimize costs while leveraging cloud-native tools and practices that support seamless interoperability between different cloud environments. These strategies provide flexibility and resilience by distributing workloads across multiple cloud providers and on-premises infrastructure, enabling organizations to choose the best platform for specific workloads while maintaining consistent development and operational practices. Global financial institutions exemplify this approach by adopting hybrid cloud strategies to maintain regulatory compliance while leveraging public cloud services for scalability, using container orchestration tools like Kubernetes across multiple cloud providers to ensure data sovereignty and resilience.

Multi-Cloud Complexity

While multi-cloud strategies provide flexibility and avoid vendor lock-in, they require sophisticated tooling and practices to manage complexity, ensure security consistency, and maintain operational efficiency across different cloud environments.

Service Mesh and Advanced Networking

Service mesh technologies like Istio and Linkerd are becoming integral components of cloud-native architectures, providing advanced networking capabilities including traffic management, security, and observability for microservices communication. These technologies enable developers to build reliable and resilient applications by abstracting complex networking concerns and providing features such as load balancing, circuit breaking, mutual TLS encryption, and distributed tracing. Service mesh implementation allows organizations to manage microservices communication at scale while maintaining security, reliability, and observability across distributed applications without requiring changes to application code.
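Circuit breaking is one of the mesh features that is easiest to reason about in code. In a service mesh this logic lives in the proxy layer rather than in the application, so the sketch below only illustrates the state machine itself. The class name, thresholds, and injectable `clock` parameter are assumptions for this example.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when calls are rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after_s:
                # Open: fail fast instead of piling load onto a struggling service.
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: fall through and allow one trial call (half-open).
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            raise
        # Any success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Passing the clock in as a parameter keeps the breaker deterministic under test; production code would normally rely on the default monotonic clock.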

Enhanced Security and Zero Trust Architecture

The future of cloud-native development emphasizes enhanced security through Zero Trust architecture principles, AI-powered security automation, and comprehensive compliance frameworks that address the unique challenges of distributed, containerized applications. Zero Trust security models assume no implicit trust and require verification for every user, device, and network component, which is particularly important for cloud-native applications that span multiple environments and infrastructure layers. Advanced security practices include container image scanning, runtime threat detection, policy-as-code implementation, and automated compliance monitoring that ensure security is embedded throughout the development and deployment lifecycle.

| Security Component | Implementation Method | Cloud-Native Benefits | Key Technologies |
|---|---|---|---|
| Container Security | Image scanning, runtime protection, vulnerability management | Automated threat detection, policy enforcement, compliance validation | Prisma Cloud (formerly Twistlock), Aqua Security, Falco, OPA |
| Network Security | Service mesh encryption, network policies, microsegmentation | Zero-trust networking, traffic encryption, granular access control | Istio, Linkerd, Calico, Cilium |
| Identity Management | RBAC, service accounts, workload identity, secrets management | Fine-grained access control, automated credential rotation, audit trails | Kubernetes RBAC, Vault, SPIFFE/SPIRE |
| Compliance Automation | Policy-as-code, automated scanning, continuous compliance monitoring | Reduced compliance burden, consistent policy enforcement, audit readiness | Open Policy Agent, Gatekeeper, Falco |
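Policy-as-code means expressing rules like these as executable checks that run automatically before a workload is admitted. Tools such as Open Policy Agent use their own policy language; the Python sketch below only illustrates the idea. The registry prefix and the specific rules are assumptions, while `privileged` and `runAsNonRoot` are standard fields of a Kubernetes container `securityContext`.

```python
from typing import Any

TRUSTED_REGISTRIES = ("registry.example.com/",)  # hypothetical registry prefix


def evaluate_policies(container: dict[str, Any]) -> list[str]:
    """Return a list of violations; an empty list means the spec would be admitted."""
    violations = []
    image = container.get("image", "")
    if not image.startswith(TRUSTED_REGISTRIES):
        violations.append("image must come from a trusted registry")
    # The last path segment must carry an explicit tag or digest, and not ":latest".
    if image.endswith(":latest") or ":" not in image.rsplit("/", 1)[-1]:
        violations.append("image must be pinned to an explicit tag")
    security = container.get("securityContext", {})
    if security.get("privileged", False):
        violations.append("privileged containers are not allowed")
    if not security.get("runAsNonRoot", False):
        violations.append("container must set runAsNonRoot")
    return violations
```

Because the rules are ordinary code, they can be version-controlled, reviewed, and tested like any other change, which is what makes consistent enforcement and audit readiness achievable.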

Observability and Application Performance Monitoring

Advanced observability capabilities are becoming essential for cloud-native applications, providing comprehensive monitoring, logging, and tracing across distributed microservices architectures to ensure performance, reliability, and rapid issue resolution. Modern observability platforms integrate metrics, logs, and traces to provide holistic visibility into application behavior, infrastructure performance, and user experience across complex, distributed systems. Organizations implementing comprehensive observability solutions can quickly identify performance bottlenecks, troubleshoot issues across microservices boundaries, and optimize application performance through data-driven insights and automated alerting mechanisms.
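Troubleshooting across microservice boundaries depends on a shared trace identifier that ties together the work each service did for one request. As a small sketch of that idea, the function below groups spans by trace and reports which service took longest in each. The `Span` fields and the function name are assumptions for illustration, not the data model of any particular tracing tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    trace_id: str      # shared by every span belonging to the same request
    service: str
    duration_ms: float


def slowest_service_per_trace(spans: list[Span]) -> dict[str, str]:
    """Group spans by trace and return the service with the longest span in each."""
    by_trace: dict[str, list[Span]] = defaultdict(list)
    for span in spans:
        by_trace[span.trace_id].append(span)
    return {
        trace_id: max(group, key=lambda s: s.duration_ms).service
        for trace_id, group in by_trace.items()
    }
```

The same grouping key is what lets a platform join logs and metrics to a trace, so a bottleneck found here can be followed into the relevant service's logs.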

Developer Experience and Platform Engineering

The future of cloud-native development emphasizes improved developer experience through platform engineering initiatives that provide self-service infrastructure, automated workflows, and developer-friendly tools that abstract complexity while maintaining flexibility and control. Platform engineering teams create internal developer platforms that standardize cloud-native practices, provide golden paths for common use cases, and enable developers to focus on business logic rather than infrastructure concerns. These platforms typically include features such as automated CI/CD pipelines, infrastructure-as-code templates, monitoring and alerting integration, and self-service deployment capabilities that accelerate development velocity while maintaining security and operational standards.

Developer Productivity

Internal developer platforms are reported to improve developer productivity by as much as 40% by providing standardized tools, automated workflows, and self-service capabilities that reduce friction in development and deployment.

Sustainability and Green Cloud Computing

Environmental sustainability is becoming a critical consideration in cloud-native development, driving the adoption of green computing practices, energy-efficient architectures, and carbon-aware deployment strategies that minimize environmental impact while maintaining performance and scalability. Cloud-native applications can contribute to sustainability goals through intelligent resource optimization, dynamic scaling that reduces idle capacity, and deployment strategies that consider the carbon footprint of different cloud regions and infrastructure types. Organizations are implementing sustainability metrics alongside traditional performance indicators, using tools that provide visibility into energy consumption and carbon emissions associated with cloud-native applications.
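A carbon-aware deployment strategy can be as simple as choosing, among the regions that satisfy a latency budget, the one with the lowest grid carbon intensity. The sketch below assumes intensity figures are supplied by some external data source; the `Region` type and `pick_region` function are hypothetical names for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Region:
    name: str
    carbon_g_per_kwh: float  # grid carbon intensity, supplied by an external data source
    latency_ms: float        # measured latency to the target users


def pick_region(regions: list[Region], max_latency_ms: float) -> Optional[Region]:
    """Pick the lowest-carbon region that still meets the latency budget."""
    eligible = [r for r in regions if r.latency_ms <= max_latency_ms]
    if not eligible:
        return None
    # Lowest carbon intensity wins; latency breaks ties.
    return min(eligible, key=lambda r: (r.carbon_g_per_kwh, r.latency_ms))
```

Treating latency as a hard constraint and carbon as the objective keeps performance commitments intact while still steering flexible workloads toward cleaner regions.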

Event-Driven Architecture and Reactive Systems

Event-driven architecture is becoming increasingly important for cloud-native applications, enabling reactive systems that respond to events in real-time while maintaining loose coupling between services and supporting asynchronous processing patterns. Modern event-driven systems leverage technologies such as Apache Kafka, Amazon EventBridge, and cloud-native messaging platforms to implement publish-subscribe patterns, event sourcing, and CQRS architectures that enhance scalability and resilience. These architectures enable organizations to build responsive applications that can handle high-throughput event streams, implement complex business workflows, and maintain consistency across distributed systems through event-driven state management.

- Event Streaming: Real-time processing of high-volume event streams for immediate response and data pipeline processing

- Choreography-Based Coordination: Service coordination through events rather than a centralized orchestrator for improved resilience

- Event Sourcing: Storing application state as a sequence of events for auditability and temporal query capabilities

- CQRS Implementation: Separating read and write operations to optimize performance and scalability for different access patterns

- Reactive Microservices: Building services that react to events asynchronously while maintaining system responsiveness
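Event sourcing and the read/write split from the list above can be sketched together in a few lines. Here, commands validate and append events, and a query function derives current state by replaying them. The account domain and all names are assumptions for illustration; production CQRS systems typically maintain separate, materialized read models rather than replaying on every query.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str      # "deposited" or "withdrawn" (illustrative event types)
    account: str
    amount: int


class EventStore:
    """Append-only log: state is never updated in place, only derived from events."""

    def __init__(self) -> None:
        self._log: list[Event] = []

    def append(self, event: Event) -> None:
        self._log.append(event)

    def replay(self) -> tuple[Event, ...]:
        return tuple(self._log)


def balance(store: EventStore, account: str) -> int:
    """Query side: rebuild the current balance by folding over the event history."""
    total = 0
    for event in store.replay():
        if event.account == account:
            total += event.amount if event.kind == "deposited" else -event.amount
    return total


def deposit(store: EventStore, account: str, amount: int) -> None:
    """Command side: validate, then record what happened as an event."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    store.append(Event("deposited", account, amount))


def withdraw(store: EventStore, account: str, amount: int) -> None:
    """Command side: reject invalid requests before anything is recorded."""
    if amount <= 0 or amount > balance(store, account):
        raise ValueError("invalid amount or insufficient funds")
    store.append(Event("withdrawn", account, amount))
```

Since the log is the source of truth, the full history remains available for audit, and new read views can be built later by replaying the same events.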

GitOps and Infrastructure as Code

GitOps practices are becoming standard for cloud-native development, providing declarative configuration management through Git repositories that improve security, auditability, and operational consistency across development and production environments. Infrastructure as Code (IaC) tools enable organizations to define and manage cloud-native infrastructure through version-controlled code, ensuring reproducible deployments, environment consistency, and simplified disaster recovery processes. The combination of GitOps and IaC creates powerful workflows where infrastructure changes are reviewed, tested, and deployed through the same processes used for application code, enabling better collaboration between development and operations teams.
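At the heart of a GitOps workflow is a reconciliation step that compares the desired state declared in Git with what is actually running, then derives the actions needed to close the gap. The sketch below models both states as plain dictionaries; the `plan_reconcile` function and the resource shapes are assumptions for illustration, not the behavior of any specific GitOps tool.

```python
def plan_reconcile(desired: dict[str, dict], actual: dict[str, dict]) -> list[tuple[str, str]]:
    """Compare desired (from Git) with actual (from the cluster) and list actions."""
    actions: list[tuple[str, str]] = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            # Drift, or a new commit: bring the resource back in line with Git.
            actions.append(("update", name))
    # Anything running that Git no longer declares is pruned.
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))
    return actions
```

Running the same comparison on a schedule means manual changes to the environment are detected and reverted, which is where much of the auditability and consistency benefit comes from.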

Future Challenges and Considerations

While cloud-native development offers significant benefits, organizations must address challenges including complexity management, skill requirements, security considerations, and cost optimization to successfully implement and maintain cloud-native applications at scale. The distributed nature of cloud-native applications introduces complexity in debugging, monitoring, and troubleshooting that requires sophisticated tooling and practices to manage effectively. Organizations must invest in training and development programs to build cloud-native expertise while implementing governance frameworks that balance developer productivity with security, compliance, and cost management requirements.

Implementation Considerations

Successful cloud-native adoption requires careful planning around team skills, organizational culture, technology choices, and gradual migration strategies that balance innovation with risk management and operational stability.

Industry-Specific Applications and Use Cases

Cloud-native development is transforming specific industries through tailored applications that address unique requirements, regulatory constraints, and operational challenges while leveraging the flexibility and scalability of cloud-native architectures. Financial services organizations implement cloud-native applications for real-time trading systems, fraud detection, and regulatory reporting that require high availability, low latency, and strict security controls. Healthcare systems leverage cloud-native architectures for electronic health records, medical imaging processing, and telemedicine platforms that must comply with privacy regulations while scaling to serve large patient populations.

Implementation Best Practices and Migration Strategies

Successful cloud-native implementation requires strategic planning that includes assessment of existing applications, identification of suitable migration patterns, and gradual adoption of cloud-native practices through proof-of-concept projects and incremental modernization efforts. Organizations should start with new applications or suitable existing services for cloud-native development while building internal capabilities, establishing best practices, and creating feedback loops for continuous improvement. Key implementation factors include choosing appropriate technology stacks, establishing governance frameworks, implementing security controls, and creating monitoring and observability strategies that support production workloads.

Conclusion

The future of cloud-native development represents a fundamental transformation in software engineering that goes far beyond simply moving applications to the cloud, encompassing new architectural patterns, development practices, and operational models that enable unprecedented scalability, resilience, and innovation. As organizations increasingly recognize that cloud-native development is not just a trend but an essential capability for remaining competitive in the digital economy, the adoption of microservices, containerization, serverless computing, and advanced orchestration technologies will become standard practices across industries. The convergence of emerging technologies including AI/ML integration, edge computing, advanced security frameworks, and sustainability initiatives will create new opportunities for organizations to build applications that are more intelligent, responsive, and environmentally conscious while maintaining the core benefits of cloud-native architecture. Organizations that embrace this evolution by investing in cloud-native technologies, building internal expertise, and implementing comprehensive strategies for migration and modernization will be best positioned to deliver exceptional user experiences, achieve operational excellence, and drive innovation in an increasingly cloud-first world where agility, scalability, and resilience are not just advantages but requirements for business success.
