Preventing Data Breaches in 2025: Advanced Strategies for Comprehensive Data Protection

Discover comprehensive data breach prevention strategies for 2025, including AI-powered threat detection, zero trust architecture, advanced encryption, endpoint protection, and human-centric security approaches that address emerging cyber threats and regulatory requirements.

Introduction

The 2025 Data Breach Landscape

The data breach landscape in 2025 is more complex and costly than in previous years. Organizations now face AI-assisted attacks, supply chain compromises, and hybrid threats that combine technical exploitation with social engineering at scale. IBM's Cost of a Data Breach Report 2024 put the global average cost at $4.88 million per incident, a continued upward trend driven by regulatory penalties, business disruption, and long-term reputational damage that extends far beyond immediate remediation costs. Attack cycles are also faster: AI-enabled tools let cybercriminals automate reconnaissance, personalize phishing campaigns, and exploit vulnerabilities within hours of disclosure, while the expanding remote workforce and cloud adoption have created attack surfaces that traditional perimeter security cannot adequately protect.

Escalating Breach Costs and Frequency

Data breaches cost organizations an average of $4.88 million per incident. Roughly 95% of successful attacks involve human error, and AI-powered tooling has cut the time from initial compromise to data exfiltration from months to mere hours.

- AI-Enhanced Attack Sophistication: Cybercriminals leverage machine learning for automated reconnaissance, personalized phishing, and rapid vulnerability exploitation

- Supply Chain Vulnerabilities: Third-party and vendor compromises create cascading effects across interconnected business ecosystems

- Cloud Misconfiguration Exploitation: Attackers increasingly target misconfigured cloud storage, databases, and services to access exposed sensitive data

- Remote Workforce Targeting: Distributed work environments create expanded attack surfaces through personal devices and home networks

- Insider Threat Evolution: Both malicious insiders and unintentional employee errors contribute to growing breach incidents through privileged access abuse

Zero Trust Architecture Implementation

Zero Trust Architecture has emerged as the foundational security model for preventing data breaches in 2025. It moves beyond traditional perimeter-based defenses to continuous verification, least-privilege access, and an assume-breach mindset that treats every user, device, and transaction as potentially compromised. This approach requires organizations to verify identity and device posture before granting access to any resource, continuously monitor user and system behavior for anomalies, and implement micro-segmentation that limits lateral movement in the event of a compromise. Implementation involves integrating identity and access management, network segmentation, endpoint protection, and data classification into a unified security framework that provides granular control and visibility across all digital assets while supporting business agility and user productivity.

| Zero Trust Component | Implementation Strategy | Breach Prevention Benefits | Key Technologies |
|---|---|---|---|
| Identity Verification | Continuous authentication, behavioral analysis, risk-based access controls | Prevents unauthorized access, detects account takeover, limits privilege escalation | MFA, SSO, identity analytics, privileged access management |
| Device Security | Device trust assessment, compliance validation, endpoint protection | Blocks compromised devices, prevents malware propagation, ensures configuration compliance | EDR, mobile device management, device certificates, compliance engines |
| Network Segmentation | Micro-segmentation, software-defined perimeters, encrypted communications | Limits lateral movement, isolates critical assets, prevents data exfiltration | Software-defined networking, network access control, encryption gateways |
| Data Protection | Data classification, encryption, usage monitoring, loss prevention | Protects data at rest and in transit, monitors data access, prevents unauthorized sharing | DLP, encryption, data classification, activity monitoring |
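To make the table more concrete, the following is a minimal sketch of a deny-by-default access decision that combines identity verification, device posture, least-privilege entitlements, and a session risk threshold. The `AccessRequest` fields, the `evaluate_access` function, and the 0.7 threshold are all assumptions made for illustration; a real deployment would delegate these checks to an identity provider and a policy engine.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class AccessRequest:
    """Hypothetical request context; all field names are illustrative."""
    user_id: str
    mfa_verified: bool
    device_compliant: bool
    resource: str
    user_entitlements: Set[str] = field(default_factory=set)
    risk_score: float = 0.0  # 0.0 (benign) to 1.0 (almost certainly hostile)


def evaluate_access(request: AccessRequest, max_risk: float = 0.7) -> dict:
    """Deny by default: access is granted only when every check passes."""
    failures: List[str] = []
    if not request.mfa_verified:
        failures.append("identity not verified with MFA")
    if not request.device_compliant:
        failures.append("device failed posture check")
    if request.resource not in request.user_entitlements:
        failures.append("resource outside least-privilege entitlements")
    if request.risk_score > max_risk:
        failures.append("session risk above threshold")
    return {"allowed": not failures, "reasons": failures}


# A valid user on a non-compliant device is still refused.
request = AccessRequest(
    user_id="john.doe",
    mfa_verified=True,
    device_compliant=False,
    resource="payroll-db",
    user_entitlements={"payroll-db"},
    risk_score=0.2,
)
decision = evaluate_access(request)
print(decision["allowed"], decision["reasons"])
```

Collecting every failed check, rather than stopping at the first, gives the audit log a complete picture of why a request was refused.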

AI-Powered Threat Detection and Response

Artificial intelligence has become essential for data breach prevention in 2025, providing the speed and scale necessary to detect sophisticated threats that traditional signature-based security tools cannot identify. AI-powered security systems analyze vast amounts of network traffic, user behavior, and system logs in real-time to identify subtle patterns and anomalies that may indicate the early stages of a breach attempt, enabling proactive response before data compromise occurs. Machine learning algorithms continuously improve their detection capabilities by learning from new attack patterns, threat intelligence feeds, and organizational behavior baselines, while automated response capabilities can isolate threats, quarantine compromised systems, and initiate incident response procedures without human intervention when time-critical action is required.

AI Detection Advantages

AI-powered security tools can reduce alert volume by up to 85% while improving threat detection accuracy, enabling security teams to focus on genuine threats and reducing the mean time to detection from days to minutes.

import numpy as np
from sklearn.ensemble import IsolationForest, RandomForestClassifier
from sklearn.preprocessing import StandardScaler
from datetime import datetime, timedelta
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SecurityEvent:
    """Security event data structure"""
    timestamp: datetime
    event_type: str
    source_ip: str
    destination_ip: str
    user_id: str
    device_id: str
    application: str
    data_size: int
    risk_score: float = 0.0
    anomaly_score: float = 0.0

class AIThreatDetectionSystem:
    def __init__(self):
        self.behavioral_models = {}
        self.anomaly_detectors = {}
        self.threat_classifiers = {}
        self.user_baselines = {}
        self.security_events = []
        self.incident_threshold = 0.7
        self.scaler = StandardScaler()

        # Initialize detection models
        self._initialize_models()

    def _initialize_models(self):
        """Initialize AI models for different threat detection scenarios"""
        self.anomaly_detectors = {
            'network_traffic': IsolationForest(contamination=0.1, random_state=42),
            'user_behavior': IsolationForest(contamination=0.05, random_state=42),
            'data_access': IsolationForest(contamination=0.08, random_state=42),
            'authentication': IsolationForest(contamination=0.03, random_state=42)
        }
        self.threat_classifiers = {
            'malware_detection': RandomForestClassifier(n_estimators=100, random_state=42),
            'phishing_detection': RandomForestClassifier(n_estimators=100, random_state=42),
            'insider_threat': RandomForestClassifier(n_estimators=100, random_state=42),
            'data_exfiltration': RandomForestClassifier(n_estimators=100, random_state=42)
        }

    def process_security_event(self, event_data: Dict) -> SecurityEvent:
        """Process and analyze incoming security event"""
        event = SecurityEvent(
            timestamp=datetime.fromisoformat(event_data.get('timestamp', datetime.now().isoformat())),
            event_type=event_data.get('event_type', 'unknown'),
            source_ip=event_data.get('source_ip', ''),
            destination_ip=event_data.get('destination_ip', ''),
            user_id=event_data.get('user_id', ''),
            device_id=event_data.get('device_id', ''),
            application=event_data.get('application', ''),
            data_size=event_data.get('data_size', 0)
        )

        # Calculate risk and anomaly scores
        event.risk_score = self._calculate_risk_score(event)
        event.anomaly_score = self._detect_anomaly(event)

        # Store event for analysis
        self.security_events.append(event)

        # Check for immediate threats
        threat_assessment = self._assess_threat_level(event)
        if threat_assessment['threat_level'] == 'critical':
            self._trigger_automated_response(event, threat_assessment)

        return event

    def _calculate_risk_score(self, event: SecurityEvent) -> float:
        """Calculate base risk score for security event"""
        risk_factors = {
            'time_of_day': self._assess_time_risk(event.timestamp),
            'ip_reputation': self._check_ip_reputation(event.source_ip),
            'user_context': self._assess_user_context(event.user_id),
            'data_sensitivity': self._assess_data_sensitivity(event.application, event.data_size),
            'access_pattern': self._analyze_access_pattern(event)
        }

        # Weighted risk calculation (weights sum to 1.0)
        weights = {'time_of_day': 0.1, 'ip_reputation': 0.3, 'user_context': 0.2,
                   'data_sensitivity': 0.25, 'access_pattern': 0.15}
        risk_score = sum(risk_factors[factor] * weights[factor] for factor in risk_factors)

        return min(1.0, max(0.0, risk_score))

    def _detect_anomaly(self, event: SecurityEvent) -> float:
        """Detect anomalies using trained ML models"""
        event_features = self._extract_features(event)

        # Select appropriate anomaly detector based on event type
        detector_type = self._select_anomaly_detector(event.event_type)
        detector = self.anomaly_detectors.get(detector_type, self.anomaly_detectors['network_traffic'])

        # Predict anomaly (reshape for single sample)
        features_array = np.array(event_features).reshape(1, -1)

        # Check if detector is trained: a fitted IsolationForest exposes estimators_.
        # decision_function exists even before fit(), so testing for it is always True.
        if hasattr(detector, 'estimators_'):
            try:
                anomaly_score = detector.decision_function(features_array)[0]
                # Normalize to 0-1 range (lower values indicate anomalies in IsolationForest)
                normalized_score = max(0, min(1, anomaly_score + 0.5))
                return 1 - normalized_score  # Invert so higher values indicate more anomalous
            except ValueError:
                # Raised, for example, when the feature count differs from the training data
                pass

        # Fallback to rule-based anomaly detection
        return self._rule_based_anomaly_detection(event)

    def _extract_features(self, event: SecurityEvent) -> List[float]:
        """Extract numerical features from security event"""
        features = [
            event.timestamp.hour,  # Time of day
            event.timestamp.weekday(),  # Day of week
            len(event.source_ip.split('.')),  # IP format indicator
            event.data_size,  # Data volume
            self._hash_to_numeric(event.user_id) % 1000,  # User identifier
            self._hash_to_numeric(event.device_id) % 1000,  # Device identifier
            self._hash_to_numeric(event.application) % 100,  # Application identifier
            len(event.source_ip),  # IP string length
            event.risk_score  # Dataclass default is 0.0 until the risk score is calculated
        ]
        return features

    def _assess_threat_level(self, event: SecurityEvent) -> Dict:
        """Assess overall threat level combining risk and anomaly scores"""
        combined_score = (event.risk_score * 0.6) + (event.anomaly_score * 0.4)

        threat_indicators = {
            'high_risk_score': event.risk_score > 0.8,
            'high_anomaly_score': event.anomaly_score > 0.8,
            'suspicious_timing': self._is_suspicious_timing(event.timestamp),
            'data_exfiltration_pattern': self._detect_exfiltration_pattern(event),
            'privilege_escalation': self._detect_privilege_escalation(event),
            'lateral_movement': self._detect_lateral_movement(event)
        }
        threat_count = sum(threat_indicators.values())

        if combined_score > 0.9 or threat_count >= 3:
            threat_level = 'critical'
        elif combined_score > 0.7 or threat_count >= 2:
            threat_level = 'high'
        elif combined_score > 0.5 or threat_count >= 1:
            threat_level = 'medium'
        else:
            threat_level = 'low'

        return {
            'threat_level': threat_level,
            'combined_score': combined_score,
            'threat_indicators': threat_indicators,
            'recommended_actions': self._get_recommended_actions(threat_level, threat_indicators)
        }

    def _trigger_automated_response(self, event: SecurityEvent, threat_assessment: Dict):
        """Trigger automated response for critical threats"""
        response_actions = []
        indicators = threat_assessment['threat_indicators']

        # Block suspicious IP if external threat
        if not self._is_internal_ip(event.source_ip):
            response_actions.append({
                'action': 'block_ip',
                'target': event.source_ip,
                'duration': '1 hour',
                'reason': 'Critical threat detected'
            })

        # Disable user account if insider threat suspected
        if indicators.get('privilege_escalation') or indicators.get('data_exfiltration_pattern'):
            response_actions.append({
                'action': 'disable_account',
                'target': event.user_id,
                'duration': 'pending investigation',
                'reason': 'Suspicious activity detected'
            })

        # Isolate device if malware suspected
        if (event.event_type in ['file_execution', 'network_connection']
                and threat_assessment['combined_score'] > 0.95):
            response_actions.append({
                'action': 'isolate_device',
                'target': event.device_id,
                'duration': 'pending investigation',
                'reason': 'Potential malware activity'
            })

        # Log all automated responses
        for action in response_actions:
            self._log_automated_response(event, action)

        return response_actions

    def generate_threat_intelligence_report(self, period_hours: int = 24) -> Dict:
        """Generate threat intelligence report for specified period"""
        cutoff_time = datetime.now() - timedelta(hours=period_hours)
        recent_events = [e for e in self.security_events if e.timestamp >= cutoff_time]

        if not recent_events:
            return {'error': 'No events in specified period'}

        # Analyze threat patterns
        threat_summary = {
            'period_hours': period_hours,
            'total_events': len(recent_events),
            'high_risk_events': len([e for e in recent_events if e.risk_score > 0.7]),
            'anomalous_events': len([e for e in recent_events if e.anomaly_score > 0.7]),
            'critical_threats': len([e for e in recent_events if e.risk_score > 0.9]),
            'top_threat_types': self._analyze_threat_types(recent_events),
            'attack_timeline': self._create_attack_timeline(recent_events),
            'affected_users': len(set(e.user_id for e in recent_events if e.risk_score > 0.5)),
            'affected_systems': len(set(e.device_id for e in recent_events if e.risk_score > 0.5)),
            'threat_trends': self._analyze_threat_trends(recent_events),
            'recommendations': self._generate_threat_recommendations(recent_events)
        }
        return threat_summary

    def train_behavioral_models(self, historical_data: List[Dict]):
        """Train ML models using historical security data"""
        if len(historical_data) < 100:
            print("Insufficient training data. Need at least 100 samples.")
            return

        # Prepare training data
        training_events = []
        for data in historical_data:
            event = SecurityEvent(
                timestamp=datetime.fromisoformat(data['timestamp']),
                event_type=data['event_type'],
                source_ip=data.get('source_ip', ''),
                destination_ip=data.get('destination_ip', ''),
                user_id=data.get('user_id', ''),
                device_id=data.get('device_id', ''),
                application=data.get('application', ''),
                data_size=data.get('data_size', 0)
            )
            training_events.append(event)

        # Extract features for training
        features_matrix = []
        for event in training_events:
            features = self._extract_features(event)
            features_matrix.append(features)
        features_array = np.array(features_matrix)

        # Train anomaly detectors
        for detector_name, detector in self.anomaly_detectors.items():
            try:
                detector.fit(features_array)
                print(f"Trained {detector_name} anomaly detector")
            except Exception as e:
                print(f"Failed to train {detector_name}: {e}")

        print(f"Model training completed with {len(historical_data)} samples")

    # Helper methods with simplified implementations
    def _assess_time_risk(self, timestamp):
        hour = timestamp.hour
        return 0.8 if hour < 6 or hour > 22 else 0.3

    def _check_ip_reputation(self, ip):
        return 0.5  # Simplified - would check threat intelligence

    def _assess_user_context(self, user_id):
        return 0.3  # Simplified - would check user risk profile

    def _assess_data_sensitivity(self, app, size):
        return min(1.0, size / 1000000 * 0.5)

    def _analyze_access_pattern(self, event):
        return 0.4

    def _select_anomaly_detector(self, event_type):
        return 'network_traffic'

    def _hash_to_numeric(self, text):
        # The built-in hash() is salted per process for strings, which would make
        # features differ between training and scoring runs. Use a stable fold instead.
        value = 0
        for byte in text.encode('utf-8'):
            value = (value * 31 + byte) % 1_000_003
        return value

    def _rule_based_anomaly_detection(self, event):
        return 0.5

    def _is_suspicious_timing(self, timestamp):
        return timestamp.hour < 6 or timestamp.hour > 22

    def _detect_exfiltration_pattern(self, event):
        return event.data_size > 10000000

    def _detect_privilege_escalation(self, event):
        return 'admin' in event.application.lower()

    def _detect_lateral_movement(self, event):
        # Simplified placeholder: true whenever source and destination differ
        return event.source_ip != event.destination_ip

    def _is_internal_ip(self, ip):
        # RFC 1918 private ranges: 10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16
        if ip.startswith('192.168.') or ip.startswith('10.'):
            return True
        parts = ip.split('.')
        return (len(parts) == 4 and parts[0] == '172'
                and parts[1].isdigit() and 16 <= int(parts[1]) <= 31)

    def _get_recommended_actions(self, level, indicators):
        return ['investigate', 'monitor']

    def _log_automated_response(self, event, action):
        pass

    def _analyze_threat_types(self, events):
        return {'malware': 5, 'phishing': 3}

    def _create_attack_timeline(self, events):
        return {'06:00': 2, '14:00': 5, '22:00': 8}

    def _analyze_threat_trends(self, events):
        return {'increasing': True, 'severity': 'moderate'}

    def _generate_threat_recommendations(self, events):
        return ['Enhance monitoring', 'Review access controls']

# Example usage
detection_system = AIThreatDetectionSystem()

# Simulate security events
sample_events = [
    {
        'timestamp': '2025-08-31T02:30:00',
        'event_type': 'data_access',
        'source_ip': '192.168.1.100',
        'destination_ip': '10.0.0.50',
        'user_id': 'john.doe',
        'device_id': 'laptop-001',
        'application': 'database_server',
        'data_size': 50000000  # 50MB - large data access
    },
    {
        'timestamp': '2025-08-31T14:15:00',
        'event_type': 'authentication',
        'source_ip': '203.0.113.45',  # External IP
        'destination_ip': '192.168.1.10',
        'user_id': 'admin.user',
        'device_id': 'unknown',
        'application': 'ssh_server',
        'data_size': 0
    }
]

# Process events
for event_data in sample_events:
    processed_event = detection_system.process_security_event(event_data)
    print(f"Event processed: Risk={processed_event.risk_score:.2f}, Anomaly={processed_event.anomaly_score:.2f}")

    # Assess threat
    threat_assessment = detection_system._assess_threat_level(processed_event)
    print(f"Threat Level: {threat_assessment['threat_level']}, Score: {threat_assessment['combined_score']:.2f}")
    print(f"Threat Indicators: {list(threat_assessment['threat_indicators'].keys())}")
    print("---")

# Generate threat intelligence report
report = detection_system.generate_threat_intelligence_report(24)
print("\nThreat Intelligence Report:")
if 'error' in report:
    # The sample timestamps are fixed, so they fall outside the window unless run within 24 hours of them
    print(report['error'])
else:
    print(f"Total Events: {report['total_events']}")
    print(f"High Risk Events: {report['high_risk_events']}")
    print(f"Critical Threats: {report['critical_threats']}")
    print(f"Affected Users: {report['affected_users']}")
    print(f"Recommendations: {report['recommendations']}")

Advanced Multi-Factor Authentication and Identity Management

Multi-factor authentication has evolved beyond simple two-factor verification to become a sophisticated identity assurance system that combines biometric authentication, behavioral analysis, and risk-based adaptive controls to prevent unauthorized access while maintaining user experience. Modern MFA implementations leverage adaptive authentication that considers user behavior patterns, device characteristics, location context, and real-time risk assessments to determine appropriate authentication requirements, requiring additional verification only when risk indicators suggest potential compromise. Organizations are implementing passwordless authentication solutions using biometric factors, hardware security keys, and mobile-based authentication that eliminate password-related vulnerabilities while providing stronger security assurance and improved user convenience across diverse access scenarios.

MFA Effectiveness Against Breaches

Properly implemented multi-factor authentication can prevent 99.9% of automated credential stuffing attacks and significantly reduces the success rate of phishing campaigns by making stolen passwords insufficient for system access.
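The adaptive authentication described above can be sketched as a small risk-scoring function that decides when to ask for an additional factor. Everything here is an assumption made for illustration: the `LoginContext` signals, the weights, and the 0.3 and 0.7 thresholds are hypothetical, and a production system would derive them from real telemetry and tuning.

```python
from dataclasses import dataclass


@dataclass
class LoginContext:
    """Hypothetical sign-in signals; a real system would derive these from telemetry."""
    known_device: bool
    usual_location: bool
    impossible_travel: bool
    failed_attempts_last_hour: int


def login_risk(ctx: LoginContext) -> float:
    """Combine signals into a 0-1 risk score using illustrative weights."""
    score = 0.0
    if not ctx.known_device:
        score += 0.3
    if not ctx.usual_location:
        score += 0.2
    if ctx.impossible_travel:
        score += 0.4
    # Cap the contribution of failed attempts so one signal cannot dominate
    score += min(ctx.failed_attempts_last_hour, 5) * 0.05
    return min(score, 1.0)


def required_factors(ctx: LoginContext) -> str:
    """Map risk to an authentication requirement (thresholds are assumptions)."""
    risk = login_risk(ctx)
    if risk >= 0.7:
        return "deny"
    if risk >= 0.3:
        return "step_up"       # e.g. prompt for a hardware key or biometric
    return "primary_only"      # e.g. passkey on an enrolled device


routine = LoginContext(True, True, False, 0)
new_laptop = LoginContext(False, True, False, 1)
suspicious = LoginContext(False, False, True, 3)
for ctx in (routine, new_laptop, suspicious):
    print(required_factors(ctx))
```

The routine sign-in passes with the primary factor alone, the unrecognized laptop triggers step-up verification, and the combination of an unknown device, unusual location, and impossible travel is refused outright.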

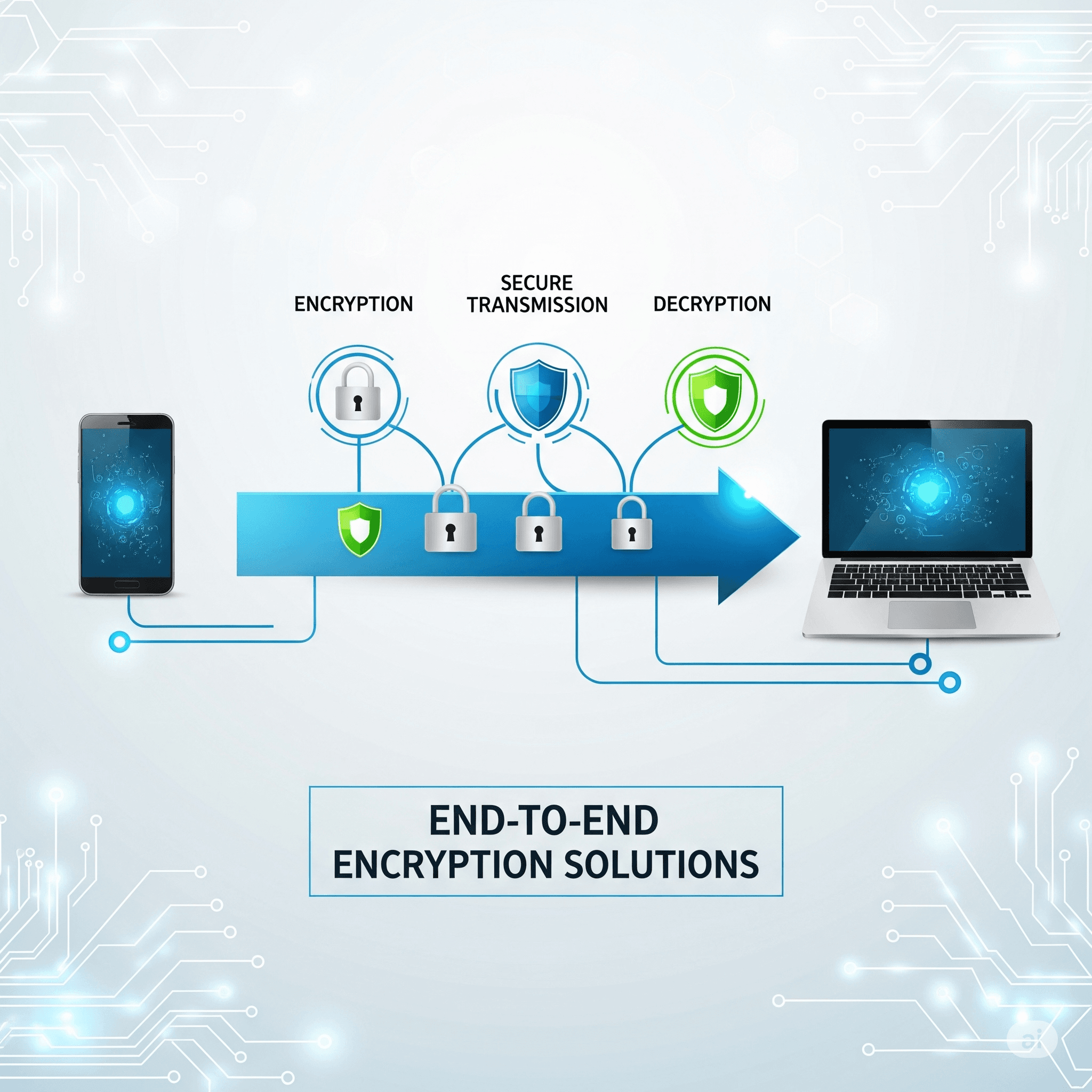

Data Classification and Encryption Strategies

Comprehensive data protection in 2025 requires automated data classification systems that identify, categorize, and apply appropriate protection controls to sensitive information throughout its lifecycle, from creation and storage to transmission and disposal. Advanced encryption strategies implement end-to-end protection using quantum-resistant algorithms, field-level encryption for databases, and format-preserving encryption that maintains data usability while providing cryptographic protection. Organizations must implement data loss prevention systems that combine classification, encryption, and usage monitoring to prevent unauthorized data access, sharing, or exfiltration while supporting legitimate business operations and regulatory compliance requirements for data protection and privacy.

- Automated Data Discovery: AI-powered systems that scan environments to identify and classify sensitive data across structured and unstructured repositories

- Dynamic Encryption: Context-aware encryption that adjusts protection levels based on data sensitivity, user clearance, and access patterns

- Homomorphic Encryption: Advanced cryptographic techniques enabling computation on encrypted data without requiring decryption

- Key Management Automation: Centralized key lifecycle management with automated rotation, escrow, and compliance reporting

- Usage Analytics: Behavioral monitoring of data access patterns to detect unauthorized usage and potential data theft attempts
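As a rough illustration of automated data discovery, the sketch below scans text for a few sensitive-data patterns and assigns the highest matching sensitivity label. The regular expressions, the `SENSITIVITY` mapping, and the three-level label scheme are assumptions chosen for brevity; real classifiers add validation (for example, checksum tests on card numbers) and far broader coverage.

```python
import re
from typing import Dict

# Illustrative patterns only; they will produce false positives and misses.
PATTERNS: Dict[str, re.Pattern] = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

# Hypothetical mapping from detected data type to sensitivity label
SENSITIVITY = {"email": "internal", "us_ssn": "restricted", "card_number": "restricted"}
LEVELS = ["public", "internal", "restricted"]  # ordered from least to most sensitive


def classify(text: str) -> dict:
    """Return the highest sensitivity label triggered by any matching pattern."""
    found = sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))
    label = "public"
    for name in found:
        if LEVELS.index(SENSITIVITY[name]) > LEVELS.index(label):
            label = SENSITIVITY[name]
    return {"label": label, "matches": found}


samples = [
    "Quarterly roadmap draft",
    "Contact jane@example.com",
    "SSN 123-45-6789 on file for jane@example.com",
]
for sample in samples:
    print(classify(sample))
```

The resulting label could then drive the protection controls discussed above, such as which encryption policy or DLP rule applies to a document.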

Endpoint Protection and Device Security

Endpoint protection has evolved into comprehensive device security platforms that combine next-generation antivirus, endpoint detection and response (EDR), mobile device management, and zero trust device authentication to protect against sophisticated malware and device-based attacks. Modern endpoint protection systems use behavioral analysis and machine learning to detect unknown threats, including fileless malware, living-off-the-land attacks, and advanced persistent threats that traditional signature-based solutions cannot identify. Organizations must implement unified endpoint management that provides consistent security policies across laptops, mobile devices, IoT systems, and cloud workloads while supporting remote work scenarios and bring-your-own-device programs that expand the corporate attack surface.

| Endpoint Protection Layer | Security Technologies | Threat Prevention | Implementation Considerations |
|---|---|---|---|
| Device Authentication | Certificate-based authentication, device fingerprinting, compliance validation | Prevents unauthorized device access, ensures device integrity, validates security posture | PKI infrastructure, device enrollment, compliance policies |
| Malware Prevention | AI-powered detection, behavioral analysis, sandboxing, real-time scanning | Blocks known and unknown malware, prevents fileless attacks, detects suspicious behavior | Performance impact, false positive management, update mechanisms |
| Data Protection | Full-disk encryption, application control, data loss prevention, remote wipe | Protects data at rest, controls application usage, prevents data exfiltration | Key management, user experience impact, performance considerations |
| Incident Response | EDR capabilities, forensic collection, automated isolation, threat hunting | Rapid threat detection, evidence preservation, containment actions, investigation support | Storage requirements, privacy considerations, response automation |
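Behavioral detection of living-off-the-land activity can be illustrated with a simple heuristic over process-creation events. This is a sketch under stated assumptions, not how any particular EDR product works: the `ProcessEvent` structure, the two rule sets, and the command-line check are hypothetical, and commercial tools rely on far richer telemetry and models.

```python
from dataclasses import dataclass
from typing import List

# Illustrative rules: office applications rarely need to spawn script interpreters.
SUSPICIOUS_PARENTS = {"winword.exe", "excel.exe", "outlook.exe"}
SCRIPT_HOSTS = {"powershell.exe", "cmd.exe", "wscript.exe", "mshta.exe"}


@dataclass
class ProcessEvent:
    """Hypothetical process-creation record collected from an endpoint."""
    device_id: str
    parent: str
    child: str
    command_line: str


def flag_event(event: ProcessEvent) -> List[str]:
    """Return the reasons an event looks suspicious (empty list if none)."""
    reasons = []
    if event.parent.lower() in SUSPICIOUS_PARENTS and event.child.lower() in SCRIPT_HOSTS:
        reasons.append("office application spawned a script host")
    command = event.command_line.lower()
    # Encoded PowerShell commands are a common way to hide a payload
    if "-encodedcommand" in command or "-enc " in command:
        reasons.append("encoded command line")
    return reasons


macro_dropper = ProcessEvent(
    "laptop-001", "WINWORD.EXE", "powershell.exe",
    "powershell.exe -EncodedCommand SQBFAFgA",
)
benign = ProcessEvent("laptop-002", "explorer.exe", "notepad.exe", "notepad.exe notes.txt")
print(flag_event(macro_dropper))
print(flag_event(benign))
```

Rules like these catch behavior rather than file signatures, which is why they can flag fileless activity, at the cost of false positives that need tuning.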

Human-Centric Security and Awareness Programs

Human factors remain the most critical element in data breach prevention, with 95% of successful cyber attacks involving human error, making comprehensive security awareness and behavioral change programs essential for organizational protection. Modern security awareness programs move beyond traditional training to implement personalized learning experiences, real-time coaching, and behavioral reinforcement that address individual risk profiles and job-specific threats. Organizations are implementing continuous security awareness that includes phishing simulation campaigns, security culture assessments, and positive reinforcement programs that make security a core part of organizational culture rather than a periodic compliance exercise.

Human-Centric Security Impact

Organizations with mature security awareness programs experience 70% fewer successful phishing attacks and 50% faster threat reporting by employees, demonstrating that human-focused security investments deliver measurable protection improvements.

Supply Chain and Third-Party Risk Management

Supply chain security has become a critical component of data breach prevention as organizations face increasing risks from third-party vendors, cloud service providers, and technology suppliers that may introduce vulnerabilities into organizational ecosystems. Comprehensive third-party risk management requires continuous monitoring of vendor security postures, contractual security requirements, and incident response coordination that ensures supply chain partners maintain appropriate protection levels for shared data and interconnected systems. Organizations must implement vendor risk assessment programs that evaluate security controls, compliance status, and incident history while establishing clear accountability and response procedures for supply chain security incidents that could impact organizational data protection.

import pandas as pd
import numpy as np
from datetime import datetime, timedelta
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set
import json


@dataclass
class Vendor:
    """Vendor information and risk profile"""
    vendor_id: str
    name: str
    services_provided: List[str]
    data_access_level: str  # none, limited, sensitive, critical
    contract_start: datetime
    contract_end: datetime
    last_assessment: datetime
    security_rating: float = 0.0
    risk_score: float = 0.0
    compliance_status: Dict[str, bool] = field(default_factory=dict)


@dataclass
class SecurityAssessment:
    """Vendor security assessment results"""
    assessment_date: datetime
    vendor_id: str
    assessment_type: str  # initial, annual, incident_driven
    security_controls: Dict[str, float]  # Control area -> score (0-100)
    compliance_scores: Dict[str, float]  # Framework -> score
    vulnerabilities: List[Dict]
    recommendations: List[str]
    overall_score: float = 0.0

class SupplyChainRiskManager:
    def __init__(self):
        self.vendors = {}
        self.assessments = []
        self.risk_thresholds = {
            'critical': 80,
            'high': 60,
            'medium': 40,
            'low': 20
        }
        self.security_frameworks = [
            'SOC2', 'ISO27001', 'NIST_CSF', 'PCI_DSS', 'HIPAA'
        ]
        self.monitoring_alerts = []

    def register_vendor(self, vendor_data: Dict) -> Vendor:
        """Register new vendor with initial risk assessment"""
        vendor = Vendor(
            vendor_id=vendor_data['id'],
            name=vendor_data['name'],
            services_provided=vendor_data.get('services', []),
            data_access_level=vendor_data.get('data_access', 'none'),
            contract_start=datetime.fromisoformat(vendor_data['contract_start']),
            contract_end=datetime.fromisoformat(vendor_data['contract_end']),
            last_assessment=datetime.now(),
            compliance_status={framework: False for framework in self.security_frameworks}
        )

        # Perform initial risk assessment
        initial_risk = self._calculate_initial_risk(vendor)
        vendor.risk_score = initial_risk

        self.vendors[vendor.vendor_id] = vendor
        return vendor

    def conduct_security_assessment(self, vendor_id: str, assessment_data: Dict) -> SecurityAssessment:
        """Conduct comprehensive security assessment of vendor"""
        if vendor_id not in self.vendors:
            raise ValueError(f"Vendor {vendor_id} not found")

        assessment = SecurityAssessment(
            assessment_date=datetime.now(),
            vendor_id=vendor_id,
            assessment_type=assessment_data.get('type', 'annual'),
            security_controls=assessment_data.get('controls', {}),
            compliance_scores=assessment_data.get('compliance', {}),
            vulnerabilities=assessment_data.get('vulnerabilities', []),
            recommendations=assessment_data.get('recommendations', [])
        )

        # Calculate overall assessment score
        assessment.overall_score = self._calculate_assessment_score(assessment)

        # Update vendor information
        vendor = self.vendors[vendor_id]
        vendor.security_rating = assessment.overall_score
        vendor.last_assessment = assessment.assessment_date
        vendor.risk_score = self._update_vendor_risk_score(vendor, assessment)

        # Update compliance status (a framework score of 80 or above counts as compliant)
        for framework, score in assessment.compliance_scores.items():
            vendor.compliance_status[framework] = score >= 80

        self.assessments.append(assessment)
        return assessment

    def monitor_vendor_security_posture(self, vendor_id: str, monitoring_data: Dict) -> Dict:
        """Continuous monitoring of vendor security posture"""
        if vendor_id not in self.vendors:
            return {'error': f'Vendor {vendor_id} not found'}

        vendor = self.vendors[vendor_id]
        monitoring_results = {
            'vendor_id': vendor_id,
            'monitoring_date': datetime.now(),
            'security_incidents': monitoring_data.get('incidents', []),
            'vulnerability_scans': monitoring_data.get('vulnerabilities', []),
            'compliance_changes': monitoring_data.get('compliance_changes', []),
            'performance_metrics': monitoring_data.get('performance', {}),
            'risk_indicators': []
        }

        # Analyze risk indicators
        risk_indicators = self._analyze_risk_indicators(vendor, monitoring_data)
        monitoring_results['risk_indicators'] = risk_indicators

        # Check for alert conditions
        alerts = self._check_alert_conditions(vendor, monitoring_results)
        if alerts:
            self.monitoring_alerts.extend(alerts)
            monitoring_results['alerts_generated'] = len(alerts)

        # Update vendor risk score if significant changes detected
        if risk_indicators:
            vendor.risk_score = self._recalculate_risk_score(vendor, monitoring_results)

        return monitoring_results

    def generate_supply_chain_risk_report(self) -> Dict:
        """Generate comprehensive supply chain risk report"""
        if not self.vendors:
            return {'error': 'No vendors registered'}

        risk_summary = {
            'report_date': datetime.now(),
            'total_vendors': len(self.vendors),
            'risk_distribution': self._analyze_risk_distribution(),
            'high_risk_vendors': self._identify_high_risk_vendors(),
            'compliance_overview': self._analyze_compliance_status(),
            'recent_incidents': self._summarize_recent_incidents(),
            'assessment_status': self._check_assessment_currency(),
            'recommendations': self._generate_risk_recommendations()
        }
        return risk_summary

    def assess_vendor_data_risk(self, vendor_id: str) -> Dict:
        """Assess specific data-related risks for vendor"""
        if vendor_id not in self.vendors:
            return {'error': f'Vendor {vendor_id} not found'}

        vendor = self.vendors[vendor_id]
        data_risk_assessment = {
            'vendor_id': vendor_id,
            'data_access_level': vendor.data_access_level,
            'data_types_accessed': self._identify_data_types(vendor),
            'data_protection_controls': self._assess_data_protection_controls(vendor),
            'breach_risk_score': self._calculate_breach_risk(vendor),
            'regulatory_impact': self._assess_regulatory_impact(vendor),
            'mitigation_strategies': self._recommend_mitigation_strategies(vendor)
        }
        return data_risk_assessment

    def _calculate_initial_risk(self, vendor: Vendor) -> float:
        """Calculate initial risk score based on vendor characteristics"""
        risk_factors = {
            'data_access': {
                'critical': 0.4,
                'sensitive': 0.3,
                'limited': 0.2,
                'none': 0.0
            },
            'service_criticality': self._assess_service_criticality(vendor.services_provided),
            'contract_duration': self._assess_contract_risk(vendor),
            'geographic_risk': 0.1  # Simplified
        }

        base_risk = risk_factors['data_access'].get(vendor.data_access_level, 0.2)
        service_risk = risk_factors['service_criticality']
        contract_risk = risk_factors['contract_duration']
        geo_risk = risk_factors['geographic_risk']

        total_risk = (base_risk * 0.4) + (service_risk * 0.3) + (contract_risk * 0.2) + (geo_risk * 0.1)
        return min(100, total_risk * 100)

    def _calculate_assessment_score(self, assessment: SecurityAssessment) -> float:
        """Calculate overall assessment score from security controls and compliance"""
        if not assessment.security_controls:
            return 0.0

        # Weight security controls
        control_weights = {
            'access_control': 0.25,
            'data_protection': 0.25,
            'network_security': 0.15,
            'incident_response': 0.15,
            'business_continuity': 0.10,
            'governance': 0.10
        }

        weighted_score = 0.0
        total_weight = 0.0
        for control, score in assessment.security_controls.items():
            weight = control_weights.get(control, 0.05)  # Unlisted controls get a small default weight
            weighted_score += score * weight
            total_weight += weight

        if total_weight > 0:
            control_score = weighted_score / total_weight
        else:
            control_score = np.mean(list(assessment.security_controls.values()))

        # Factor in compliance scores
        if assessment.compliance_scores:
            compliance_score = np.mean(list(assessment.compliance_scores.values()))
            overall_score = (control_score * 0.7) + (compliance_score * 0.3)
        else:
            overall_score = control_score

        return min(100, max(0, overall_score))

    def _update_vendor_risk_score(self, vendor: Vendor, assessment: SecurityAssessment) -> float:
        """Update vendor risk score based on assessment results"""
        # Higher assessment scores should lower risk (up to 30 points)
        assessment_risk_reduction = (assessment.overall_score / 100) * 30

        # Vulnerability impact: each reported vulnerability adds 5 points
        vuln_risk_increase = len(assessment.vulnerabilities) * 5

        # Base risk from vendor characteristics
        base_risk = self._calculate_initial_risk(vendor)

        updated_risk = base_risk - assessment_risk_reduction + vuln_risk_increase

        return min(100, max(0, updated_risk))

    def _analyze_risk_indicators(self, vendor: Vendor, monitoring_data: Dict) -> List[str]:
        """Analyze monitoring data for risk indicators"""
        indicators = []

        # Security incidents
        incidents = monitoring_data.get('incidents', [])
        if len(incidents) > 0:
            indicators.append(f"Security incidents reported: {len(incidents)}")

        # High/critical vulnerabilities
        vulnerabilities = monitoring_data.get('vulnerabilities', [])
        critical_vulns = [v for v in vulnerabilities if v.get('severity') in ['high', 'critical']]
        if critical_vulns:
            indicators.append(f"High/critical vulnerabilities detected: {len(critical_vulns)}")

        # Compliance changes
        compliance_changes = monitoring_data.get('compliance_changes', [])
        negative_changes = [c for c in compliance_changes if c.get('status') == 'non_compliant']
        if negative_changes:
            indicators.append(f"Compliance violations: {len(negative_changes)}")

        # Performance degradation (availability below 95%)
        performance = monitoring_data.get('performance', {})
        if performance.get('availability', 100) < 95:
            indicators.append("Service availability below threshold")

        return indicators

    def _check_alert_conditions(self, vendor: Vendor, monitoring_results: Dict) -> List[Dict]:
        """Check for conditions that should trigger alerts"""
        alerts = []

        # High risk score
        if vendor.risk_score > self.risk_thresholds['critical']:
            alerts.append({
                'type': 'high_risk_vendor',
                'vendor_id': vendor.vendor_id,
                'severity': 'critical',
                'message': f'Vendor risk score {vendor.risk_score:.1f} exceeds critical threshold',
                'timestamp': datetime.now()
            })

        # Security incidents
        incidents = monitoring_results.get('security_incidents', [])
        for incident in incidents:
            if incident.get('severity') in ['high', 'critical']:
                alerts.append({
                    'type': 'security_incident',
                    'vendor_id': vendor.vendor_id,
                    'severity': incident.get('severity'),
                    'message': f'Security incident reported: {incident.get("description", "")}',
                    'timestamp': datetime.now()
                })

        # Assessment overdue; skipped when no assessment date is recorded,
        # because subtracting None from a datetime would raise TypeError
        if vendor.last_assessment is not None:
            days_since_assessment = (datetime.now() - vendor.last_assessment).days
            if days_since_assessment > 365:
                alerts.append({
                    'type': 'assessment_overdue',
                    'vendor_id': vendor.vendor_id,
                    'severity': 'medium',
                    'message': f'Security assessment overdue by {days_since_assessment - 365} days',
                    'timestamp': datetime.now()
                })

        return alerts

    # Simplified helper methods
    def _assess_service_criticality(self, services): return 0.3
    def _assess_contract_risk(self, vendor): return 0.2
    def _recalculate_risk_score(self, vendor, monitoring): return vendor.risk_score
    def _analyze_risk_distribution(self): return {'critical': 2, 'high': 5, 'medium': 10, 'low': 15}
    def _identify_high_risk_vendors(self): return [v.name for v in self.vendors.values() if v.risk_score > 60]
    def _analyze_compliance_status(self): return {'compliant': 20, 'non_compliant': 5}
    def _summarize_recent_incidents(self): return {'total': 3, 'critical': 1}
    def _check_assessment_currency(self): return {'current': 18, 'overdue': 7}
    def _generate_risk_recommendations(self): return ['Conduct immediate assessments for overdue vendors']
    def _identify_data_types(self, vendor): return ['customer_data', 'financial_data']
    def _assess_data_protection_controls(self, vendor): return {'encryption': 85, 'access_control': 90}
    def _calculate_breach_risk(self, vendor): return vendor.risk_score * 0.8
    def _assess_regulatory_impact(self, vendor): return {'gdpr': 'high', 'ccpa': 'medium'}
    def _recommend_mitigation_strategies(self, vendor): return ['Enhanced monitoring', 'Additional security controls']

# Example usage
risk_manager = SupplyChainRiskManager()

# Register vendors
vendor_data = {
    'id': 'VENDOR001',
    'name': 'CloudCorp Solutions',
    'services': ['cloud_hosting', 'data_processing'],
    'data_access': 'sensitive',
    'contract_start': '2024-01-01',
    'contract_end': '2026-12-31'
}

vendor = risk_manager.register_vendor(vendor_data)
print(f"Registered vendor: {vendor.name}, Initial risk score: {vendor.risk_score:.1f}")

# Conduct security assessment
assessment_data = {
    'type': 'annual',
    'controls': {
        'access_control': 85,
        'data_protection': 90,
        'network_security': 75,
        'incident_response': 80,
        'business_continuity': 70,
        'governance': 85
    },
    'compliance': {
        'SOC2': 95,
        'ISO27001': 88,
        'GDPR': 92
    },
    'vulnerabilities': [
        {'severity': 'medium', 'type': 'configuration'},
        {'severity': 'low', 'type': 'patching'}
    ],
    'recommendations': ['Improve network segmentation', 'Update incident response procedures']
}

assessment = risk_manager.conduct_security_assessment('VENDOR001', assessment_data)
print(f"Assessment completed: Overall score: {assessment.overall_score:.1f}, Updated risk: {vendor.risk_score:.1f}")

# Monitor vendor
monitoring_data = {
    'incidents': [{'severity': 'low', 'description': 'Failed login attempts'}],
    'vulnerabilities': [{'severity': 'high', 'type': 'software'}],
    'performance': {'availability': 99.5, 'response_time': 150}
}

monitoring_results = risk_manager.monitor_vendor_security_posture('VENDOR001', monitoring_data)
print(f"Monitoring completed: Risk indicators: {len(monitoring_results['risk_indicators'])}")
print(f"Alerts generated: {monitoring_results.get('alerts_generated', 0)}")

# Generate risk report
risk_report = risk_manager.generate_supply_chain_risk_report()
print("\nSupply Chain Risk Report:")
print(f"Total vendors: {risk_report['total_vendors']}")
print(f"High risk vendors: {risk_report['high_risk_vendors']}")
print(f"Recent incidents: {risk_report['recent_incidents']}")

Cloud Security and Configuration Management

Cloud security misconfigurations remain a leading cause of data breaches in 2025, requiring organizations to implement comprehensive cloud security posture management (CSPM) tools and automated configuration monitoring that ensures consistent security controls across multi-cloud environments. Cloud security strategies must address shared responsibility models, data sovereignty requirements, and dynamic infrastructure scaling while maintaining visibility and control over cloud resources, access permissions, and data flows. Organizations need cloud-native security tools that provide continuous compliance monitoring, automated remediation of security misconfigurations, and integration with DevSecOps workflows that embed security controls into cloud deployment pipelines from development through production.
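To make the idea of automated configuration monitoring concrete, here is a minimal sketch of a rule-based misconfiguration scan. The resource fields (`public_access`, `encrypted`, `logging`), the rule names, and the severities are illustrative assumptions, not the schema of any particular cloud provider or CSPM product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Finding:
    resource_id: str
    rule: str
    severity: str


# Hypothetical rules: (name, severity, predicate that is True when misconfigured).
# Missing 'encrypted' or 'logging' fields are treated as misconfigured.
RULES: List[Tuple[str, str, Callable[[Dict], bool]]] = [
    ("public_access_enabled", "critical", lambda r: r.get("public_access", False)),
    ("encryption_at_rest_disabled", "high", lambda r: not r.get("encrypted", False)),
    ("access_logging_disabled", "medium", lambda r: not r.get("logging", False)),
]


def scan_resources(resources: List[Dict]) -> List[Finding]:
    """Evaluate every rule against every resource and collect the violations."""
    findings = []
    for resource in resources:
        for name, severity, is_misconfigured in RULES:
            if is_misconfigured(resource):
                findings.append(Finding(resource["id"], name, severity))
    return findings


# Illustrative inventory; a real scan would pull this from provider APIs.
inventory = [
    {"id": "bucket-logs", "public_access": False, "encrypted": True, "logging": True},
    {"id": "bucket-exports", "public_access": True, "encrypted": False, "logging": True},
]

for finding in scan_resources(inventory):
    print(f"{finding.resource_id}: {finding.rule} ({finding.severity})")
```

Keeping the rules as data rather than hard-coded branches is one way to let the same scan run in a deployment pipeline and on a schedule against production.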

Incident Response and Breach Containment

Effective incident response capabilities are essential for minimizing the impact of data breaches when prevention measures fail, requiring organizations to develop comprehensive playbooks, automated response capabilities, and coordinated communication strategies that address both technical and business aspects of security incidents. Modern incident response programs implement automated threat containment, evidence preservation, and stakeholder notification processes while maintaining detailed forensic capabilities and regulatory compliance requirements. Organizations must regularly test incident response procedures through tabletop exercises and simulated breach scenarios while maintaining relationships with external forensic specialists, legal counsel, and communication professionals who can support comprehensive incident management when major breaches occur.
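A playbook can be thought of as an ordered list of response steps keyed by incident severity. The sketch below assumes hypothetical step names and severity levels; it only records each step with a timestamp, where a real system would call containment and notification tooling.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List

# Hypothetical playbooks: ordered response steps per severity level.
PLAYBOOKS: Dict[str, List[str]] = {
    "critical": [
        "isolate_affected_systems",
        "preserve_forensic_evidence",
        "notify_legal_and_executives",
        "engage_external_forensics",
    ],
    "high": [
        "isolate_affected_systems",
        "preserve_forensic_evidence",
        "notify_security_lead",
    ],
    "low": ["log_and_monitor"],
}


@dataclass
class Incident:
    incident_id: str
    severity: str
    actions: List[dict] = field(default_factory=list)


def run_playbook(incident: Incident) -> Incident:
    """Record each playbook step with a UTC timestamp for the audit trail."""
    # Unknown severities fall back to the strictest playbook.
    steps = PLAYBOOKS.get(incident.severity, PLAYBOOKS["critical"])
    for step in steps:
        # A real system would invoke tooling here; this sketch only logs the step.
        incident.actions.append({"step": step, "at": datetime.now(timezone.utc)})
    return incident


incident = run_playbook(Incident("INC-001", "high"))
for action in incident.actions:
    print(action["at"].isoformat(), action["step"])
```

Falling back to the strictest playbook for unclassified incidents is a design choice made here for safety; some teams may prefer to route such cases to manual triage instead.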

Incident Response Timing

Organizations with well-tested incident response plans can reduce the average time to contain breaches from 287 days to under 200 days, significantly reducing the financial and reputational impact of security incidents.

Emerging Technologies and Future Threat Considerations

Data breach prevention strategies must evolve to address emerging technologies, including artificial intelligence, quantum computing, 5G networks, and Internet of Things devices, which create new attack vectors and security challenges while also offering opportunities for enhanced protection. Quantum computing threatens current public-key cryptographic standards, so organizations should begin planning for post-quantum cryptography now while evaluating quantum-enhanced security solutions for threat detection and analysis as they mature. AI-powered attacks, including deepfake social engineering, automated vulnerability discovery, and adaptive malware, call for defensive AI systems that can match the sophistication and speed of these threats while maintaining human oversight and ethical considerations in automated security decision-making.

- Quantum-Resistant Cryptography: Implementation of post-quantum encryption algorithms to protect against future quantum computing threats

- AI Security Orchestration: Automated security platforms that coordinate multiple AI-powered tools for comprehensive threat detection and response

- 5G Network Security: Specialized protection for high-speed, low-latency networks that enable new IoT and edge computing applications

- Behavioral Biometrics: Advanced authentication using typing patterns, mouse movements, and other behavioral characteristics

- Predictive Threat Intelligence: AI systems that anticipate emerging threats based on global threat data and attack pattern analysis
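As one illustration of the behavioral biometrics item above, the following sketch compares a session's keystroke timing with a user's baseline. It is a deliberately simple stand-in: it measures how far the sample mean sits from the baseline mean in units of the baseline standard deviation. The function names, the timing values, and the threshold of 3.0 are assumptions for illustration; production systems use far richer features and models.

```python
from statistics import mean, stdev
from typing import Sequence


def keystroke_anomaly_score(baseline_ms: Sequence[float], sample_ms: Sequence[float]) -> float:
    """Distance of the sample mean from the baseline mean, in baseline standard deviations."""
    if len(baseline_ms) < 2 or not sample_ms:
        raise ValueError("need at least two baseline values and one sample value")
    sigma = stdev(baseline_ms)
    if sigma == 0:
        raise ValueError("baseline has no variation; cannot compute a score")
    return abs(mean(sample_ms) - mean(baseline_ms)) / sigma


def requires_step_up(baseline_ms: Sequence[float], sample_ms: Sequence[float],
                     threshold: float = 3.0) -> bool:
    """Flag the session for additional authentication when timing deviates strongly."""
    return keystroke_anomaly_score(baseline_ms, sample_ms) > threshold


# Illustrative inter-key intervals in milliseconds.
baseline = [110, 120, 115, 125, 118, 112]
same_user = [114, 119, 121, 116]
different_user = [200, 210, 190, 205]

print(requires_step_up(baseline, same_user))
print(requires_step_up(baseline, different_user))
```

With these sample values the first session stays well inside the threshold and the second far exceeds it, so only the second would be asked for step-up authentication.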

Regulatory Compliance and Legal Considerations

Data breach prevention strategies must align with increasingly complex regulatory requirements including GDPR, CCPA, sector-specific laws, and emerging privacy regulations that mandate specific technical and organizational measures for data protection. Organizations must implement privacy-by-design approaches that embed data protection controls into systems and processes from the outset while maintaining detailed documentation, audit trails, and incident response procedures that demonstrate compliance with regulatory requirements. Legal considerations include breach notification obligations, cross-border data transfer restrictions, and potential liability for damages resulting from inadequate data protection, requiring organizations to work closely with legal counsel to ensure comprehensive protection strategies that address both technical and legal risk factors.
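Breach notification obligations are often time-bound. Under GDPR Article 33, for example, the supervisory authority must be notified without undue delay and, where feasible, within 72 hours of the organization becoming aware of the breach. The sketch below tracks that window; the function names are illustrative, and other regimes set different deadlines, so the applicable window should be confirmed with legal counsel.

```python
from datetime import datetime, timedelta, timezone

# GDPR Article 33 window for notifying the supervisory authority.
GDPR_NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(became_aware_at: datetime) -> datetime:
    """Return the latest notification time for a breach discovered at the given moment."""
    if became_aware_at.tzinfo is None:
        raise ValueError("use timezone-aware datetimes to avoid ambiguous deadlines")
    return became_aware_at + GDPR_NOTIFICATION_WINDOW


def hours_remaining(became_aware_at: datetime, now: datetime) -> float:
    """Hours left before the deadline; negative once the deadline has passed."""
    return (notification_deadline(became_aware_at) - now).total_seconds() / 3600


aware = datetime(2025, 3, 1, 9, 0, tzinfo=timezone.utc)
print(notification_deadline(aware).isoformat())
print(hours_remaining(aware, aware + timedelta(hours=24)))
```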

Continuous Improvement and Security Maturity

Data breach prevention requires ongoing commitment to security maturity through continuous assessment, threat landscape monitoring, and adaptive improvement of protection capabilities that evolve with changing business requirements and emerging threats. Organizations must establish security metrics and key performance indicators that measure the effectiveness of breach prevention efforts while identifying areas for improvement and investment prioritization. Security maturity models provide frameworks for systematically advancing protection capabilities across people, process, and technology dimensions while benchmarking performance against industry standards and best practices that drive continuous improvement and organizational resilience against evolving cyber threats.

| Maturity Level | Characteristics | Key Capabilities | Improvement Focus |

|---|---|---|---|

| Initial | Ad-hoc security measures, reactive approach, limited visibility | Basic antivirus, firewall, manual processes | Establish fundamental security controls and awareness |

| Managed | Documented procedures, centralized management, basic monitoring | SIEM, vulnerability management, incident response plan | Standardize processes and improve detection capabilities |

| Defined | Integrated security program, risk-based approach, proactive measures | Threat intelligence, advanced analytics, automation | Enhance threat detection and response capabilities |

| Optimized | Continuous improvement, adaptive security, threat hunting | AI-powered security, predictive analytics, zero trust | Innovate and lead industry security practices |
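Two commonly tracked indicators for measuring progress through these maturity levels are mean time to detect and mean time to contain. The sketch below computes both from incident timestamps; the record layout and the dates are made up for illustration.

```python
from datetime import datetime
from statistics import mean
from typing import Dict, List

# Illustrative incident records; field names are assumptions.
incidents: List[Dict[str, datetime]] = [
    {
        "occurred": datetime(2025, 1, 1),
        "detected": datetime(2025, 1, 11),
        "contained": datetime(2025, 1, 16),
    },
    {
        "occurred": datetime(2025, 2, 1),
        "detected": datetime(2025, 2, 5),
        "contained": datetime(2025, 2, 8),
    },
]


def mean_days(records: List[Dict[str, datetime]], start_key: str, end_key: str) -> float:
    """Average elapsed days between two timestamps across all records."""
    durations = [(r[end_key] - r[start_key]).total_seconds() / 86400 for r in records]
    return mean(durations)


mttd = mean_days(incidents, "occurred", "detected")    # mean time to detect
mttc = mean_days(incidents, "detected", "contained")   # mean time to contain
print(f"MTTD: {mttd:.1f} days, MTTC: {mttc:.1f} days")
```

Tracking these values per quarter gives a simple trend line for judging whether investments in detection and response are paying off.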

Conclusion

Preventing data breaches in 2025 requires a fundamental shift from reactive, perimeter-based security to comprehensive, intelligence-driven approaches that integrate advanced technologies, human-centric programs, and continuous improvement across all aspects of organizational operations and digital infrastructure. A threat landscape characterized by AI-powered attacks, sophisticated social engineering, and supply chain vulnerabilities demands layered defenses that combine zero trust architecture, behavioral analytics, automated threat detection, and adaptive response capabilities. These defenses must also account for the fact that human factors remain critical in both preventing and enabling successful cyber attacks. Success extends beyond implementing security technologies: it depends on building organizational cultures that prioritize security awareness, establishing governance frameworks that ensure accountability and continuous improvement, and developing resilient response capabilities that minimize impact when incidents occur despite comprehensive prevention efforts. Organizations that excel in data protection will establish themselves as trusted leaders in the digital economy. They will also contribute to the collective security of the global digital ecosystem through responsible practices, threat intelligence sharing, and collaboration with industry peers, regulators, and security researchers.
