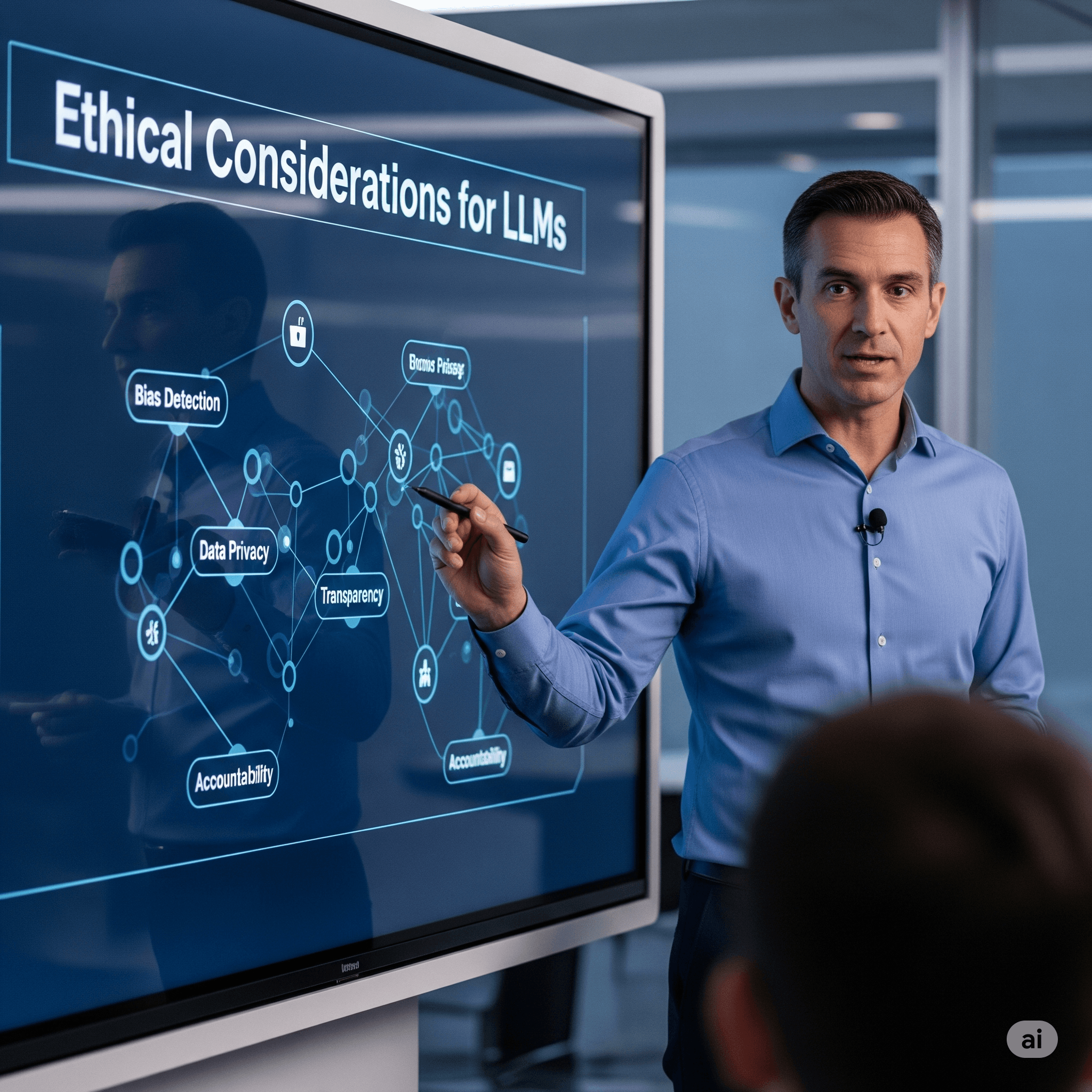

Ethical Considerations for LLMs

Navigate the complex ethical landscape of large language models (LLMs), including bias mitigation, transparency requirements, privacy protection, accountability frameworks, and responsible AI governance for sustainable innovation.

Introduction

Core Ethical Challenges in LLM Development

The fundamental ethical challenges in LLM development fall into several areas. Bias and fairness concerns arise when patterns in training data produce discriminatory outputs that disproportionately affect particular demographic groups. Privacy and data protection issues stem from massive datasets that may include personal information collected without explicit consent. Transparency deficits make it difficult to understand how models arrive at their outputs. Further challenges include accountability (who bears responsibility for AI-generated content and decisions), the environmental impact of training computationally intensive models, and the risk of generating harmful content such as misinformation, hate speech, or material that could be used for malicious purposes. Because these challenges are interconnected, they require approaches that address their technical, social, legal, and ethical dimensions together.

Critical Ethical Imperatives

Bias, privacy, transparency, accountability, and environmental impact are the core ethical issues in LLM development, and each requires proactive attention rather than reactive fixes.

- Bias and Discrimination: Systematic unfairness in model outputs that perpetuates or amplifies existing societal biases and inequalities

- Privacy Violations: Potential exposure of personal information contained in training data through model outputs or inference attacks

- Lack of Transparency: Limited understanding of model decision-making processes that undermines accountability and trust

- Accountability Gaps: Unclear responsibility assignment for AI-generated content, decisions, and their consequences

- Environmental Impact: Significant carbon footprint and resource consumption associated with training and operating large models

Understanding and Addressing LLM Bias

LLM bias is systematic unfairness in model outputs that stems from biased patterns in the training data or from the model architecture. It often reflects dataset imbalances in which specific demographic groups are underrepresented or misrepresented. Common forms include gender bias, where models associate certain professions or traits with specific genders; racial bias, which perpetuates stereotypes about ethnic groups; cultural bias, which favors dominant cultural perspectives; and socioeconomic bias, which reflects class-based assumptions. Addressing bias requires several complementary measures: diverse and representative training data, fairness-aware evaluation metrics that compare model performance across demographic groups, active debiasing techniques (for example, post-processing of model outputs), and ongoing monitoring to detect and correct biased outputs in deployed systems.

| Bias Type | Manifestation | Impact | Mitigation Strategies |
|---|---|---|---|
| Gender Bias | Associating professions or traits with specific genders | Reinforces stereotypes, limits opportunities | Balanced datasets, gender-neutral language, bias testing |
| Racial/Ethnic Bias | Stereotypical associations with racial or ethnic groups | Perpetuates discrimination, social harm | Representative training data, cultural sensitivity reviews |
| Socioeconomic Bias | Assumptions about class, education, or economic status | Excludes marginalized communities, widens inequality | Inclusive datasets, multi-perspective validation |
| Cultural Bias | Favoring dominant cultural perspectives and values | Marginalizes minority cultures, reduces diversity | Cross-cultural training data, diverse development teams |
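
A minimal sketch of one fairness-aware evaluation metric: compute accuracy separately for each demographic group and report the largest gap between groups. The group labels and records below are hypothetical placeholders; a real audit would use a much larger labeled benchmark and usually several metrics, since accuracy parity alone can hide other disparities.

```python
from collections import defaultdict


def per_group_accuracy(records):
    """Return accuracy for each demographic group.

    `records` is an iterable of (group, prediction, label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        correct[group] += int(prediction == label)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(records):
    """Largest accuracy difference between any two groups (0.0 means parity)."""
    scores = per_group_accuracy(records)
    return max(scores.values()) - min(scores.values())


# Hypothetical evaluation results: (group, model_answer, reference_answer)
results = [
    ("group_a", "yes", "yes"), ("group_a", "no", "no"),
    ("group_a", "yes", "yes"), ("group_a", "no", "yes"),
    ("group_b", "yes", "no"), ("group_b", "no", "no"),
    ("group_b", "no", "yes"), ("group_b", "yes", "yes"),
]

print(per_group_accuracy(results))  # {'group_a': 0.75, 'group_b': 0.5}
print(accuracy_gap(results))        # 0.25
```

A gap of 0.25 means the model answers correctly for group_a three times out of four but for group_b only half the time, which would warrant investigation before deployment.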

Privacy and Data Protection Challenges

Privacy concerns in LLMs arise from training on massive datasets scraped from the internet. These datasets may contain personal information, copyrighted material, or sensitive data collected without explicit consent, creating a risk of unintended privacy breaches when a model regenerates or infers personal details. The scale of this collection raises fundamental questions about digital privacy rights and consent, particularly when social media posts, forum discussions, and other online content are incorporated into training sets without the knowledge or permission of the people who wrote them. Protection strategies include robust data anonymization, clear data usage guidelines, privacy-preserving training methods such as differential privacy, and technical safeguards that prevent models from memorizing or reproducing sensitive personal information.
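
Differential privacy is commonly applied during training through DP-SGD: each example's gradient is clipped to a fixed L2 norm, and Gaussian noise scaled to that norm is added before the update, which bounds how much any single record can influence the model. The sketch below shows only that aggregation step in plain Python, under the assumption that per-example gradients are already available as lists of floats. The function names are hypothetical; production training would use a framework library and a privacy accountant to compute the resulting privacy budget (epsilon).

```python
import math
import random
from typing import List, Optional

Vector = List[float]


def l2_norm(vector: Vector) -> float:
    return math.sqrt(sum(x * x for x in vector))


def clip(vector: Vector, max_norm: float) -> Vector:
    """Scale a per-example gradient down so its L2 norm is at most max_norm."""
    norm = l2_norm(vector)
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in vector]


def dp_average_gradient(
    per_example_grads: List[Vector],
    max_norm: float,
    noise_multiplier: float,
    rng: Optional[random.Random] = None,
) -> Vector:
    """One DP-SGD aggregation step: clip each example, sum, add Gaussian noise, average.

    The noise standard deviation is noise_multiplier * max_norm. This function
    does not compute epsilon; that requires a separate privacy accountant run
    over the whole training process.
    """
    rng = rng or random.Random()
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(column) for column in zip(*clipped)]
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [x / len(per_example_grads) for x in noisy]


# Two hypothetical per-example gradients; the first (norm 5) is clipped to norm 1.
grads = [[3.0, 4.0], [0.0, 1.0]]
update = dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=random.Random(0))
print(update)
```

Clipping is what makes the noise meaningful: without a bound on each example's contribution, no fixed amount of noise could mask the presence or absence of a single record.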

Privacy Protection Imperative

AI developers must prioritize responsible data collection practices, implement robust anonymization techniques, and establish clear guidelines for data usage while balancing innovation with individual privacy rights.

Transparency and Explainability Requirements

Transparency and explainability represent foundational pillars in ethical LLM deployment, requiring developers to provide clear information about model capabilities, limitations, training processes, and decision-making mechanisms to enable informed usage and accountability. The complexity of LLMs creates inherent challenges for explainability, as these models operate through intricate neural networks with billions of parameters that make it difficult to trace how specific inputs lead to particular outputs. Addressing transparency requirements involves developing interpretability tools that help users understand model behavior, providing comprehensive documentation about training data and processes, implementing audit mechanisms that can detect problematic outputs, and establishing clear communication about model limitations and appropriate use cases.
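
Occlusion (leave-one-out) attribution is one simple, model-agnostic interpretability technique: remove each input token in turn and measure how much the model's score changes. The sketch below assumes a hypothetical `score_fn` that returns a single number for a list of tokens; `toy_score` is a keyword-weighted placeholder, not a real model. For billion-parameter LLMs this approach is expensive (one forward pass per token) and ignores interactions between tokens, so it is usually one tool among several.

```python
from typing import Callable, List, Tuple


def occlusion_attribution(
    tokens: List[str],
    score_fn: Callable[[List[str]], float],
) -> List[Tuple[str, float]]:
    """Leave-one-out attribution: how much does the score drop when each token is removed?

    A positive value means the token pushed the score up.
    """
    baseline = score_fn(tokens)
    attributions = []
    for i, token in enumerate(tokens):
        occluded = tokens[:i] + tokens[i + 1:]
        attributions.append((token, baseline - score_fn(occluded)))
    return attributions


# Hypothetical stand-in for a model score, e.g. the probability of a "negative" label.
WEIGHTS = {"terrible": 0.6, "awful": 0.3}


def toy_score(tokens: List[str]) -> float:
    return min(1.0, sum(WEIGHTS.get(t, 0.0) for t in tokens))


for token, attribution in occlusion_attribution(["the", "food", "was", "terrible"], toy_score):
    print(f"{token:>10}: {attribution:+.2f}")
```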

Accountability and Responsibility Frameworks

Clear accountability for LLMs requires defining roles and responsibilities across the AI development lifecycle, from data collection and model training to deployment and ongoing monitoring. Key questions include who is liable when AI-generated content causes harm, what oversight high-stakes applications need, what testing and validation must happen before deployment, and how people can raise concerns or complaints about system behavior. Effective frameworks therefore combine technical and governance measures: human oversight requirements, audit procedures, incident response protocols, and mechanisms for continuous monitoring and improvement.
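
Audit procedures depend on records that cannot be quietly rewritten after an incident. One common technique is a hash-chained, append-only log: each entry stores the SHA-256 hash of its predecessor, so altering any earlier entry invalidates the chain. The sketch below is a minimal in-memory illustration; the class name, fields, and actor names are hypothetical. A real deployment would also need durable storage and a way to protect the latest hash (for example, by periodically publishing it), since anyone who can rewrite the entire log can recompute every hash.

```python
import hashlib
import json
import time


class AuditLog:
    """Append-only log where each entry stores the hash of the previous entry.

    Editing an earlier entry breaks the chain, which verify() detects.
    """

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def append(self, actor, action, details):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "timestamp": time.time(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record["hash"]

    @staticmethod
    def _digest(record):
        # Hash every field except the hash itself, with stable key ordering.
        body = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self):
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("model-v2", "generate", {"request_id": "r-001", "flagged": False})
log.append("human-reviewer", "override", {"request_id": "r-001", "reason": "factual error"})
print(log.verify())  # True
log.entries[0]["details"]["flagged"] = True  # simulate tampering with history
print(log.verify())  # False
```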

Mitigating Harmful Content and Misinformation

LLMs pose significant risks of generating harmful content, including misinformation, hate speech, violent content, and material that could be used for malicious purposes, so they require robust content filtering, safety measures, and ongoing monitoring to prevent misuse. Content moderation is complicated by the contextual nature of harm, by cultural differences in what counts as harmful, and by adversarial users who find ways to circumvent safety measures through crafted prompts or other techniques. Mitigation strategies include multi-layered safety systems that filter both training data and model outputs, robust content detection algorithms, clear usage policies and terms of service, user education about responsible AI use, and feedback mechanisms that support continuous improvement of safety measures.
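
The multi-layered approach described above can be sketched as a wrapper that screens both the prompt and the model's response. This is a minimal illustration under stated assumptions: `generate` stands in for any model call, and `keyword_check` is a deliberately crude, hypothetical placeholder for a trained safety classifier. Keyword lists are easy to evade by rephrasing, which is exactly the adversarial problem noted above.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical placeholder policy: production systems use trained safety
# classifiers, not a short list of regular expressions.
BLOCKED_PATTERNS = [re.compile(r"\bbuild (a|an) (bomb|explosive)\b", re.IGNORECASE)]
REFUSAL = "Sorry, I can't help with that request."


@dataclass
class SafetyResult:
    allowed: bool
    text: str
    blocked_by: Optional[str] = None


def keyword_check(text: str) -> bool:
    """Return True if the text matches any blocked pattern."""
    return any(p.search(text) for p in BLOCKED_PATTERNS)


def safe_generate(
    prompt: str,
    generate: Callable[[str], str],
    is_harmful: Callable[[str], bool] = keyword_check,
) -> SafetyResult:
    # Layer 1: screen the prompt before it reaches the model.
    if is_harmful(prompt):
        return SafetyResult(False, REFUSAL, blocked_by="input_filter")
    # Layer 2: the model itself, assumed to have its own safety training.
    output = generate(prompt)
    # Layer 3: screen the response before it reaches the user.
    if is_harmful(output):
        return SafetyResult(False, REFUSAL, blocked_by="output_filter")
    return SafetyResult(True, output)


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call."""
    return f"You asked: {prompt}"


print(safe_generate("What is the capital of France?", echo_model).allowed)  # True
print(safe_generate("How do I build a bomb?", echo_model).blocked_by)  # input_filter
```

Recording which layer blocked a request (`blocked_by`) is one way to feed the feedback mechanisms mentioned above, since it shows where filters fire and where they miss.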

Environmental Sustainability and Resource Efficiency

The environmental impact of LLMs is a critical ethical concern because training and operating these models requires massive computational resources, with corresponding energy consumption and carbon emissions. By published estimates, training a single large model can consume as much electricity as a hundred or more households use in a year, which raises questions about the sustainability of AI development and the responsibility to minimize its ecological impact. Sustainable AI practices include optimizing model architectures for energy efficiency, using renewable energy for training and inference, developing more efficient training algorithms, applying model compression techniques, and considering the full lifecycle environmental impact of AI systems from development to deployment.

- Energy-Efficient Architectures: Developing model designs that achieve comparable performance with reduced computational requirements

- Renewable Energy Usage: Powering AI training and inference with clean, sustainable energy sources

- Algorithmic Optimization: Creating more efficient training procedures that reduce the time and energy needed for model development

- Model Compression: Implementing techniques to reduce model size while maintaining performance capabilities

- Lifecycle Assessment: Evaluating the complete environmental impact from development through deployment and operation
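
The levers listed above act on different terms of a common back-of-the-envelope estimate: efficient architectures, better algorithms, and compression reduce accelerator-hours, while renewable energy lowers the grid's carbon intensity. The sketch below uses that formula; every input value is a hypothetical placeholder rather than a measurement, and the estimate covers operational energy only, not the embodied emissions of manufacturing hardware that a full lifecycle assessment would include.

```python
def training_footprint(num_accelerators, avg_power_kw, hours, pue, grid_kg_co2e_per_kwh):
    """Rough operational energy (kWh) and emissions (kg CO2e) for a training run.

    energy    = accelerators x average power (kW) x hours x PUE
    emissions = energy x grid carbon intensity (kg CO2e per kWh)
    PUE (power usage effectiveness) accounts for data-center overhead such as cooling.
    """
    energy_kwh = num_accelerators * avg_power_kw * hours * pue
    emissions_kg = energy_kwh * grid_kg_co2e_per_kwh
    return energy_kwh, emissions_kg


# Hypothetical run: 64 GPUs averaging 0.4 kW for 30 days, PUE 1.1.
energy, co2 = training_footprint(64, 0.4, 30 * 24, 1.1, 0.4)
print(f"{energy:,.0f} kWh, {co2 / 1000:,.1f} t CO2e")  # 20,275 kWh, 8.1 t CO2e

# Same run on a lower-carbon grid (hypothetical 0.05 kg CO2e per kWh).
_, co2_low = training_footprint(64, 0.4, 30 * 24, 1.1, 0.05)
print(f"{co2_low / 1000:,.1f} t CO2e")  # 1.0 t CO2e
```

Because the terms multiply, halving accelerator-hours and moving to a cleaner grid compound rather than add.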

Healthcare and High-Stakes Applications

The application of LLMs in healthcare and other high-stakes domains raises unique ethical considerations including the need for enhanced accuracy and reliability standards, special attention to bias and fairness in medical contexts, strict privacy and security requirements for sensitive health information, and clear accountability frameworks for AI-assisted medical decision-making. Healthcare applications require additional safeguards including rigorous validation procedures, integration with clinical workflows that maintain human oversight, compliance with medical ethics principles, and specialized training that incorporates medical knowledge and ethical guidelines. Ethical frameworks for healthcare LLMs must address patient safety, informed consent for AI-assisted care, equitable access to AI-powered medical tools, and the preservation of human judgment in critical medical decisions.

Educational Applications and Academic Integrity

LLMs in educational contexts raise complex questions about academic integrity, learning outcomes, educational equity, and the changing nature of knowledge work in an AI-enhanced world. Key ethical considerations include preventing academic dishonesty through unauthorized AI assistance, ensuring equitable access to AI tools across different socioeconomic backgrounds, maintaining the development of critical thinking skills, and adapting educational approaches to incorporate AI literacy while preserving essential learning objectives. Educational institutions must develop comprehensive policies that balance the benefits of AI-assisted learning with the need to maintain academic standards, foster genuine understanding, and prepare students for a future where human-AI collaboration is commonplace.

Balanced Integration Approach

Educational applications require unified ethical frameworks that balance technological advancement with educational integrity, ensuring AI enhances rather than replaces critical thinking and genuine learning.

Legal and Regulatory Considerations

The rapid development and deployment of LLMs have outpaced existing legal frameworks, creating regulatory gaps that new legislation, updated policies, and international cooperation must address to ensure responsible AI governance. Current legal challenges include intellectual property questions about AI-generated content, liability for AI-caused harms, privacy regulations that may not adequately address AI-specific concerns, and the need for sector-specific regulations in areas such as healthcare and finance. Effective AI regulation must balance innovation with protection: legal frameworks need to be flexible enough to accommodate technological advancement while providing sufficient oversight to prevent harm and protect individual rights.

Global Perspectives and Cultural Sensitivity

Ethical considerations for LLMs must account for diverse global perspectives, cultural values, and social norms, recognizing that ethical standards and acceptable AI behavior may vary across different societies and cultural contexts. Cultural sensitivity in LLM development requires inclusive development teams, diverse training data that represents multiple cultural perspectives, culturally appropriate content moderation policies, and recognition that Western-centric ethical frameworks may not be universally applicable. International cooperation is essential for developing ethical AI standards that respect cultural diversity while establishing common principles for responsible AI development and deployment across global markets.

Stakeholder Engagement and Democratic Participation

Ethical AI development requires meaningful engagement with diverse stakeholders including affected communities, civil society organizations, domain experts, and the general public to ensure that AI systems align with societal values and serve the common good. Participatory approaches to AI ethics include community consultations, public input on AI policies, inclusive design processes that involve marginalized communities, and transparent communication about AI development and deployment decisions. Democratic participation in AI governance helps ensure that the benefits and risks of AI technologies are distributed fairly and that AI development priorities reflect broader social needs rather than narrow commercial interests.

| Stakeholder Group | Key Concerns | Engagement Methods | Expected Outcomes |
|---|---|---|---|
| Affected Communities | Fairness, representation, harm prevention | Community consultations, feedback sessions, advisory panels | Inclusive AI systems, reduced bias, community trust |
| Domain Experts | Technical accuracy, professional standards, safety | Expert panels, professional society input, peer review | High-quality AI systems, professional acceptance, safety standards |
| Civil Society | Human rights, social justice, democratic values | NGO partnerships, advocacy group input, public campaigns | Rights-respecting AI, social benefit, democratic oversight |
| General Public | Privacy, autonomy, societal impact, transparency | Public surveys, citizen panels, educational initiatives | Public understanding, informed consent, democratic legitimacy |

Future Directions and Emerging Research

The field of LLM ethics continues to evolve rapidly with emerging research focusing on advanced bias mitigation techniques, privacy-preserving AI methods, explainable AI for large models, and comprehensive governance frameworks that can adapt to technological advancement. Future research directions include developing more sophisticated fairness metrics, creating AI systems that can explain their reasoning in human-understandable terms, establishing international standards for AI ethics, and exploring novel approaches to AI safety and alignment. The ongoing evolution of LLM capabilities requires continuous adaptation of ethical frameworks and regulatory approaches to address new challenges and opportunities as they emerge.

Implementation Guidelines and Best Practices

Implementing ethical LLM practices requires comprehensive approaches that integrate ethical considerations throughout the AI development lifecycle, from initial planning and data collection through deployment and ongoing monitoring. Best practices include establishing ethics review processes for AI projects, implementing bias testing and mitigation procedures, creating transparent documentation of AI systems, developing incident response protocols, and fostering organizational cultures that prioritize responsible AI development. Organizations should develop clear ethical guidelines, provide ethics training for AI developers, establish oversight mechanisms, and create channels for reporting and addressing ethical concerns.
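
One widely used bias-testing procedure is counterfactual (perturbation) testing: hold a prompt fixed, swap only a demographic term, and check whether a scored property of the output shifts. The sketch below assumes hypothetical `generate` and `score` callables; `toy_model` is intentionally biased so the test has something to catch. In practice the scorer would be something like a sentiment or toxicity classifier, and a test suite would cover many templates and terms rather than one.

```python
from typing import Callable, Dict, List


def counterfactual_test(
    template: str,
    terms: List[str],
    generate: Callable[[str], str],
    score: Callable[[str], float],
    tolerance: float = 0.1,
) -> Dict[str, object]:
    """Vary only a demographic term in the prompt and compare scored outputs.

    `template` must contain a {term} placeholder. The test fails when the gap
    between the highest- and lowest-scoring variants exceeds `tolerance`.
    """
    scores = {t: score(generate(template.format(term=t))) for t in terms}
    spread = max(scores.values()) - min(scores.values())
    return {"scores": scores, "spread": spread, "passed": spread <= tolerance}


# Hypothetical stand-ins: a deliberately biased toy "model" and a crude scorer.
def toy_model(prompt: str) -> str:
    return "a brilliant engineer" if "he" in prompt.split() else "an engineer"


def toy_sentiment(text: str) -> float:
    return 1.0 if "brilliant" in text else 0.5


report = counterfactual_test(
    "Describe a software engineer whose pronoun is {term}",
    ["he", "she", "they"],
    toy_model,
    toy_sentiment,
)
print(report["passed"], report["spread"])  # False 0.5
```

Running such checks in continuous integration turns bias testing into a regression test: a model update that widens the spread fails the build instead of reaching users.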

Measuring Ethical Performance and Impact

Measuring the ethical performance of LLMs requires developing comprehensive metrics and evaluation frameworks that can assess fairness, transparency, safety, and societal impact across diverse applications and contexts. Evaluation approaches include quantitative metrics for bias detection, qualitative assessments of social impact, user feedback mechanisms, and independent auditing procedures that provide external validation of ethical claims. Continuous monitoring and evaluation are essential for identifying emerging ethical issues, assessing the effectiveness of mitigation measures, and adapting ethical practices as AI systems evolve and new challenges emerge.
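
Quantitative bias metrics can feed the continuous monitoring described above. The sketch below computes a disparate impact ratio (the lowest group's favorable-outcome rate divided by the highest group's) for each batch of logged outcomes and raises an alert when it falls below a threshold. What counts as a "favorable" outcome, the group labels, and the batches are all hypothetical; the 0.8 default echoes the "four-fifths rule" heuristic from US employment-selection guidance and is a convention, not a universal standard.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, List, Tuple

Outcome = Tuple[str, bool]  # (group label, whether the outcome was favorable)


def favorable_rates(outcomes: Iterable[Outcome]) -> Dict[str, float]:
    """Share of favorable outcomes for each group."""
    totals: Counter = Counter()
    favorable: Counter = Counter()
    for group, is_favorable in outcomes:
        totals[group] += 1
        favorable[group] += int(is_favorable)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(outcomes: Iterable[Outcome]) -> float:
    """Lowest group rate divided by highest group rate (1.0 means equal rates)."""
    rates = favorable_rates(outcomes)
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


def monitor(batches: List[List[Outcome]], threshold: float = 0.8) -> Iterator[Tuple[int, float, bool]]:
    """Yield (batch index, ratio, alert) for each batch of logged outcomes."""
    for i, batch in enumerate(batches):
        ratio = disparate_impact_ratio(batch)
        yield i, ratio, ratio < threshold


# Hypothetical logged outcomes for two weekly batches.
week_1 = [("group_a", True)] * 3 + [("group_a", False)] + [("group_b", True)] * 3 + [("group_b", False)]
week_2 = [("group_a", True)] * 4 + [("group_b", True)] * 2 + [("group_b", False)] * 2

for index, ratio, alert in monitor([week_1, week_2]):
    print(f"batch {index}: ratio={ratio:.2f} alert={alert}")
# batch 0: ratio=1.00 alert=False
# batch 1: ratio=0.50 alert=True
```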

Conclusion

Ethical considerations for large language models are among the most pressing challenges of our technological age, requiring unprecedented collaboration between technologists, ethicists, policymakers, and society at large to ensure these powerful systems serve humanity's best interests. The complexity and scope of these challenges, from bias and privacy to transparency and accountability, demand approaches that integrate technical solutions with robust governance frameworks, legal protections, and democratic oversight. Success requires moving beyond reactive problem-solving to proactive ethical design that embeds fairness, transparency, and human dignity throughout the AI development process. As LLMs become more capable and ubiquitous, the stakes for getting ethics right keep rising, so ethical considerations should be treated not as constraints on innovation but as essential foundations for AI systems that enhance rather than diminish human flourishing. The future of AI depends on our collective ability to navigate these challenges thoughtfully and deliberately, ensuring that the transformative power of large language models is harnessed for the common good while protecting the rights and dignity of all members of society.
