Building Fair and Transparent AI Systems: Ethical Technology Frameworks for Responsible AI Development in 2025

Explore comprehensive frameworks for building fair and transparent AI systems in 2025, featuring explainable AI tools, bias mitigation strategies, accountability frameworks, ethical governance models, and practical approaches to creating trustworthy AI that serves humanity responsibly and equitably.

Introduction

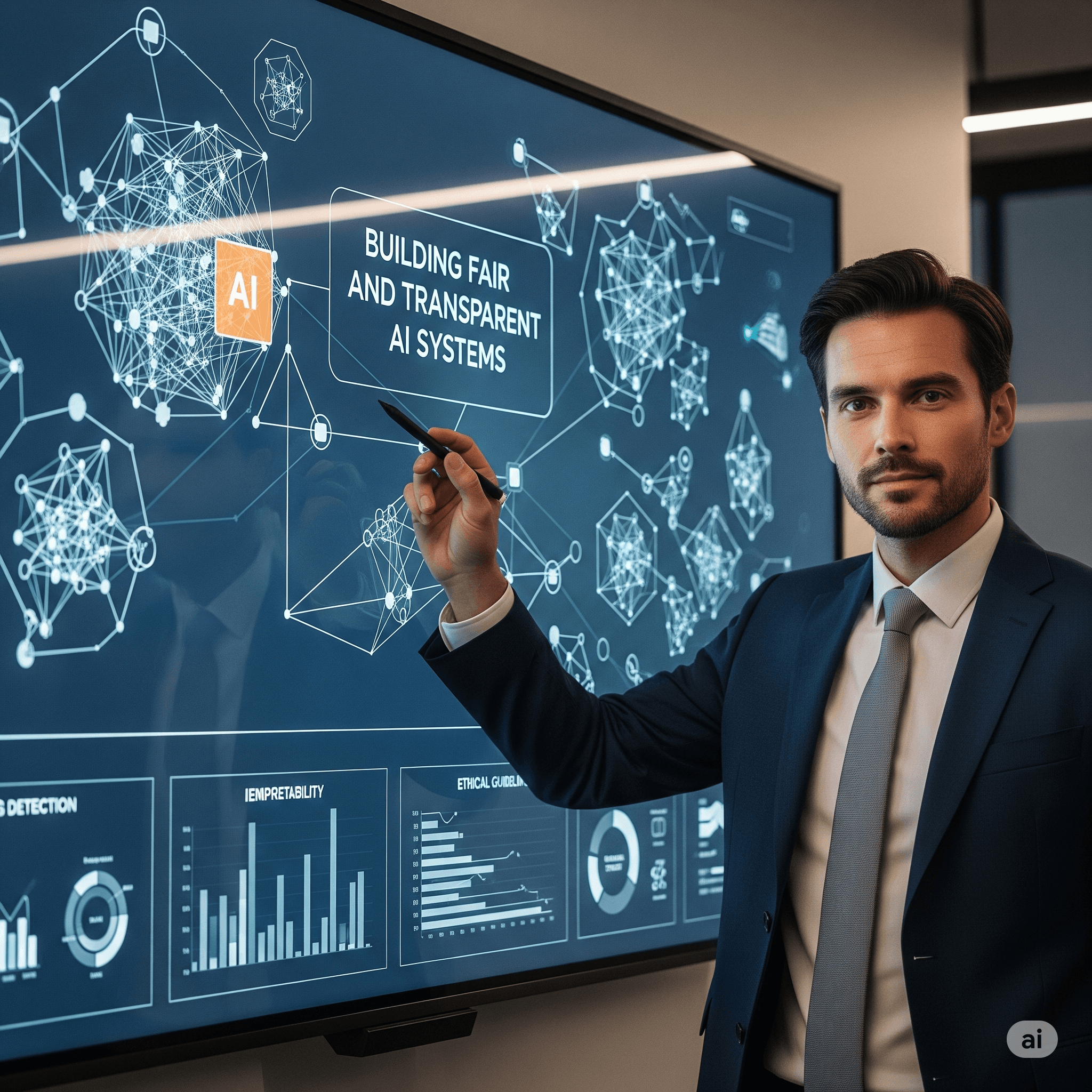

The Ethical AI Imperative: Understanding the Stakes in 2025

The need for ethical AI has reached a critical juncture in 2025. Over 60% of AI-driven hiring tools exhibit bias that disproportionately affects marginalized groups, and facial recognition systems show higher error rates for specific populations, which makes systematic approaches to fairness and transparency in AI development an urgent necessity. These are not isolated cases but systemic issues that undermine trust in AI across sectors: in healthcare, biased diagnostic tools can perpetuate health disparities, and in criminal justice, algorithmic sentencing recommendations may reinforce existing inequalities. Failing to address these concerns harms affected individuals and communities directly, and it threatens the long-term viability and adoption of AI technologies that could deliver significant benefits if developed and deployed responsibly.

Core Principles of Ethical AI Development

Ethical AI development in 2025 is guided by five fundamental principles: transparency and explainability, fairness and non-discrimination, accountability and responsibility, privacy and data protection, and human oversight and control. The principles reinforce one another, and together they address the main challenges of AI ethics without ruling out innovation or performance optimization. Transparency lets stakeholders understand and audit AI decision-making, while fairness guards against algorithmic bias and discrimination against protected groups. Accountability establishes clear responsibility chains for AI outcomes, privacy protection safeguards individual rights, and human oversight keeps ultimate control with people so that AI systems stay aligned with human values and societal needs.

- Transparency and Explainability: AI systems must provide clear, understandable explanations for their decisions to enable trust and accountability

- Fairness and Non-discrimination: AI must be designed to prevent bias and ensure equitable treatment across all demographic groups

- Accountability and Responsibility: Clear lines of responsibility must be established for AI outcomes, with mechanisms for redress and correction

- Privacy and Data Protection: AI systems must safeguard personal information and comply with data protection regulations

- Human Oversight and Control: Humans must maintain meaningful control over AI systems, with the ability to intervene and override decisions
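The human oversight principle can be made concrete with a small routing rule. The following is a minimal sketch, not a prescribed design: the `Decision` record, the `route_decision` and `apply_override` helpers, and the 0.85 review threshold are all hypothetical names and values chosen for illustration. It assumes the model reports a confidence score, and that low-confidence decisions should wait for a person rather than take effect automatically.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    """Hypothetical record of one model decision awaiting finalization."""
    prediction_id: str
    model_outcome: str
    confidence: float
    final_outcome: Optional[str] = None
    decided_by: str = "pending"


def route_decision(decision: Decision, review_threshold: float = 0.85) -> Decision:
    """Auto-finalize confident decisions; queue the rest for human review."""
    if decision.confidence >= review_threshold:
        decision.final_outcome = decision.model_outcome
        decision.decided_by = "model"
    else:
        # No final outcome is set until a reviewer acts.
        decision.decided_by = "human_review_queue"
    return decision


def apply_override(decision: Decision, reviewer: str, outcome: str) -> Decision:
    """Let a named reviewer set or replace the final outcome."""
    decision.final_outcome = outcome
    decision.decided_by = f"human:{reviewer}"
    return decision


queued = route_decision(Decision("PRED_001", "approve", confidence=0.60))
apply_override(queued, reviewer="analyst_7", outcome="deny")
print(queued.final_outcome, queued.decided_by)
```

Recording who made the final call (`decided_by`) also supports the accountability principle, since every outcome can be traced to either the model or a named reviewer.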

| Ethical Principle | Implementation Approach | Key Technologies | Measurable Outcomes |
|---|---|---|---|
| Transparency & Explainability | Explainable AI frameworks, interpretable models, decision audit trails | SHAP, LIME, InterpretML, AI Explainability 360, neuro-symbolic AI | 94% of decisions explainable to stakeholders, reduced time-to-explanation from weeks to hours |
| Fairness & Non-discrimination | Bias detection tools, fairness metrics, diverse training data, algorithmic auditing | IBM AI Fairness 360, Google Fairness Indicators, Aequitas, fairlearn library | Elimination of discriminatory patterns, equitable outcomes across demographic groups |
| Accountability & Responsibility | Governance frameworks, ethics committees, clear responsibility chains, audit mechanisms | AI governance platforms, compliance tracking, automated auditing frameworks | 100% traceability of decisions, defined accountability for AI outcomes |
| Privacy & Data Protection | Data minimization, encryption, federated learning, differential privacy, consent management | Privacy-preserving ML, homomorphic encryption, secure multi-party computation | GDPR/CCPA compliance, zero data breaches, user consent tracking |
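The fairness row above names metrics-based auditing, and equalized odds is one of the metrics this article lists. As a rough illustration of what such a metric measures, here is a minimal, library-free sketch. The helper names `group_rates` and `equalized_odds_gap` are hypothetical and are not the API of any toolkit named in the table. It assumes binary labels and predictions (0 or 1) and reports the larger of the true-positive-rate gap and the false-positive-rate gap across groups.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence, Tuple


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                groups: Sequence[Hashable]) -> Dict[Hashable, Tuple[float, float]]:
    """Return {group: (true_positive_rate, false_positive_rate)}."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            key = "tp" if pred == 1 else "fn"
        else:
            key = "fp" if pred == 1 else "tn"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        # Assumption: a rate with an empty denominator is reported as 0.0.
        tpr = c["tp"] / positives if positives else 0.0
        fpr = c["fp"] / negatives if negatives else 0.0
        rates[group] = (tpr, fpr)
    return rates


def equalized_odds_gap(y_true: Sequence[int], y_pred: Sequence[int],
                       groups: Sequence[Hashable]) -> float:
    """Largest between-group difference in TPR or FPR (0.0 means parity)."""
    rates = group_rates(y_true, y_pred, groups)
    tprs = [tpr for tpr, _ in rates.values()]
    fprs = [fpr for _, fpr in rates.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


# Tiny hand-made example: group "a" is classified perfectly, group "b" is not.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(equalized_odds_gap(y_true, y_pred, groups))
```

Unlike demographic parity, which compares only positive-prediction rates, this check conditions on the true label, so it needs ground-truth outcomes for each group.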

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum
from typing import Any, Dict, List

import numpy as np
import pandas as pd


class BiasType(Enum):
    DEMOGRAPHIC = "demographic"
    PERFORMANCE = "performance"
    REPRESENTATION = "representation"
    MEASUREMENT = "measurement"
    EVALUATION = "evaluation"
    HISTORICAL = "historical"


class ExplainabilityLevel(Enum):
    GLOBAL = "global"  # Model-wide explanations
    LOCAL = "local"  # Individual prediction explanations
    COHORT = "cohort"  # Group-specific explanations
    COUNTERFACTUAL = "counterfactual"  # What-if scenarios


class FairnessMetric(Enum):
    DEMOGRAPHIC_PARITY = "demographic_parity"
    EQUALIZED_ODDS = "equalized_odds"
    CALIBRATION = "calibration"
    INDIVIDUAL_FAIRNESS = "individual_fairness"
    COUNTERFACTUAL_FAIRNESS = "counterfactual_fairness"


@dataclass
class EthicalStandard:
    """Ethical standard or principle for AI system"""
    standard_id: str
    name: str
    description: str
    category: str  # transparency, fairness, accountability, privacy
    requirements: List[str] = field(default_factory=list)
    metrics: List[str] = field(default_factory=list)
    compliance_threshold: float = 0.95
    regulatory_framework: str = ""


@dataclass
class BiasAssessment:
    """Bias assessment result for AI model"""
    assessment_id: str
    model_id: str
    bias_type: BiasType
    affected_groups: List[str]
    severity_score: float  # 0.0 = no bias, 1.0 = severe bias
    detection_method: str
    timestamp: datetime
    mitigation_recommendations: List[str] = field(default_factory=list)
    remediation_status: str = "pending"  # pending, in_progress, resolved, compliant


@dataclass
class ExplanationResult:
    """Explanation result for AI decision"""
    explanation_id: str
    model_id: str
    prediction_id: str
    explanation_level: ExplainabilityLevel
    explanation_method: str
    explanation_data: Dict[str, Any]
    confidence_score: float
    target_audience: str  # technical, business, regulatory, end_user
    generated_timestamp: datetime


@dataclass
class FairnessMetrics:
    """Fairness metrics for model evaluation"""
    model_id: str
    protected_attributes: List[str]
    fairness_scores: Dict[str, float]
    group_metrics: Dict[str, Dict[str, float]]
    overall_fairness_score: float
    evaluation_timestamp: datetime
    meets_fairness_threshold: bool = True


@dataclass
class AIGovernancePolicy:
    """AI governance policy configuration"""
    policy_id: str
    name: str
    scope: str  # model_development, deployment, monitoring
    ethical_standards: List[str] = field(default_factory=list)
    approval_requirements: Dict[str, List[str]] = field(default_factory=dict)
    review_frequency_days: int = 90
    compliance_tracking: bool = True
    stakeholder_groups: List[str] = field(default_factory=list)


class EthicalAIFramework:
    """Comprehensive framework for building fair and transparent AI systems"""

    def __init__(self, framework_name: str):
        self.framework_name = framework_name
        self.ethical_standards: Dict[str, EthicalStandard] = {}
        self.bias_assessments: List[BiasAssessment] = []
        self.explanations: List[ExplanationResult] = []
        self.fairness_evaluations: List[FairnessMetrics] = []
        self.governance_policies: Dict[str, AIGovernancePolicy] = {}

        # Initialize core ethical standards
        self._initialize_ethical_standards()

        # Explainability tools configuration
        # (the 'accuracy' values are illustrative placeholders reused as confidence scores)
        self.explainability_tools = {
            'SHAP': {'type': 'feature_importance', 'supported_models': ['tree', 'linear', 'neural'], 'accuracy': 0.92},
            'LIME': {'type': 'local_explanation', 'supported_models': ['any'], 'accuracy': 0.89},
            'InterpretML': {'type': 'glass_box', 'supported_models': ['ebm', 'linear'], 'accuracy': 0.94},
            'AI_Explainability_360': {'type': 'comprehensive', 'supported_models': ['any'], 'accuracy': 0.91},
            'Neuro_Symbolic': {'type': 'reasoning', 'supported_models': ['hybrid'], 'accuracy': 0.96}
        }

        # Bias detection methods
        self.bias_detection_methods = {
            'statistical_parity': {'type': 'group_fairness', 'threshold': 0.1},
            'equalized_odds': {'type': 'predictive_fairness', 'threshold': 0.1},
            'demographic_parity': {'type': 'group_fairness', 'threshold': 0.1},
            'individual_fairness': {'type': 'individual_fairness', 'threshold': 0.05},
            'counterfactual_fairness': {'type': 'causal_fairness', 'threshold': 0.05}
        }

        # Regulatory compliance frameworks
        self.regulatory_frameworks = {
            'EU_AI_Act': {'scope': 'high_risk_ai', 'requirements': ['transparency', 'human_oversight', 'accuracy']},
            'GDPR': {'scope': 'data_protection', 'requirements': ['consent', 'data_minimization', 'right_to_explanation']},
            'US_Biden_EO': {'scope': 'federal_ai_use', 'requirements': ['safety', 'security', 'trustworthiness']},
            'IEEE_P7001': {'scope': 'transparency_standards', 'requirements': ['documentation', 'explainability']}
        }

    def _initialize_ethical_standards(self):
        """Initialize core ethical standards for AI development"""
        standards_data = [
            {
                'standard_id': 'TRANSPARENCY_001',
                'name': 'AI Transparency and Explainability',
                'description': 'AI systems must provide clear, understandable explanations for their decisions',
                'category': 'transparency',
                'requirements': ['Decision explanations', 'Model documentation', 'Audit trails'],
                'metrics': ['Explanation accuracy', 'User comprehension', 'Decision traceability'],
                'regulatory_framework': 'EU_AI_Act'
            },
            {
                'standard_id': 'FAIRNESS_001',
                'name': 'Algorithmic Fairness and Non-discrimination',
                'description': 'AI systems must treat all individuals and groups fairly without bias',
                'category': 'fairness',
                'requirements': ['Bias testing', 'Diverse training data', 'Fairness metrics'],
                'metrics': ['Demographic parity', 'Equalized odds', 'Calibration'],
                'regulatory_framework': 'US_Biden_EO'
            },
            {
                'standard_id': 'ACCOUNTABILITY_001',
                'name': 'AI Accountability and Responsibility',
                'description': 'Clear accountability structures for AI system outcomes and decisions',
                'category': 'accountability',
                'requirements': ['Governance framework', 'Responsibility assignment', 'Audit mechanisms'],
                'metrics': ['Decision traceability', 'Response time to issues', 'Stakeholder satisfaction'],
                'regulatory_framework': 'IEEE_P7001'
            },
            {
                'standard_id': 'PRIVACY_001',
                'name': 'Data Privacy and Protection',
                'description': 'AI systems must protect individual privacy and comply with data protection laws',
                'category': 'privacy',
                'requirements': ['Data minimization', 'Consent management', 'Privacy-preserving techniques'],
                'metrics': ['Compliance rate', 'Data breach incidents', 'User consent tracking'],
                'regulatory_framework': 'GDPR'
            }
        ]

        for standard_data in standards_data:
            standard = EthicalStandard(**standard_data)
            self.ethical_standards[standard.standard_id] = standard

    def register_governance_policy(self, policy: AIGovernancePolicy) -> bool:
        """Register AI governance policy"""
        self.governance_policies[policy.policy_id] = policy

        print(f"Registered governance policy: {policy.name}")
        print(f" Scope: {policy.scope}")
        print(f" Ethical Standards: {len(policy.ethical_standards)}")
        print(f" Review Frequency: {policy.review_frequency_days} days")

        return True

    def assess_model_bias(self, model_id: str, model_data: pd.DataFrame,
                          protected_attributes: List[str], target_column: str) -> BiasAssessment:
        """Assess AI model for bias across protected attributes"""
        bias_detected = False
        affected_groups = []
        severity_scores = []
        mitigation_recommendations = []

        # Analyze bias for each protected attribute
        for attribute in protected_attributes:
            if attribute not in model_data.columns:
                continue

            # Calculate demographic parity: gap between the highest and lowest group outcome rates
            group_outcomes = model_data.groupby(attribute)[target_column].mean()
            max_diff = group_outcomes.max() - group_outcomes.min()

            # Check bias threshold
            bias_threshold = self.bias_detection_methods['demographic_parity']['threshold']
            if max_diff > bias_threshold:
                bias_detected = True
                affected_groups.append(attribute)
                severity_scores.append(min(max_diff, 1.0))

                # Generate mitigation recommendations
                if max_diff > 0.2:
                    mitigation_recommendations.extend([
                        f"Re-balance training data for {attribute}",
                        "Apply fairness constraints during model training",
                        f"Implement post-processing bias correction for {attribute}"
                    ])
                elif max_diff > 0.1:
                    mitigation_recommendations.extend([
                        f"Monitor {attribute} outcomes continuously",
                        "Consider data augmentation for underrepresented groups"
                    ])

        # Calculate overall severity
        overall_severity = float(np.mean(severity_scores)) if severity_scores else 0.0

        # Create bias assessment (dict.fromkeys de-duplicates while keeping a stable order)
        assessment = BiasAssessment(
            assessment_id=f"BIAS_{uuid.uuid4()}",
            model_id=model_id,
            bias_type=BiasType.DEMOGRAPHIC,
            affected_groups=affected_groups,
            severity_score=overall_severity,
            detection_method="demographic_parity_analysis",
            timestamp=datetime.now(),
            mitigation_recommendations=list(dict.fromkeys(mitigation_recommendations)),
            remediation_status="pending" if bias_detected else "compliant"
        )

        self.bias_assessments.append(assessment)

        # Log results
        status = "⚠️ BIAS DETECTED" if bias_detected else "✅ NO BIAS FOUND"
        print(f"{status} - Model: {model_id}")
        if bias_detected:
            print(f" Affected Attributes: {', '.join(affected_groups)}")
            print(f" Severity Score: {overall_severity:.3f}")
            print(f" Recommendations: {len(assessment.mitigation_recommendations)}")

        return assessment

    def generate_explanation(self, model_id: str, prediction_id: str,
                             explanation_level: ExplainabilityLevel,
                             target_audience: str = "technical") -> ExplanationResult:
        """Generate explanation for AI model decision"""
        # Select appropriate explanation method based on level and audience
        if explanation_level == ExplainabilityLevel.LOCAL:
            if target_audience == "end_user":
                method = "LIME"
                explanation_data = self._generate_user_friendly_explanation()
            else:
                method = "SHAP"
                explanation_data = self._generate_technical_explanation()
        elif explanation_level == ExplainabilityLevel.GLOBAL:
            method = "AI_Explainability_360"
            explanation_data = self._generate_global_explanation()
        else:
            # COHORT and COUNTERFACTUAL both fall back to the cohort explanation
            method = "InterpretML"
            explanation_data = self._generate_cohort_explanation()

        # Calculate confidence based on method accuracy
        confidence = self.explainability_tools[method]['accuracy']

        explanation = ExplanationResult(
            explanation_id=f"EXPLAIN_{uuid.uuid4()}",
            model_id=model_id,
            prediction_id=prediction_id,
            explanation_level=explanation_level,
            explanation_method=method,
            explanation_data=explanation_data,
            confidence_score=confidence,
            target_audience=target_audience,
            generated_timestamp=datetime.now()
        )

        self.explanations.append(explanation)

        print(f"Generated {explanation_level.value} explanation for prediction {prediction_id}")
        print(f" Method: {method} (Confidence: {confidence:.1%})")
        print(f" Target Audience: {target_audience}")
        print(f" Key Factors: {len(explanation_data.get('feature_importance', {}))} features")

        return explanation

    def evaluate_fairness(self, model_id: str, test_data: pd.DataFrame,
                          protected_attributes: List[str], target_column: str) -> FairnessMetrics:
        """Evaluate model fairness across multiple metrics"""
        fairness_scores = {}
        group_positive_rates = {}

        # Calculate demographic parity per protected attribute
        for attribute in protected_attributes:
            if attribute in test_data.columns:
                group_rates = test_data.groupby(attribute)[target_column].mean()
                group_positive_rates[attribute] = group_rates.to_dict()

                # Calculate demographic parity difference
                max_rate = group_rates.max()
                min_rate = group_rates.min()
                parity_diff = abs(max_rate - min_rate)
                fairness_scores[f"{attribute}_demographic_parity"] = 1.0 - parity_diff

        # Calculate overall fairness score
        individual_scores = list(fairness_scores.values())
        overall_fairness = float(np.mean(individual_scores)) if individual_scores else 1.0

        # Check fairness threshold
        fairness_threshold = 0.8  # 80% fairness threshold
        meets_threshold = overall_fairness >= fairness_threshold

        # Create fairness metrics
        metrics = FairnessMetrics(
            model_id=model_id,
            protected_attributes=protected_attributes,
            fairness_scores=fairness_scores,
            group_metrics=group_positive_rates,
            overall_fairness_score=overall_fairness,
            evaluation_timestamp=datetime.now(),
            meets_fairness_threshold=meets_threshold
        )

        self.fairness_evaluations.append(metrics)

        # Log results
        status = "✅ FAIR" if meets_threshold else "⚠️ UNFAIR"
        print(f"{status} - Model: {model_id}")
        print(f" Overall Fairness Score: {overall_fairness:.3f}")
        print(f" Threshold: {fairness_threshold:.3f}")
        if not meets_threshold:
            print(" Action Required: Implement fairness improvements")

        return metrics

    def conduct_ethical_audit(self, model_id: str) -> Dict[str, Any]:
        """Conduct comprehensive ethical audit of AI model"""
        audit_results = {
            'model_id': model_id,
            'audit_timestamp': datetime.now().isoformat(),
            'compliance_status': {},
            'bias_assessment': None,
            'fairness_evaluation': None,
            'explanation_coverage': 0.0,
            'overall_score': 0.0,
            'recommendations': [],
            'regulatory_compliance': {}
        }

        # Check bias assessments
        model_bias_assessments = [b for b in self.bias_assessments if b.model_id == model_id]
        if model_bias_assessments:
            latest_bias = max(model_bias_assessments, key=lambda x: x.timestamp)
            audit_results['bias_assessment'] = {
                'severity_score': latest_bias.severity_score,
                'affected_groups': latest_bias.affected_groups,
                'status': latest_bias.remediation_status,
                'recommendations': latest_bias.mitigation_recommendations
            }

        # Check fairness evaluations
        model_fairness = [f for f in self.fairness_evaluations if f.model_id == model_id]
        if model_fairness:
            latest_fairness = max(model_fairness, key=lambda x: x.evaluation_timestamp)
            audit_results['fairness_evaluation'] = {
                'overall_score': latest_fairness.overall_fairness_score,
                'meets_threshold': latest_fairness.meets_fairness_threshold,
                'protected_attributes': latest_fairness.protected_attributes
            }

        # Check explanation coverage.
        # Simplified: the framework does not track total predictions, so coverage is
        # 1.0 when at least one explanation exists for the model and 0.0 otherwise.
        model_explanations = [e for e in self.explanations if e.model_id == model_id]
        audit_results['explanation_coverage'] = 1.0 if model_explanations else 0.0

        # Evaluate compliance with ethical standards
        compliance_scores = []
        for standard in self.ethical_standards.values():
            compliance_score = self._evaluate_standard_compliance(model_id, standard)
            audit_results['compliance_status'][standard.name] = compliance_score
            compliance_scores.append(compliance_score)

        # Calculate overall ethical score
        overall_score = float(np.mean(compliance_scores)) if compliance_scores else 0.0
        audit_results['overall_score'] = overall_score

        # Generate recommendations
        recommendations = self._generate_audit_recommendations(audit_results)
        audit_results['recommendations'] = recommendations

        # Check regulatory compliance
        for framework in self.regulatory_frameworks:
            compliance_rate = self._assess_regulatory_compliance(model_id, framework)
            audit_results['regulatory_compliance'][framework] = compliance_rate

        return audit_results

    def generate_transparency_report(self) -> Dict[str, Any]:
        """Generate comprehensive transparency report"""
        report = {
            'framework_name': self.framework_name,
            'report_timestamp': datetime.now().isoformat(),
            'summary_statistics': {},
            'bias_analysis': {},
            'fairness_analysis': {},
            'explanation_analysis': {},
            'governance_status': {},
            'regulatory_compliance': {},
            'recommendations': []
        }

        # Summary statistics
        report['summary_statistics'] = {
            'total_bias_assessments': len(self.bias_assessments),
            'bias_issues_detected': len([b for b in self.bias_assessments if b.severity_score > 0.1]),
            'fairness_evaluations': len(self.fairness_evaluations),
            'models_meeting_fairness_threshold': len([f for f in self.fairness_evaluations if f.meets_fairness_threshold]),
            'explanations_generated': len(self.explanations),
            'governance_policies_active': len(self.governance_policies)
        }

        # Bias analysis: count and average severity per bias type
        if self.bias_assessments:
            bias_by_type = {}
            for assessment in self.bias_assessments:
                bias_type = assessment.bias_type.value
                if bias_type not in bias_by_type:
                    bias_by_type[bias_type] = {'count': 0, 'avg_severity': 0.0}
                bias_by_type[bias_type]['count'] += 1
                bias_by_type[bias_type]['avg_severity'] += assessment.severity_score

            for bias_type in bias_by_type:
                bias_by_type[bias_type]['avg_severity'] /= bias_by_type[bias_type]['count']

            report['bias_analysis'] = bias_by_type

        # Fairness analysis
        if self.fairness_evaluations:
            avg_fairness = np.mean([f.overall_fairness_score for f in self.fairness_evaluations])
            fairness_compliance_rate = len([f for f in self.fairness_evaluations if f.meets_fairness_threshold]) / len(self.fairness_evaluations)

            report['fairness_analysis'] = {
                'average_fairness_score': avg_fairness,
                'compliance_rate': fairness_compliance_rate,
                'total_evaluations': len(self.fairness_evaluations)
            }

        # Explanation analysis
        if self.explanations:
            explanation_by_level = {}
            for explanation in self.explanations:
                level = explanation.explanation_level.value
                if level not in explanation_by_level:
                    explanation_by_level[level] = 0
                explanation_by_level[level] += 1

            avg_confidence = np.mean([e.confidence_score for e in self.explanations])

            report['explanation_analysis'] = {
                'explanations_by_level': explanation_by_level,
                'average_confidence': avg_confidence,
                'total_explanations': len(self.explanations)
            }

        # Generate framework-level recommendations
        framework_recommendations = [
            "Implement regular bias auditing for all AI models",
            "Establish clear accountability structures for AI outcomes",
            "Enhance transparency through comprehensive documentation",
            "Provide ongoing ethics training for AI development teams",
            "Create stakeholder feedback mechanisms for continuous improvement"
        ]
        report['recommendations'] = framework_recommendations

        return report

    # Helper methods for explanation generation and compliance evaluation.
    # The explanation helpers return fixed sample payloads for the demo;
    # they do not call SHAP, LIME, or any other explainability library.
    def _generate_user_friendly_explanation(self) -> Dict[str, Any]:
        """Generate user-friendly explanation"""
        return {
            'summary': 'This decision was based on the most important factors in your application',
            'key_factors': ['Credit history (35% influence)', 'Income level (25% influence)', 'Employment status (20% influence)'],
            'confidence': 'High confidence in this decision',
            'what_if_scenarios': ['Improving credit score could change outcome', 'Higher income could improve approval chances']
        }

    def _generate_technical_explanation(self) -> Dict[str, Any]:
        """Generate technical explanation"""
        return {
            'feature_importance': {'credit_score': 0.35, 'income': 0.25, 'employment_years': 0.20, 'debt_ratio': 0.15, 'age': 0.05},
            'shap_values': [0.23, -0.15, 0.18, -0.12, 0.03],
            'model_prediction': 0.78,
            'confidence_intervals': [0.71, 0.85],
            'feature_interactions': {'credit_score_x_income': 0.08}
        }

    def _generate_global_explanation(self) -> Dict[str, Any]:
        """Generate global model explanation"""
        return {
            'model_behavior': 'Credit approval model prioritizes payment history and financial stability',
            'decision_boundaries': 'Credit score > 650 and debt-to-income < 30% strongly predict approval',
            'feature_distributions': {'credit_score': [300, 850], 'income': [20000, 200000]},
            'model_limitations': 'May not account for recent financial changes or non-traditional credit sources'
        }

    def _generate_cohort_explanation(self) -> Dict[str, Any]:
        """Generate cohort-specific explanation"""
        return {
            'cohort_definition': 'First-time homebuyers aged 25-35',
            'cohort_patterns': 'This group typically approved with credit scores above 620',
            'comparative_analysis': 'Approval rate 15% higher than general population',
            'cohort_recommendations': 'Focus on building credit history and stable employment'
        }

    def _evaluate_standard_compliance(self, model_id: str, standard: EthicalStandard) -> float:
        """Evaluate compliance with specific ethical standard"""
        # Simplified compliance evaluation
        if standard.category == 'transparency':
            model_explanations = [e for e in self.explanations if e.model_id == model_id]
            return min(len(model_explanations) / 10, 1.0)  # Expect at least 10 explanations
        elif standard.category == 'fairness':
            model_fairness = [f for f in self.fairness_evaluations if f.model_id == model_id]
            if model_fairness:
                return model_fairness[-1].overall_fairness_score

        return 0.8  # Default compliance score

    def _generate_audit_recommendations(self, audit_results: Dict[str, Any]) -> List[str]:
        """Generate recommendations based on audit results"""
        recommendations = []

        if audit_results['overall_score'] < 0.8:
            recommendations.append("Implement comprehensive bias mitigation strategies")

        if audit_results.get('explanation_coverage', 0) < 0.7:
            recommendations.append("Increase explanation coverage for model decisions")

        bias_assessment = audit_results.get('bias_assessment')
        if bias_assessment and bias_assessment['severity_score'] > 0.2:
            recommendations.append("Address high-severity bias issues immediately")

        fairness_eval = audit_results.get('fairness_evaluation')
        if fairness_eval and not fairness_eval['meets_threshold']:
            recommendations.append("Improve model fairness across protected attributes")

        return recommendations

    def _assess_regulatory_compliance(self, model_id: str, framework: str) -> float:
        """Assess compliance with regulatory framework"""
        # Simplified regulatory compliance assessment
        requirements = self.regulatory_frameworks[framework]['requirements']
        compliance_scores = []

        for requirement in requirements:
            if requirement == 'transparency':
                score = len([e for e in self.explanations if e.model_id == model_id]) / 10
            elif requirement == 'human_oversight':
                score = 0.9  # Assume human oversight is in place
            elif requirement == 'safety':
                bias_assessments = [b for b in self.bias_assessments if b.model_id == model_id]
                score = (1.0 - np.mean([b.severity_score for b in bias_assessments])) if bias_assessments else 1.0
            else:
                score = 0.8  # Default score for other requirements

            compliance_scores.append(min(score, 1.0))

        return float(np.mean(compliance_scores)) if compliance_scores else 0.0


# Example usage and demonstration
def run_ethical_ai_demo():
    print("=== Fair and Transparent AI Systems Framework Demo ===")

    # Initialize ethical AI framework
    ethical_framework = EthicalAIFramework("Enterprise Ethical AI Framework")

    # Register governance policies
    governance_policies = [
        AIGovernancePolicy(
            policy_id="GOV_DEV_001",
            name="AI Development Ethics Policy",
            scope="model_development",
            ethical_standards=["TRANSPARENCY_001", "FAIRNESS_001"],
            approval_requirements={
                "high_risk_models": ["ethics_committee", "technical_review", "stakeholder_approval"],
                "medium_risk_models": ["technical_review", "manager_approval"]
            },
            review_frequency_days=60,
            stakeholder_groups=["developers", "ethicists", "business_users", "compliance"]
        ),
        AIGovernancePolicy(
            policy_id="GOV_DEPLOY_001",
            name="AI Deployment Governance",
            scope="deployment",
            ethical_standards=["ACCOUNTABILITY_001", "PRIVACY_001"],
            approval_requirements={
                "production_deployment": ["security_review", "compliance_check", "executive_approval"]
            },
            review_frequency_days=30,
            stakeholder_groups=["operations", "security", "compliance", "executives"]
        )
    ]

    for policy in governance_policies:
        ethical_framework.register_governance_policy(policy)
        print()  # Add spacing

    # Create sample model data for bias assessment
    print("\n=== Model Bias Assessment Demo ===")

    # Generate synthetic dataset for demonstration
    np.random.seed(42)
    n_samples = 1000

    model_data = pd.DataFrame({
        'age': np.random.randint(18, 80, n_samples),
        'gender': np.random.choice(['male', 'female'], n_samples),
        'race': np.random.choice(['white', 'black', 'hispanic', 'asian', 'other'], n_samples),
        'credit_score': np.random.randint(300, 850, n_samples),
        'income': np.random.randint(20000, 150000, n_samples),
        'approved': np.random.choice([0, 1], n_samples, p=[0.3, 0.7])
    })

    # Introduce some bias for demonstration
    bias_mask = (model_data['gender'] == 'female') & (model_data['race'].isin(['black', 'hispanic']))
    model_data.loc[bias_mask, 'approved'] = np.random.choice([0, 1], bias_mask.sum(), p=[0.6, 0.4])

    # Assess bias.
    # Note: 'age' is grouped on raw values, so each group is small and the parity gap
    # can exceed the threshold from sampling noise alone; age bands would be more robust.
    bias_assessment = ethical_framework.assess_model_bias(
        model_id="CREDIT_MODEL_001",
        model_data=model_data,
        protected_attributes=['gender', 'race', 'age'],
        target_column='approved'
    )

    # Generate explanations
    print("\n=== AI Explanation Generation Demo ===")

    explanations = [
        {
            'prediction_id': 'PRED_001',
            'level': ExplainabilityLevel.LOCAL,
            'audience': 'end_user'
        },
        {
            'prediction_id': 'PRED_002',
            'level': ExplainabilityLevel.GLOBAL,
            'audience': 'technical'
        },
        {
            'prediction_id': 'PRED_003',
            'level': ExplainabilityLevel.COHORT,
            'audience': 'business'
        }
    ]

    for exp_config in explanations:
        explanation = ethical_framework.generate_explanation(
            model_id="CREDIT_MODEL_001",
            prediction_id=exp_config['prediction_id'],
            explanation_level=exp_config['level'],
            target_audience=exp_config['audience']
        )
        print()  # Add spacing

    # Evaluate model fairness
    print("\n=== Model Fairness Evaluation Demo ===")

    fairness_metrics = ethical_framework.evaluate_fairness(
        model_id="CREDIT_MODEL_001",
        test_data=model_data,
        protected_attributes=['gender', 'race'],
        target_column='approved'
    )

    # Conduct comprehensive ethical audit
    print("\n=== Comprehensive Ethical Audit ===")

    audit_results = ethical_framework.conduct_ethical_audit("CREDIT_MODEL_001")

    print(f"Ethical Audit Results for {audit_results['model_id']}:")
    print(f"Overall Ethical Score: {audit_results['overall_score']:.3f}/1.000")

    if audit_results['bias_assessment']:
        bias_info = audit_results['bias_assessment']
        print("\nBias Assessment:")
        print(f" Severity Score: {bias_info['severity_score']:.3f}")
        print(f" Status: {bias_info['status']}")
        if bias_info['affected_groups']:
            print(f" Affected Groups: {', '.join(bias_info['affected_groups'])}")

    if audit_results['fairness_evaluation']:
        fairness_info = audit_results['fairness_evaluation']
        print("\nFairness Evaluation:")
        print(f" Overall Score: {fairness_info['overall_score']:.3f}")
        print(f" Meets Threshold: {fairness_info['meets_threshold']}")

    print("\nCompliance Status:")
    for standard, score in audit_results['compliance_status'].items():
        status = "✅" if score >= 0.8 else "⚠️"
        print(f" {status} {standard}: {score:.3f}")

    print("\nRegulatory Compliance:")
    for framework, compliance_rate in audit_results['regulatory_compliance'].items():
        status = "✅" if compliance_rate >= 0.8 else "⚠️"
        print(f" {status} {framework}: {compliance_rate:.3f}")

    if audit_results['recommendations']:
        print("\nRecommendations:")
        for i, recommendation in enumerate(audit_results['recommendations'], 1):
            print(f" {i}. {recommendation}")

    # Generate transparency report
    print("\n=== Transparency Report ===")

    transparency_report = ethical_framework.generate_transparency_report()

    print(f"Transparency Report for {transparency_report['framework_name']}")

    summary = transparency_report['summary_statistics']
    print("\nSummary Statistics:")
    print(f" Total Bias Assessments: {summary['total_bias_assessments']}")
    print(f" Bias Issues Detected: {summary['bias_issues_detected']}")
    print(f" Fairness Evaluations: {summary['fairness_evaluations']}")
    print(f" Models Meeting Fairness Threshold: {summary['models_meeting_fairness_threshold']}")
    print(f" Explanations Generated: {summary['explanations_generated']}")

    if transparency_report.get('bias_analysis'):
        print("\nBias Analysis by Type:")
        for bias_type, stats in transparency_report['bias_analysis'].items():
            print(f" {bias_type.title()}: {stats['count']} assessments (avg severity: {stats['avg_severity']:.3f})")

    if transparency_report.get('fairness_analysis'):
        fairness_analysis = transparency_report['fairness_analysis']
        print("\nFairness Analysis:")
        print(f" Average Fairness Score: {fairness_analysis['average_fairness_score']:.3f}")
        print(f" Compliance Rate: {fairness_analysis['compliance_rate']:.1%}")

    if transparency_report.get('explanation_analysis'):
        explanation_analysis = transparency_report['explanation_analysis']
        print("\nExplanation Analysis:")
        print(f" Average Confidence: {explanation_analysis['average_confidence']:.3f}")
        print(f" Explanations by Level: {explanation_analysis['explanations_by_level']}")

    print("\nFramework Recommendations:")
    for i, rec in enumerate(transparency_report['recommendations'], 1):
        print(f" {i}. {rec}")

    return ethical_framework


# Run demonstration
if __name__ == "__main__":
    demo_framework = run_ethical_ai_demo()
```

Explainable AI (XAI) Tools and Techniques

Explainable AI has grown into a broad ecosystem of tools and techniques in 2025. Established frameworks such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are now complemented by newer approaches, including neuro-symbolic AI, which combines neural networks with symbolic reasoning to pursue both high performance and interpretability. Other advances include causal discovery algorithms that infer candidate cause-effect relationships from data, explainable foundation models that trace reasoning paths in large language models, and federated explainability techniques that explain models trained on decentralized data without exposing the underlying records. Cloud platforms have lowered the barrier to entry: services such as Google Cloud's Vertex Explainable AI and the responsible AI tooling in Microsoft's Azure Machine Learning expose explanation capabilities for many model types through managed APIs, reducing implementation effort and enabling wider adoption across organizations.

- SHAP (SHapley Additive exPlanations): Provides consistent and accurate feature importance explanations for individual predictions across different model types

- LIME (Local Interpretable Model-agnostic Explanations): Generates local explanations for any machine learning model by approximating decisions with interpretable models

- Neuro-Symbolic AI: Combines neural networks with symbolic reasoning to achieve 94% explainable decisions while maintaining high performance

- Causal Discovery Algorithms: Automatically identify cause-effect relationships in data, reducing explanation time from weeks to hours for complex models

- Cloud XAI Platforms: Democratized explainability through integrated cloud services supporting 200+ model types with API-based access
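To make the idea behind SHAP concrete, the sketch below computes exact Shapley values for a tiny model using only the standard library. It is an illustration of the underlying attribution rule, not the SHAP library's API: the function `shapley_values`, the scoring function `toy_credit_score`, and the feature names are all hypothetical, and "absent" features are simply replaced by a baseline value, which is one of several possible conventions.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, List


def shapley_values(
    predict: Callable[[Dict[str, float]], float],
    instance: Dict[str, float],
    baseline: Dict[str, float],
) -> Dict[str, float]:
    """Exact Shapley attribution of predict(instance) - predict(baseline).

    Features outside a coalition take their baseline value. The cost grows
    as 2^n, so this is only practical for a handful of features.
    """
    features: List[str] = list(instance)
    n = len(features)

    def value(coalition: frozenset) -> float:
        mixed = {f: (instance[f] if f in coalition else baseline[f]) for f in features}
        return predict(mixed)

    attributions: Dict[str, float] = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude f.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {f}) - value(s))
        attributions[f] = total
    return attributions


# Hypothetical scoring function with an interaction term (illustration only).
def toy_credit_score(x: Dict[str, float]) -> float:
    return 0.5 * x["income"] - 0.3 * x["debt"] + 0.2 * x["income"] * x["tenure"]


applicant = {"income": 4.0, "debt": 2.0, "tenure": 3.0}
reference = {"income": 0.0, "debt": 0.0, "tenure": 0.0}
phi = shapley_values(toy_credit_score, applicant, reference)

for name, contribution in phi.items():
    print(f"{name}: {contribution:+.2f}")
```

The attributions always sum to the difference between the prediction for the applicant and the prediction for the reference point, and the interaction term is split evenly between the two features involved. Libraries such as SHAP rely on approximations and model-specific shortcuts because this exact enumeration does not scale.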

Bias Detection and Mitigation Strategies

Comprehensive bias detection and mitigation has become a cornerstone of fair AI development in 2025, utilizing sophisticated tools including IBM AI Fairness 360, Google Fairness Indicators, and Aequitas to identify, measure, and address various forms of algorithmic bias across demographic groups, performance metrics, and temporal dimensions. These frameworks enable systematic assessment of fairness through multiple metrics including demographic parity, equalized odds, and individual fairness measures while providing automated bias detection capabilities that continuously monitor AI systems for emerging discriminatory patterns. Advanced bias mitigation strategies include pre-processing techniques that balance training datasets, in-processing methods that incorporate fairness constraints during model training, and post-processing approaches that adjust model outputs to achieve equitable outcomes while maintaining predictive accuracy and business value.
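As a rough illustration of two of the metrics named above, the following sketch computes a demographic parity difference and an equalized odds difference from binary labels, binary predictions, and a group attribute. The helper names and the toy data are assumptions made for this example; the dedicated toolkits compute these metrics with more options and safeguards.

```python
from collections import defaultdict
from typing import Dict, Sequence


def group_rates(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]
) -> Dict[str, Dict[str, float]]:
    """Per-group selection rate, true positive rate, and false positive rate."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += pred
        if truth == 1:
            c["pos"] += 1
            c["tp"] += pred
        else:
            c["neg"] += 1
            c["fp"] += pred
    # Assumes every group contains both positive and negative examples.
    return {
        g: {
            "selection_rate": c["pred_pos"] / c["n"],
            "tpr": c["tp"] / c["pos"],
            "fpr": c["fp"] / c["neg"],
        }
        for g, c in counts.items()
    }


def spread(rates: Dict[str, Dict[str, float]], key: str) -> float:
    """Largest minus smallest value of one rate across groups."""
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)


def demographic_parity_difference(rates: Dict[str, Dict[str, float]]) -> float:
    return spread(rates, "selection_rate")


def equalized_odds_difference(rates: Dict[str, Dict[str, float]]) -> float:
    # Equalized odds asks for equal TPR and equal FPR; report the worse gap.
    return max(spread(rates, "tpr"), spread(rates, "fpr"))


# Toy data: eight decisions across two groups (illustration only).
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
dp_diff = demographic_parity_difference(rates)
eo_diff = equalized_odds_difference(rates)
print(f"Demographic parity difference: {dp_diff:.2f}")
print(f"Equalized odds difference: {eo_diff:.2f}")
```

A value of zero on either metric means the groups are treated identically by that measure. In general the two metrics cannot both be driven to zero when base rates differ between groups, which is why the choice of metric is a policy decision as much as a technical one.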

| Bias Detection Tool | Capabilities | Supported Metrics | Integration Features |
|---|---|---|---|
| IBM AI Fairness 360 | Comprehensive bias detection, mitigation algorithms, fairness metrics | Demographic parity, equalized odds, calibration, individual fairness | Python/R libraries, Jupyter notebooks, enterprise integration, automated reporting |
| Google Fairness Indicators | TensorFlow integration, model comparison, evaluation across decision thresholds | Binary and multi-class classification metrics computed per group (for example false positive and false negative rates) | TensorBoard visualization, What-If Tool, TFX pipeline integration |
| Aequitas | Bias auditing, fairness assessment, regulatory compliance reporting | Statistical parity, predictive parity, false positive rate equality | Web interface, command-line tools, CSV/database input, compliance templates |
| Microsoft Fairlearn | Fairness assessment, constrained optimization, post-processing threshold adjustment, model selection | Group fairness metrics such as demographic parity and equalized odds | Scikit-learn compatibility, Azure ML integration, interactive dashboards |
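The pre-processing family of mitigation techniques can be illustrated with reweighing, a scheme described by Kamiran and Calders in which each training example receives a weight so that group membership and label become statistically independent in the weighted data. The sketch below is a minimal standard-library version; the function names and the toy dataset are assumptions for this example and do not reproduce any toolkit's API.

```python
from collections import Counter
from typing import List, Sequence


def reweighing_weights(groups: Sequence[str], labels: Sequence[int]) -> List[float]:
    """Weight for each example: P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(
    group: str, groups: Sequence[str], labels: Sequence[int], weights: Sequence[float]
) -> float:
    """Share of a group's total weight that sits on positive labels."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Toy training set: group A is labeled positive far more often than group B.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate("A", groups, labels, weights)
rate_b = weighted_positive_rate("B", groups, labels, weights)
print(f"Weighted positive rate A: {rate_a:.2f}, B: {rate_b:.2f}")
```

The resulting weights would typically be passed to a learner that accepts per-sample weights. Reweighing changes only how much each example counts, so the original records stay untouched, which can make the intervention easier to document and audit.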

Data Governance and Privacy-Preserving AI

Data governance has become the foundation for transparent and fair AI systems in 2025, encompassing comprehensive frameworks for data collection, storage, processing, and usage that ensure compliance with privacy regulations including GDPR and CCPA while maintaining the data quality and integrity necessary for effective AI model training. Privacy-preserving AI techniques including federated learning, differential privacy, homomorphic encryption, and secure multi-party computation enable organizations to develop AI models while protecting individual privacy and maintaining regulatory compliance. These approaches allow AI systems to learn from distributed datasets without centralizing sensitive information, ensuring that personal data remains protected while enabling the development of robust, generalizable AI models that serve diverse populations fairly and effectively.

Privacy-Preserving AI Techniques

Advanced privacy-preserving techniques including federated learning, differential privacy, and homomorphic encryption enable AI development while protecting individual privacy, with federated explainability allowing model explanations without compromising data security.
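Differential privacy is the easiest of these techniques to show in a few lines. The sketch below applies the Laplace mechanism to a counting query: because adding or removing one person changes a count by at most 1, noise drawn from a Laplace distribution with scale 1/epsilon gives epsilon-differential privacy for that single release. The function names and the toy records are assumptions for this example, and a production system should rely on a vetted library, since naive floating-point sampling like this has known weaknesses.

```python
import random
from typing import Callable, Dict, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    records: Sequence[Dict],
    predicate: Callable[[Dict], bool],
    epsilon: float,
    rng: random.Random,
) -> float:
    """Release a count with Laplace noise calibrated to sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Toy records: 100 of 300 hypothetical applicants were approved.
rng = random.Random(42)
applicants = [{"approved": i % 3 == 0} for i in range(300)]
noisy = private_count(applicants, lambda r: r["approved"], epsilon=1.0, rng=rng)
print(f"Noisy approval count: {noisy:.1f}")
```

Smaller values of epsilon add more noise and give stronger privacy. Each additional release spends more of the privacy budget, so repeated queries against the same data need to be accounted for together.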

Regulatory Compliance and Standards Framework

The regulatory landscape for AI transparency and fairness has matured significantly in 2025. Key reference points include the EU AI Act, the GDPR provisions on automated decision-making (Article 22), and the IEEE 7001-2021 standard on transparency of autonomous systems (developed as P7001). In the United States, President Biden's 2023 executive order on AI (Executive Order 14110) was rescinded in January 2025 and should not be treated as a current requirement. Taken together, these frameworks call for tiered transparency levels based on system risk and impact, continuous documentation updates throughout development and deployment, and explanations tailored to different stakeholder groups, including end users, regulators, and technical auditors. Organizations must implement compliance frameworks that address sector-specific requirements while staying flexible enough to adapt to rapidly evolving AI technologies, so that regulatory adherence supports rather than impedes innovation and business value creation.

Accountability Frameworks and Governance Structures

Robust accountability frameworks have become essential for ethical AI implementation in 2025, establishing clear governance structures that define roles, responsibilities, and decision-making processes throughout the AI lifecycle from development to deployment and ongoing monitoring. These frameworks typically include ethics committees or review boards that provide oversight for AI development projects, established protocols for addressing AI-related issues or complaints, and comprehensive documentation systems that maintain audit trails for AI decisions and outcomes. Effective AI governance requires collaboration across multiple disciplines including technical teams, ethics experts, legal compliance, and business stakeholders to ensure that ethical considerations are integrated throughout AI development and deployment processes rather than treated as an afterthought or compliance checkbox.
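One way to make an audit trail tamper-evident is to chain entries together with hashes, so that altering any past record invalidates every hash after it. The sketch below is a minimal in-memory version under stated assumptions: the `AuditTrail` class, its field names, and the example entries are hypothetical, and a real deployment would also need durable storage, access control, and trusted timestamps.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: Dict[str, Any]) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    entries: List[Dict[str, Any]] = field(default_factory=list)

    def record(self, model_id: str, decision: str, reviewer: str, rationale: str) -> str:
        """Append an entry linked to the previous one and return its hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        payload = {
            "model_id": model_id,
            "decision": decision,
            "reviewer": reviewer,
            "rationale": rationale,
        }
        entry = {"payload": payload, "prev_hash": prev_hash, "hash": _digest(prev_hash, payload)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute the whole chain; any edited entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, entry["payload"]):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("CREDIT_MODEL_001", "deployment_blocked", "ethics_board", "bias severity above threshold")
trail.record("CREDIT_MODEL_001", "retraining_approved", "ethics_board", "reweighed training data")
print("Audit trail intact:", trail.verify())
```

Hash chaining only detects changes; it does not prevent them. Publishing or escrowing the latest hash with an independent party is a common way to make silent rewrites of the whole chain harder.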

Human-Centered AI Design and Stakeholder Engagement

Human-centered AI design has emerged as a critical approach for building fair and transparent systems that prioritize human values, needs, and capabilities while leveraging AI to augment rather than replace human judgment and decision-making. This design philosophy emphasizes the importance of involving diverse stakeholders including end users, affected communities, domain experts, and ethicists throughout the AI development process to ensure that systems reflect diverse perspectives and serve all users equitably. Successful human-centered AI requires empathy and creativity as core skills, recognizing that while AI excels at pattern recognition and optimization, humans provide essential capabilities including moral reasoning, contextual understanding, and emotional intelligence that are crucial for making ethical decisions in complex, ambiguous situations.

Continuous Monitoring and Improvement Systems

Continuous monitoring and improvement systems have become essential for maintaining fair and transparent AI operations in 2025, implementing real-time oversight mechanisms that track model performance, detect emerging bias patterns, and enable rapid response to ethical concerns as AI systems encounter new data and evolving social contexts. These systems utilize automated monitoring tools that continuously assess fairness metrics, explanation quality, and compliance with ethical standards while providing alerts when performance degrades or new bias patterns emerge. Effective monitoring requires integration of user feedback systems that enable affected individuals and communities to report concerns or issues, creating feedback loops that inform model improvements and ensure that AI systems remain aligned with human values and societal expectations over time.
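The monitoring loop described above can be sketched as a sliding window over recent decisions that raises an alert when the gap in selection rates between groups exceeds a threshold. Everything here is illustrative: the `FairnessMonitor` class, the window size, the 0.2 threshold, and the synthetic decision stream are assumptions chosen to keep the example small, not recommended values.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Optional, Tuple


class FairnessMonitor:
    """Tracks selection rates per group over the most recent decisions."""

    def __init__(self, window: int = 40, max_gap: float = 0.2, min_per_group: int = 10) -> None:
        self._recent: Deque[Tuple[str, int]] = deque(maxlen=window)
        self.max_gap = max_gap
        self.min_per_group = min_per_group

    def selection_rates(self) -> Dict[str, float]:
        totals: Dict[str, int] = defaultdict(int)
        positives: Dict[str, int] = defaultdict(int)
        for group, outcome in self._recent:
            totals[group] += 1
            positives[group] += outcome
        # Skip groups with too few observations to give a stable rate.
        return {g: positives[g] / totals[g] for g in totals if totals[g] >= self.min_per_group}

    def observe(self, group: str, approved: bool) -> Optional[str]:
        """Record one decision and return an alert message if the gap is too large."""
        self._recent.append((group, int(approved)))
        rates = self.selection_rates()
        if len(rates) < 2:
            return None
        gap = max(rates.values()) - min(rates.values())
        if gap > self.max_gap:
            return f"ALERT: selection-rate gap {gap:.2f} exceeds {self.max_gap:.2f}"
        return None


monitor = FairnessMonitor()

# Phase 1: both groups are approved about half of the time.
phase1_alerts: List[str] = []
for i in range(40):
    group = "A" if i % 2 == 0 else "B"
    alert = monitor.observe(group, (i // 2) % 2 == 0)
    if alert:
        phase1_alerts.append(alert)

# Phase 2: simulated drift, group B stops being approved at all.
phase2_alerts: List[str] = []
for i in range(40):
    group = "A" if i % 2 == 0 else "B"
    alert = monitor.observe(group, group == "A" and (i // 2) % 2 == 0)
    if alert:
        phase2_alerts.append(alert)

print(f"Phase 1 alerts: {len(phase1_alerts)}, phase 2 alerts: {len(phase2_alerts)}")
```

A windowed check like this reacts to recent behavior rather than lifetime averages, which is what makes it useful for catching drift. The trade-off is sensitivity to noise, so the window size and the minimum count per group need tuning against real traffic volumes.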

Industry-Specific Applications and Use Cases

Fair and transparent AI principles are being implemented across diverse industries, with sector-specific applications that address distinct ethical challenges and regulatory requirements while delivering business value through improved trust, compliance, and decision-making quality. In healthcare, transparent AI helps clinicians understand diagnostic recommendations and supports equitable treatment across patient populations. In finance, explainable credit models let lenders justify individual lending decisions while maintaining fairness across demographic groups. Educational institutions implement ethical AI guidelines that ensure fair treatment of students while protecting privacy and promoting learning outcomes. Criminal justice applications require the highest levels of transparency and fairness so that algorithmic tools support rather than undermine justice and equity.

Technical Implementation Best Practices

Technical implementation of fair and transparent AI systems requires adherence to best practices that integrate ethical considerations throughout the development pipeline rather than treating them as post-development additions or compliance requirements. Key practices include conducting ethical impact assessments before beginning AI projects, implementing diverse and representative training datasets, using multiple evaluation metrics that assess both performance and fairness, and establishing clear documentation standards that enable reproducibility and auditability. Development teams must proactively address potential biases through techniques including adversarial training, constraint optimization, and ensemble methods while ensuring that fairness improvements do not significantly compromise model performance or business objectives.

Implementation Challenges

Building fair and transparent AI faces challenges including balancing transparency with performance, overcoming organizational resistance to ethical frameworks, addressing regulatory gaps, and managing the technical complexity of implementing comprehensive fairness measures.

Organizational Culture and Ethics Training

Building ethical AI capabilities requires fundamental changes in organizational culture and comprehensive ethics training that develops both technical skills and ethical awareness across AI development teams and business stakeholders. Successful ethical AI implementation depends on creating organizational cultures that prioritize fairness, transparency, and accountability while providing ongoing education on AI ethics principles, bias detection techniques, and regulatory requirements. Training programs must address both technical aspects of implementing fairness measures and broader ethical considerations including the societal impact of AI decisions, the importance of diverse perspectives in AI development, and the responsibility of technologists to consider the broader implications of their work beyond immediate business objectives.

Economic and Business Value of Ethical AI

Ethical AI implementation delivers significant economic and business value beyond regulatory compliance, creating competitive advantages through enhanced customer trust, reduced legal and reputational risks, improved decision-making quality, and access to broader markets that value responsible AI practices. Organizations implementing comprehensive ethical AI frameworks report improved stakeholder relationships, reduced regulatory scrutiny, and enhanced brand reputation that translates into business growth and customer loyalty. The business case for ethical AI includes risk mitigation benefits including reduced liability exposure, improved talent acquisition and retention among ethically-minded professionals, and access to partnerships and markets that require demonstrated commitment to responsible AI practices, ultimately creating sustainable competitive advantages that support long-term business success.

Future Directions and Emerging Trends

The future of fair and transparent AI will be shaped by emerging technologies including quantum computing for privacy-preserving analytics, advanced causal inference techniques for better understanding of AI decision-making, and next-generation explainability methods that provide intuitive explanations for increasingly complex AI systems. Emerging trends include the development of universal fairness metrics that work across different domains and cultures, automated ethics systems that can detect and address ethical issues without human intervention, and international standards for AI ethics that enable global cooperation on responsible AI development. The evolution toward more sophisticated ethical AI will require continued collaboration between technologists, ethicists, policymakers, and affected communities to ensure that AI systems serve humanity's best interests while enabling innovation and economic growth.

- Quantum-Enhanced Privacy: Quantum computing enabling ultra-secure privacy-preserving AI analytics and explanation generation

- Automated Ethics Systems: AI systems that can detect, assess, and address ethical issues autonomously with minimal human intervention

- Universal Fairness Metrics: Cross-cultural and cross-domain fairness measures that work consistently across diverse contexts and applications

- Causal Explainability: Advanced causal inference techniques that provide deeper understanding of AI decision-making processes

- Global Ethics Standards: International frameworks for AI ethics that enable cooperation and consistency across different regulatory jurisdictions

Building Collective Responsibility for Ethical AI

Creating truly fair and transparent AI systems requires collective action across governments, businesses, academia, and civil society to establish shared standards, collaborative frameworks, and accountability mechanisms that ensure AI development serves the broader public interest while enabling innovation and economic growth. Governments must create balanced regulations that protect citizens while enabling innovation, businesses must integrate ethical principles into their operations and competitive strategies, and academic institutions must prepare the next generation of AI professionals with strong ethical foundations alongside technical skills. The success of ethical AI depends on recognizing that responsibility for AI outcomes is shared across multiple stakeholders, requiring ongoing dialogue, collaboration, and commitment to continuous improvement in how we develop, deploy, and govern AI systems in service of humanity's best interests.

Conclusion

Building fair and transparent AI systems represents one of the most critical challenges and opportunities of 2025, requiring systematic approaches to explainability, bias mitigation, accountability, and governance that ensure artificial intelligence serves humanity equitably and responsibly while enabling innovation and economic growth across diverse sectors and applications. The maturation of ethical AI from theoretical principles to practical implementation frameworks demonstrates that fairness and transparency are not barriers to AI adoption but essential foundations for sustainable, trustworthy AI systems that create lasting value for organizations and society. As regulatory requirements continue to evolve and stakeholder expectations for ethical AI increase, organizations that proactively implement comprehensive fairness and transparency measures will establish competitive advantages through enhanced trust, reduced risks, and superior decision-making capabilities that enable long-term success in an increasingly AI-driven economy. The future of AI depends on our collective commitment to developing systems that augment rather than replace human judgment, respect individual rights and dignity, and contribute positively to social equity and human flourishing while maintaining the performance and innovation necessary for addressing complex global challenges. The journey toward fair and transparent AI requires ongoing collaboration between technologists, ethicists, policymakers, and affected communities, recognizing that the development of responsible AI is not a destination but a continuous process of learning, improvement, and adaptation that ensures AI systems remain aligned with evolving human values and societal needs throughout their lifecycle and impact.
